L2 and Directory cache controller


Revision as of 19:49, 13 December 2017

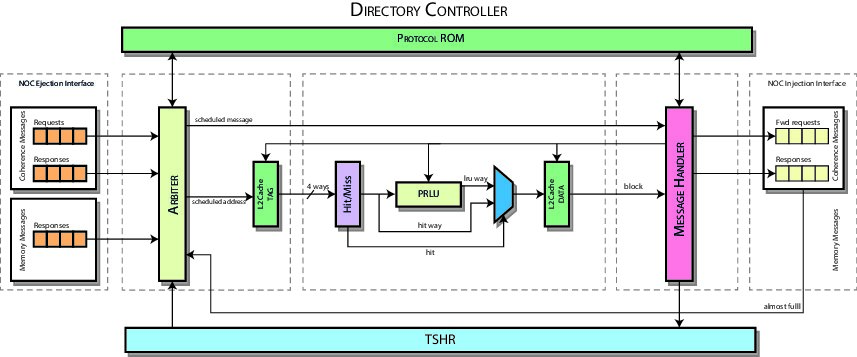

The L2 & Directory cache controller is the component that manages the L2 cache and its directory (recall that the directory is inclusive).

This component interfaces with the Network Interface in order to send and receive coherence requests.


Introduction

This component is composed of three stages, each with its own tasks. Although the structure may resemble a pipeline, the component is not pipelined.

The stage division was adopted to manage the complexity of the component and to ease the testing phase.

Stage 1

Stage 1 is responsible for issuing requests to the controller. A request can be a replacement request from the local core or a coherence request/response from the network interface.

Issue Signals

To issue a request, all of the following must hold:

- the TSHR is not full and does not already hold the same request;

- the network interface is available (both the forwarded-request network and the response network);

- the other stages are not busy.

For a replacement request, these conditions become:

```systemverilog
assign can_issue_replacement_request = !rp_empty && !tshr_full && !replacement_request_tshr_hit &&
    !( dc2_pending || dc3_pending ) &&
    ni_forwarded_request_network_available && ni_response_network_available;
```
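To make the condition easy to check by hand, here is a minimal Python behavioral model of `can_issue_replacement_request`. It is a sketch, not the RTL: each argument mirrors the signal of the same name as a plain boolean, and the function itself is hypothetical.

```python
def can_issue_replacement_request(rp_empty: bool,
                                  tshr_full: bool,
                                  replacement_request_tshr_hit: bool,
                                  dc2_pending: bool,
                                  dc3_pending: bool,
                                  ni_forwarded_request_network_available: bool,
                                  ni_response_network_available: bool) -> bool:
    """Behavioral model of the Stage 1 issue condition for replacements."""
    return (not rp_empty                            # a replacement is queued
            and not tshr_full                       # room for a new TSHR entry
            and not replacement_request_tshr_hit    # same block not already pending
            and not (dc2_pending or dc3_pending)    # Stages 2 and 3 are idle
            and ni_forwarded_request_network_available
            and ni_response_network_available)


# A queued replacement with idle stages and free networks can be issued.
print(can_issue_replacement_request(False, False, False, False, False, True, True))
```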

A coherence request must satisfy the following constraints in addition to those above:

- the network interface provides a valid request;

- if the TSHR already has an entry for the requested block, that entry must be invalid, or

- if the TSHR already has a valid entry for the requested block, the request must not be stalled by the protocol ROM (see Stall Protocol ROM).

The last two conditions are added in order to give priority to pending requests.

```systemverilog
assign can_issue_request = ni_request_valid && !tshr_full &&
    ( !request_tshr_hit ||
      ( request_tshr_hit && !request_tshr_entry_info.valid ) ||
      ( request_tshr_hit && request_tshr_entry_info.valid && !stall_request ) ) &&
    !( dc2_pending || dc3_pending ) &&
    ni_forwarded_request_network_available && ni_response_network_available;
```
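The following Python behavioral model of `can_issue_request` is a sketch for reasoning about the TSHR-related terms; `request_tshr_entry_valid` stands in for `request_tshr_entry_info.valid`, and the function is hypothetical. It also shows that the three-way disjunction on the TSHR lookup reduces to "not (hit and valid and stall)": only a request that hits a valid TSHR entry and is stalled by the protocol ROM is held back.

```python
def can_issue_request(ni_request_valid: bool,
                      tshr_full: bool,
                      request_tshr_hit: bool,
                      request_tshr_entry_valid: bool,
                      stall_request: bool,
                      dc2_pending: bool,
                      dc3_pending: bool,
                      ni_forwarded_request_network_available: bool,
                      ni_response_network_available: bool) -> bool:
    """Behavioral model of the Stage 1 issue condition for coherence requests."""
    # Same three alternatives as the RTL; equivalent to
    # not (request_tshr_hit and request_tshr_entry_valid and stall_request).
    tshr_ok = (not request_tshr_hit
               or (request_tshr_hit and not request_tshr_entry_valid)
               or (request_tshr_hit and request_tshr_entry_valid and not stall_request))
    return (ni_request_valid
            and not tshr_full
            and tshr_ok
            and not (dc2_pending or dc3_pending)
            and ni_forwarded_request_network_available
            and ni_response_network_available)
```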

Finally, to issue a response it is sufficient that the network interface provides a valid response:

```systemverilog
assign can_issue_response = ni_response_valid;
```

TSHR Signals

To find out whether a particular request has already been issued, the tag and set of each type of request are provided to the TSHR on a dedicated lookup port. The data returned by the TSHR for a class of request are valid if and only if the corresponding hit signal is asserted. Here is the code for the coherence request signals:

```systemverilog
// Signals to TSHR
assign ni_request_address = ni_request.memory_address;
assign dc1_tshr_lookup_tag[REQUEST_TSHR_LOOKUP_PORT] = ni_request_address.tag;
assign dc1_tshr_lookup_set[REQUEST_TSHR_LOOKUP_PORT] = ni_request_address.index;

// Signals from TSHR
assign request_tshr_hit        = tshr_lookup_hit[REQUEST_TSHR_LOOKUP_PORT];
assign request_tshr_index      = tshr_lookup_index[REQUEST_TSHR_LOOKUP_PORT];
assign request_tshr_entry_info = tshr_lookup_entry_info[REQUEST_TSHR_LOOKUP_PORT];
```
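A lookup port can be pictured as an associative search over the TSHR entries. The Python sketch below assumes that a hit means a tag-and-set match regardless of the entry's valid bit, since the issue logic above treats "hit on an invalid entry" as a separate case; the `TshrEntry` fields beyond `valid`, `state` and `owner` and the function `tshr_lookup` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TshrEntry:
    # Hypothetical layout; the text only mentions valid, state and owner.
    valid: bool
    tag: int
    set_index: int
    state: str
    owner: int


def tshr_lookup(entries: List[TshrEntry], tag: int, set_index: int
                ) -> Tuple[bool, int, Optional[TshrEntry]]:
    """Model of one TSHR lookup port: returns (hit, index, entry_info).

    index and entry_info are only meaningful when hit is True, mirroring
    the rule that TSHR outputs are valid only if the hit signal is asserted.
    """
    for index, entry in enumerate(entries):
        if entry.tag == tag and entry.set_index == set_index:
            return True, index, entry
    return False, 0, None


tshr = [TshrEntry(True, 0x1A, 3, "S_D", 2),
        TshrEntry(False, 0x2B, 5, "I", 0)]
hit, index, info = tshr_lookup(tshr, 0x2B, 5)  # hits entry 1, whose valid bit is clear
```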

Stall Protocol ROM

To comply with the coherence protocol, all incoming coherence requests on blocks in certain non-stable states have to be stalled. This task is performed by a protocol ROM whose output, when asserted, stalls the issue of the coherence request: for example, when a block is in state S_D and a getS, getM or replacement request for the same block is received. To compute this signal, the protocol ROM needs the request type, the block state, and whether the request comes from the current owner of the block:

```systemverilog
assign dpr_state        = tshr_lookup_entry_info[REQUEST_TSHR_LOOKUP_PORT].state;
assign dpr_message_type = ni_request.packet_type;
assign dpr_from_owner   = ni_request.source == request_tshr_entry_info.owner;

dc_stall_protocol_rom stall_protocol_rom (
    .input_state         ( dpr_state        ),
    .input_request       ( dpr_message_type ),
    .input_is_from_owner ( dpr_from_owner   ),
    .dpr_output_stall    ( stall_request    )
);
```
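Since it is a ROM, the stall unit is essentially a lookup table indexed by (state, request type, from-owner). The Python sketch below encodes only the S_D example given above, treating `from_owner` as a don't-care for those rows; the string encodings of states and requests, the "unlisted rows do not stall" default, and the names `STALL_ROM` and `stall_protocol_rom` are assumptions, and the real ROM has rows for every non-stable state.

```python
from itertools import product
from typing import Dict, Tuple

# Hypothetical encoding: (state, request type, request is from owner) -> stall.
# Only the S_D rows come from the text above.
STALL_ROM: Dict[Tuple[str, str, bool], bool] = {}
for request, from_owner in product(("getS", "getM", "replacement"), (False, True)):
    STALL_ROM[("S_D", request, from_owner)] = True  # owner is a don't-care here


def stall_protocol_rom(state: str, request: str, from_owner: bool) -> bool:
    """Model of dc_stall_protocol_rom; unlisted combinations do not stall."""
    return STALL_ROM.get((state, request, from_owner), False)
```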

Requests Scheduler

Once the issue conditions have been evaluated, two or more requests may be ready at the same time, so a scheduler is needed. This scheduler uses the following fixed priorities, from highest to lowest:

- Replacement request

- Coherence response

- Coherence request

This ordering ensures that coherence is preserved. Once a request type is scheduled, this block drives the output signals to Stage 2 accordingly.
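A fixed-priority scheduler simply grants the highest-priority ready source. The Python sketch below models it with the three `can_issue_*` conditions as inputs; the function name `schedule` and the returned labels are hypothetical.

```python
from typing import Optional

# Highest priority first, as in the list above.
PRIORITY = ("replacement", "response", "request")


def schedule(can_issue_replacement: bool,
             can_issue_response: bool,
             can_issue_request: bool) -> Optional[str]:
    """Return the request type granted this cycle, or None if nothing is ready."""
    ready = {
        "replacement": can_issue_replacement,
        "response": can_issue_response,
        "request": can_issue_request,
    }
    for kind in PRIORITY:
        if ready[kind]:
            return kind
    return None
```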

L2 Tag & Directory State Cache

Finally, there is a cache memory that stores the L2 tags and their directory state (recall that the directory is inclusive). The directory state is updated when the request is processed by Stage 3.

Stage 2

Stage 2 is responsible for managing the L2 Data Cache and forwarding signals from Stage 1 to Stage 3. It contains the L2 Data Cache and all the related logic for managing cache hits and block replacement. The replacement policy is LRU (Least Recently Used).

The L2 Data Cache contains cache data and coherence data, i.e. the owner and the sharer list (the directory state is stored in the L2 Tag & Directory State Cache of Stage 1).

Stage 3 updates the LRU information and the cache data once the request has been processed.
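The text does not say how the LRU state is encoded in hardware (exact recency ordering, pseudo-LRU bits, age counters, ...). As an illustration of the policy only, the Python sketch below keeps an explicit recency list per set; the class `LruSet` and the 4-way associativity used in the example are hypothetical.

```python
from typing import List


class LruSet:
    """Per-set LRU state, keeping ways ordered from most to least recently used."""

    def __init__(self, num_ways: int) -> None:
        self.order: List[int] = list(range(num_ways))  # order[0] is the MRU way

    def touch(self, way: int) -> None:
        """Mark a way as most recently used (e.g. when Stage 3 updates the LRU)."""
        self.order.remove(way)
        self.order.insert(0, way)

    def victim(self) -> int:
        """Way to replace when a new block must be stored: the least recently used."""
        return self.order[-1]


lru = LruSet(4)   # hypothetical 4-way set
lru.touch(3)
lru.touch(0)
print(lru.victim())
```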

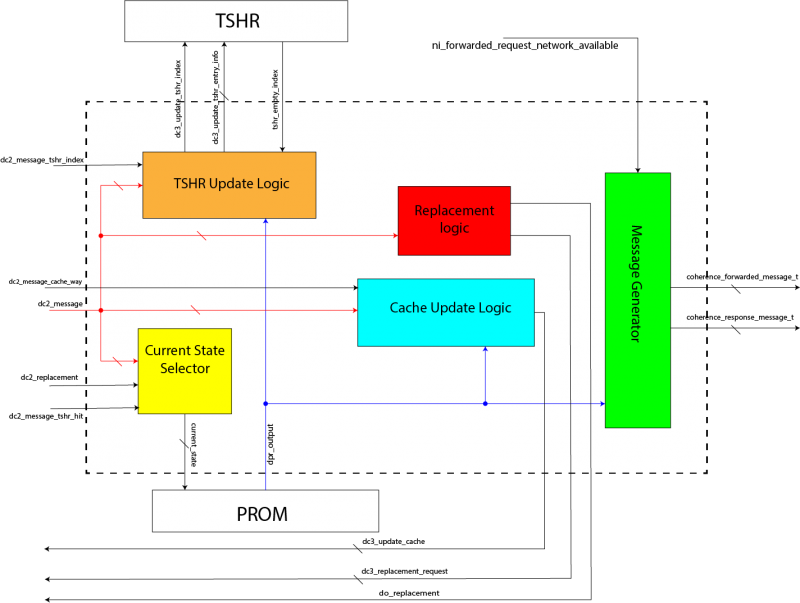

Stage 3

Stage 3 is responsible for the actual execution of requests. Once a request is processed, this module sends signals to the units in the previous stages in order to update their control data. Each group of signals directed to a particular unit is managed by a subsystem, each shown in the picture below. Each subsystem is purely combinational logic that converts the protocol ROM outputs into the appropriate commands for its unit.

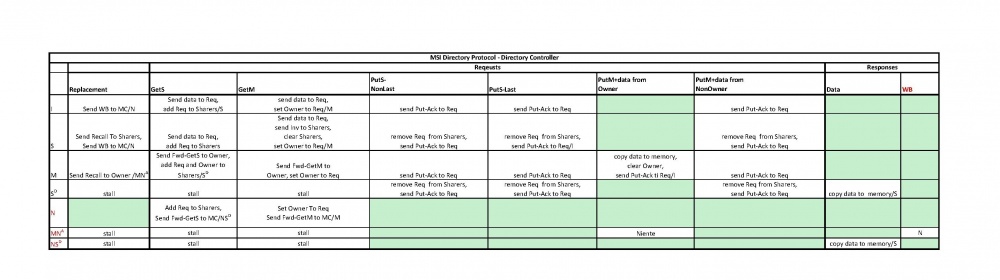

Protocol ROM

This module implements the coherence protocol represented in the figure below.

The coherence protocol is MSI with some changes due to the directory's inclusivity. In particular, a new stable state N has been added, meaning that the block is not cached in the directory and has to be fetched from off-chip memory. This state is necessary because, when a block reaches the stable state I, it is not automatically written back to off-chip memory until a replacement request for that block is issued. Hence the stable state I means that the block is cached only by the directory controller, which may hold a more recent copy than memory.

Furthermore, two other non-stable states have been added:

- state MN_A, in which the directory controller is waiting for data from the block owner in order to send it to off-chip memory after a replacement request for that block has been issued. Subsequent requests on the same block are stalled until the data has been received from the owner and sent to off-chip memory. Note that the block is invalidated, so a new access to off-chip memory is required to use it again;

- state NS_D, in which the directory controller is waiting for data from off-chip memory in order to serve the coherence request(s) for that block. Subsequent requests on the same block are stalled until the data has been received from off-chip memory and sent to the requestor(s).

TSHR Update Logic

The TSHR can be updated in three ways:

- entry allocation;

- entry deallocation;

- entry update.

The TSHR stores the data of cache lines whose coherence transactions are pending, i.e. cache lines in a non-stable state. Hence an entry is allocated every time a cache line moves from a stable to a non-stable state. Conversely, an entry is deallocated when a cache line returns to a stable state. Finally, an entry is updated when some of its information changes while the cache line remains in a non-stable state.

```systemverilog
assign tshr_allocate   =  current_state_is_stable & !next_state_is_stable;
assign tshr_deallocate = !current_state_is_stable &  next_state_is_stable;
assign tshr_update     = !current_state_is_stable & !next_state_is_stable & coherence_update_info_en;
```

When one of these signals is asserted, the corresponding information is passed to the TSHR. The remaining checks are simply sanity checks that verify all signals are set properly.

Note that if the operation is an entry allocation, the index of the first empty TSHR entry is passed. This index is obtained directly from the TSHR unit. At this point an empty TSHR entry is guaranteed to exist, otherwise the request would not have been issued (see Issue Signals).

If the operation is an update or a deallocation, the index is obtained from Stage 1 (through Stage 2), where the TSHR is queried for the index of the entry associated with the current request.

```systemverilog
assign dc3_update_tshr_index = tshr_allocate ? tshr_empty_index : dc2_message_tshr_index;
```
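The TSHR update logic depends only on whether the current and next states are stable. The Python sketch below models it, assuming the stable states are exactly M, S, I and N (MSI plus N, as described in Protocol ROM) and that every other state, such as S_D, MN_A or NS_D, is non-stable; the function names and the example transitions are illustrative, not taken from the RTL.

```python
from typing import Tuple

STABLE_STATES = {"M", "S", "I", "N"}  # assumption: MSI plus N


def is_stable(state: str) -> bool:
    return state in STABLE_STATES


def tshr_update_ops(current_state: str, next_state: str,
                    coherence_update_info_en: bool) -> Tuple[bool, bool, bool]:
    """Return (tshr_allocate, tshr_deallocate, tshr_update)."""
    cur_stable = is_stable(current_state)
    nxt_stable = is_stable(next_state)
    tshr_allocate = cur_stable and not nxt_stable         # entering a transient state
    tshr_deallocate = (not cur_stable) and nxt_stable     # transaction completed
    tshr_update = (not cur_stable) and (not nxt_stable) and coherence_update_info_en
    return tshr_allocate, tshr_deallocate, tshr_update


def tshr_index(tshr_allocate: bool, tshr_empty_index: int,
               dc2_message_tshr_index: int) -> int:
    """Model of dc3_update_tshr_index: a free entry on allocation, else the looked-up one."""
    return tshr_empty_index if tshr_allocate else dc2_message_tshr_index
```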

Cache Update Logic

The cache can be updated in three ways:

- entry allocation;

- entry deallocation;

- entry update.

Unlike the TSHR, the cache stores the data of cache lines whose coherence transactions are complete, i.e. cache lines in a stable state. Hence an entry is allocated every time a cache line moves to a stable state, except when the state is N (that is, when the cache line has to be invalidated). Conversely, an entry is deallocated when a cache line enters a non-stable state, i.e. when its data are written into the TSHR (see TSHR Update Logic). Finally, an entry is updated when some of its information changes while the cache line remains in a stable state.

```systemverilog
assign allocate_cache   = next_state_is_stable & ( coherence_update_info_en | dpr_output.store_data ) &
                          ~( tshr_deallocate & dpr_output.invalidate_cache_way ) & ~update_cache;
assign deallocate_cache = tshr_allocate & dc2_message_cache_hit;
assign update_cache     = current_state_is_stable & next_state_is_stable & dc2_message_cache_hit &
                          ( coherence_update_info_en | dpr_output.store_data );
```

When one of these signals is asserted, the corresponding information is passed to the cache memory.

The remaining checks are simply sanity checks that verify all signals are set properly.
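The Python sketch below models the three cache commands with the same equations; `store_data` and `invalidate_cache_way` stand in for `dpr_output.store_data` and `dpr_output.invalidate_cache_way`, and the function name is hypothetical. Note that `update_cache` is computed first only because Python evaluates statements in order; in the SystemVerilog continuous assignments the order does not matter.

```python
from typing import Tuple


def cache_update_ops(current_state_is_stable: bool,
                     next_state_is_stable: bool,
                     coherence_update_info_en: bool,
                     store_data: bool,
                     tshr_allocate: bool,
                     tshr_deallocate: bool,
                     invalidate_cache_way: bool,
                     dc2_message_cache_hit: bool) -> Tuple[bool, bool, bool]:
    """Return (allocate_cache, deallocate_cache, update_cache)."""
    writes_info = coherence_update_info_en or store_data
    # Stable -> stable on a block already in the cache: modify in place.
    update_cache = (current_state_is_stable and next_state_is_stable
                    and dc2_message_cache_hit and writes_info)
    # Entering a stable state, unless the block is being invalidated (state N).
    allocate_cache = (next_state_is_stable and writes_info
                      and not (tshr_deallocate and invalidate_cache_way)
                      and not update_cache)
    # The block moves into the TSHR: free its cache way.
    deallocate_cache = tshr_allocate and dc2_message_cache_hit
    return allocate_cache, deallocate_cache, update_cache
```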

Replacement Logic

Every time a replacement occurs, the evicted cache block is not explicitly invalidated, because the same cache line is overwritten with a new valid block belonging to another coherence request. The evicted block is placed in a replacement queue, where it waits until a new request can be issued from Stage 1 (this replacement request has the highest priority and is scheduled before all other pending requests; see Requests Scheduler).

This module therefore manages the replacement queue and enqueues a cache block when a replacement occurs, that is when the current coherence request needs to store its information in the cache memory ((allocate_cache || update_cache) && !dc2_message_cache_hit), it is not itself a replacement request (!is_replacement), and it does not need the TSHR to complete (!deallocate_cache, see Cache Update Logic):

```systemverilog
assign do_replacement = dc2_message_valid && dc2_message_cache_valid
    && ( ( allocate_cache || update_cache ) && !deallocate_cache )
    && !is_replacement
    && !dc2_message_cache_hit;

assign dc3_replacement_enqueue = dc2_message_valid && do_replacement;
```
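The enqueue decision and the queue itself can be modelled as below. This is a sketch: a Python `deque` stands in for the hardware replacement queue (FIFO order is an assumption), and `maybe_enqueue`, the victim label and the example inputs are hypothetical. Stage 1 would later pop the oldest victim with `popleft()` when a replacement request can be issued.

```python
from collections import deque
from typing import Deque


def do_replacement(dc2_message_valid: bool, dc2_message_cache_valid: bool,
                   allocate_cache: bool, update_cache: bool,
                   deallocate_cache: bool, is_replacement: bool,
                   dc2_message_cache_hit: bool) -> bool:
    """Same equation as do_replacement in the RTL above."""
    return (dc2_message_valid and dc2_message_cache_valid
            and (allocate_cache or update_cache) and not deallocate_cache
            and not is_replacement
            and not dc2_message_cache_hit)


def maybe_enqueue(queue: Deque[str], victim_block: str,
                  dc2_message_valid: bool, replace: bool) -> bool:
    """Model of dc3_replacement_enqueue: push the evicted block when it is asserted."""
    enqueue = dc2_message_valid and replace
    if enqueue:
        queue.append(victim_block)
    return enqueue


queue: Deque[str] = deque()
# A miss that allocates into a way currently holding a valid block.
replace = do_replacement(True, True, True, False, False, False, False)
enqueued = maybe_enqueue(queue, "victim@set3", True, replace)
```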

Current State Selector

Before a coherence request is processed, the correct source of the cache block state has to be chosen. The state is fetched from one of the following:

- Cache memory (see Cache Update Logic);

- TSHR (see TSHR Update Logic);

- Replacement Queue (see Replacement Logic).

If the block is found in none of them, it must be in state N.
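A minimal Python sketch of the selector follows. It assumes each source reports a hit flag and a state, and that at most one source holds the block (a block moved into the TSHR is deallocated from the cache, and a block in the replacement queue has been evicted from it), so the priority order used here is an arbitrary choice rather than the documented one; the function name and parameters are hypothetical.

```python
def select_current_state(tshr_hit: bool, tshr_state: str,
                         cache_hit: bool, cache_state: str,
                         rq_hit: bool, rq_state: str) -> str:
    """Pick the block state from the source that holds the block."""
    if tshr_hit:         # transaction in progress: non-stable state
        return tshr_state
    if cache_hit:        # completed transaction: stable state
        return cache_state
    if rq_hit:           # evicted block waiting in the replacement queue
        return rq_state
    return "N"           # not cached in the directory: fetch from off-chip memory
```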

Message Generator

This module sends a forwarded request or a response message to the network interface whenever one is available. Messages are formatted according to the coherence protocol.

Note that this block also manages instruction cache misses. In this case, requests are forwarded directly to memory, bypassing the coherence logic.