Difference between revisions of "Programming Model"

(→OpenCL Example) |

(→OpenCL Example) |

||

| Line 80: | Line 80: | ||

} | } | ||

} | } | ||

| + | } | ||

| + | |||

| + | |||

| + | #include <CL/cl.h> | ||

| + | #include <iostream> | ||

| + | #include <unistd.h> | ||

| + | |||

| + | #define THREAD_NUM 4 | ||

| + | #define NAME_SIZE 30 | ||

| + | |||

| + | #define COLS 16 | ||

| + | #define ROWS 16 | ||

| + | |||

| + | bool callback_done = false; | ||

| + | cl_int matrix_1[ROWS][COLS]; | ||

| + | cl_int matrix_2[ROWS][COLS]; | ||

| + | cl_int matrix_out[ROWS][COLS] = {{0}}; | ||

| + | cl_int matrix_expected[ROWS][COLS]; | ||

| + | |||

| + | /* function prototypes */ | ||

| + | void init_matrix(int *matrix, int cols, int rows); | ||

| + | void print_matrix(int32_t * matr, int row, int col); | ||

| + | |||

| + | /* kernel function, reported here to allow checking the results | ||

| + | * obtained in the offloaded version | ||

| + | */ | ||

| + | void kernel_function(int *A, int *B, int *C, int rows, int cols) { | ||

| + | for (int r=0;r<rows;r++) { | ||

| + | for (int c=0;c<cols;c++) { | ||

| + | int v = 0; | ||

| + | for (int p=0;p<rows;p++) { | ||

| + | v = v + A[r * cols + p] * B[p * cols + c]; | ||

| + | } | ||

| + | C[r * cols + c] = v; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | void read_callback_function(cl_event event, cl_int event_command_exec_status, void* user_data) { | ||

| + | |||

| + | std::cout << "##################" << std::endl; | ||

| + | std::cout << "READ Callback! " << std::endl; | ||

| + | std::cout << "##################" << std::endl; | ||

| + | |||

| + | std::cout << "Matrix Expected: " << std::endl; | ||

| + | print_matrix(&matrix_expected[0][0], ROWS, COLS); | ||

| + | |||

| + | std::cout << "Matrix Obtained: " << std::endl; | ||

| + | print_matrix(&matrix_out[0][0], ROWS, COLS); | ||

| + | |||

| + | |||

| + | for(int i=0; i<ROWS; i++){ | ||

| + | for(int j=0; j<COLS; j++){ | ||

| + | if(matrix_out[i][j]!=matrix_expected[i][j]) { | ||

| + | std::cout << "Computation Error" << std::endl; | ||

| + | callback_done = true; | ||

| + | return; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

| + | |||

| + | std::cout << "Matching Result" << std::endl; | ||

| + | |||

| + | callback_done = true; | ||

| + | } | ||

| + | |||

| + | int main(int argc, char** argv) { | ||

| + | |||

| + | cl_uint num_entries = 1; | ||

| + | cl_platform_id plat_id[2]; | ||

| + | cl_uint num_platform = 0; | ||

| + | char platform_name[NAME_SIZE]; | ||

| + | size_t param_size_ret; | ||

| + | cl_device_id dev_id[2]; | ||

| + | cl_uint num_devices = 0; | ||

| + | char device_name[NAME_SIZE]; | ||

| + | //Context Vars | ||

| + | cl_context_properties props[3] = {CL_CONTEXT_PLATFORM, 0, 0}; | ||

| + | props[1] = (cl_context_properties) plat_id[0]; | ||

| + | cl_int errcode_ret = 0; | ||

| + | cl_uint num_context_devices = 0; | ||

| + | size_t returned_size = 0; | ||

| + | void* host_ptr = NULL; | ||

| + | |||

| + | cl_event event_write_buffer_matrix_1, event_write_buffer_matrix_2, event_read, event_mark, enqueue_kernel_event; | ||

| + | |||

| + | cl_context ctx; | ||

| + | cl_command_queue cq; | ||

| + | cl_mem buffer_matrix_1, buffer_matrix_2, buffer_out; | ||

| + | cl_program matrix_program; | ||

| + | cl_kernel matrix_kernel; | ||

| + | |||

| + | //For enqueue_native | ||

| + | char* kernel_path = "/home/fparricelli/mm_opencl/kernel/obj/matrix_multiplication_dev_mem_mango.hex"; | ||

| + | |||

| + | char* kernel_name = "opencl_mm_mem_mango.hex"; | ||

| + | char* kernel_names[2] = {kernel_path, kernel_name}; | ||

| + | |||

| + | char* binaries[1]; | ||

| + | binaries[0] = kernel_path; | ||

| + | cl_int binary_status[1]; | ||

| + | binary_status[0] = CL_PROGRAM_BINARY_TYPE_EXECUTABLE; | ||

| + | size_t lengths[1]; | ||

| + | lengths[0] = sizeof(binaries[0]); | ||

| + | |||

| + | unsigned work_dim = 1; | ||

| + | size_t global_offset[work_dim]; | ||

| + | size_t global_size[work_dim]; | ||

| + | size_t local_size[work_dim]; | ||

| + | global_offset[0] = 0; | ||

| + | global_size[0] = 4; | ||

| + | local_size[0] = 4; | ||

| + | |||

| + | |||

| + | size_t* args[11]; | ||

| + | int number_of_threads = THREAD_NUM; | ||

| + | unsigned rows = ROWS; | ||

| + | unsigned cols = COLS; | ||

| + | cl_mem mem_list[3]; | ||

| + | cl_ulong start, end; | ||

| + | cl_int status; | ||

| + | size_t start_size, status_size; | ||

| + | size_t end_size; | ||

| + | init_matrix(&matrix_1[0][0], ROWS, COLS); | ||

| + | init_matrix(&matrix_2[0][0], ROWS, COLS); | ||

| + | |||

| + | std::cout << "Matrix 1: " << std::endl; | ||

| + | print_matrix(&matrix_1[0][0], ROWS, COLS); | ||

| + | std::cout << "Matrix 2: " << std::endl; | ||

| + | print_matrix(&matrix_2[0][0], ROWS, COLS); | ||

| + | |||

| + | kernel_function(&matrix_1[0][0], &matrix_2[0][0], &matrix_expected[0][0], COLS, ROWS); | ||

| + | |||

| + | if(clGetPlatformIDs(num_entries, plat_id, &num_platform) != 0) goto platform_error; | ||

| + | |||

| + | if(clGetPlatformInfo(plat_id[0], CL_PLATFORM_NAME, NAME_SIZE, platform_name, ¶m_size_ret) != 0) goto platform_error; | ||

| + | |||

| + | std::cout << "[PLATFORM] - Name: " << platform_name << std::endl; | ||

| + | |||

| + | if(clGetDeviceIDs(plat_id[0], CL_DEVICE_TYPE_ACCELERATOR, num_entries, dev_id, &num_devices)) goto device_error; | ||

| + | |||

| + | std::cout << "[DEVICE] - Number of Devices Retrived: " << num_devices << std::endl; | ||

| + | |||

| + | for( int i = 0; i < num_devices; i++ ) | ||

| + | std::cout << "[DEVICE] - ID: " << (cl_uint*) dev_id[i] << std::endl; | ||

| + | |||

| + | if(clGetDeviceInfo(dev_id[0], CL_DEVICE_NAME, NAME_SIZE, device_name, ¶m_size_ret) != 0) goto device_error; | ||

| + | |||

| + | std::cout << "[DEVICE] - Name: " << device_name << std::endl; | ||

| + | |||

| + | ctx = clCreateContext(props, 1, &dev_id[0], NULL, NULL, &errcode_ret); | ||

| + | |||

| + | if(errcode_ret != 0) goto context_error; | ||

| + | |||

| + | std::cout << "[CONTEXT] - Created!" << std::endl; | ||

| + | |||

| + | cq = clCreateCommandQueue(ctx, dev_id[0], CL_QUEUE_PROFILING_ENABLE, &errcode_ret); | ||

| + | |||

| + | if(errcode_ret != 0) goto commandqueue_error; | ||

| + | |||

| + | std::cout << "[COMMAND_QUEUE] - Created!" << std::endl; | ||

| + | |||

| + | buffer_matrix_1 = clCreateBuffer(ctx, CL_MEM_READ_WRITE, ROWS*COLS*sizeof(cl_int), NULL, &errcode_ret); | ||

| + | |||

| + | if(errcode_ret != 0) goto buffer_error; | ||

| + | |||

| + | std::cout << "[BUFFER-MATRIX-1] - Created! " << errcode_ret << std::endl; | ||

| + | |||

| + | buffer_matrix_2 = clCreateBuffer(ctx, CL_MEM_READ_WRITE, ROWS*COLS*sizeof(cl_int), NULL, &errcode_ret); | ||

| + | |||

| + | if(errcode_ret != 0) goto buffer_error; | ||

| + | |||

| + | std::cout << "[BUFFER-MATRIX-2] - Created! " << errcode_ret << std::endl; | ||

| + | |||

| + | buffer_out = clCreateBuffer(ctx, CL_MEM_READ_WRITE, ROWS*COLS*sizeof(cl_int), NULL, &errcode_ret); | ||

| + | |||

| + | if(errcode_ret != 0) goto buffer_error; | ||

| + | |||

| + | std::cout << "[BUFFER-OUT] - Created! " << errcode_ret << std::endl; | ||

| + | |||

| + | |||

| + | //Writing buffers | ||

| + | if(clEnqueueWriteBuffer(cq, buffer_matrix_1, CL_TRUE, 0, ROWS*COLS*sizeof(cl_int), &matrix_1[0][0], 0 , NULL, &event_write_buffer_matrix_1) !=0) goto event_error; | ||

| + | if(clEnqueueWriteBuffer(cq, buffer_matrix_2, CL_TRUE, 0, ROWS*COLS*sizeof(cl_int), &matrix_2[0][0], 0 , NULL, &event_write_buffer_matrix_2) !=0) goto event_error; | ||

| + | matrix_program = clCreateProgramWithBinary(ctx, 1, dev_id, (const size_t*) lengths, (const unsigned char**) binaries, binary_status, &errcode_ret); | ||

| + | printf("Error: %d \n", errcode_ret); | ||

| + | if(errcode_ret != 0) goto event_error; | ||

| + | |||

| + | matrix_kernel = clCreateKernel(matrix_program, "kernel_function", &errcode_ret); | ||

| + | |||

| + | printf("Error: %d \n", errcode_ret); | ||

| + | if(errcode_ret != 0) goto event_error; | ||

| + | |||

| + | clSetKernelArg(matrix_kernel, 0, sizeof(cl_mem*), (const void*) &buffer_matrix_1); | ||

| + | |||

| + | clSetKernelArg(matrix_kernel, 1, sizeof(cl_mem*), (const void*) &buffer_matrix_2); | ||

| + | clSetKernelArg(matrix_kernel, 2, sizeof(cl_mem*), (const void*) &buffer_out); | ||

| + | clSetKernelArg(matrix_kernel, 3, sizeof(uint32_t), (const void*) &rows); | ||

| + | clSetKernelArg(matrix_kernel, 4, sizeof(uint32_t), (const void*) &cols); | ||

| + | |||

| + | // args[0] = (size_t* ) kernel_path; | ||

| + | // args[1] = (size_t* ) kernel_name; | ||

| + | // | ||

| + | // args[5] = (size_t* ) number_of_threads; | ||

| + | // args[6] = (size_t* ) buffer_matrix_1; | ||

| + | // args[7] = (size_t *) buffer_matrix_2; | ||

| + | // args[8] = (size_t *) buffer_out; | ||

| + | // args[9] = (size_t *) rows; | ||

| + | // args[10] = (size_t*) cols; | ||

| + | // | ||

| + | // mem_list[0] = buffer_matrix_1; | ||

| + | // mem_list[1] = buffer_matrix_2; | ||

| + | // mem_list[2] = buffer_out; | ||

| + | // errcode_ret = clEnqueueNativeKernel(cq, | ||

| + | // NULL, | ||

| + | // (void*) args, | ||

| + | // sizeof(args), | ||

| + | // 3, | ||

| + | // (const cl_mem*) mem_list, | ||

| + | // (const void**) args, | ||

| + | // 0, | ||

| + | // NULL, | ||

| + | // &enqueue_kernel_event); | ||

| + | // | ||

| + | errcode_ret = clEnqueueNDRangeKernel(cq, | ||

| + | matrix_kernel, | ||

| + | work_dim, | ||

| + | global_offset, | ||

| + | global_size, | ||

| + | local_size, | ||

| + | 0, | ||

| + | NULL, | ||

| + | &enqueue_kernel_event); | ||

| + | if(errcode_ret != 0) goto event_error; | ||

| + | |||

| + | // clWaitForEvents(1, &enqueue_kernel_event); | ||

| + | |||

| + | if(clEnqueueMarker(cq, &event_mark) != 0) goto event_error; | ||

| + | |||

| + | // printf("event_mark 0x%x\n", event_mark); | ||

| + | |||

| + | if(clEnqueueReadBuffer(cq, buffer_out, CL_FALSE, 0, ROWS*COLS*sizeof(cl_int), &matrix_out[0][0], 0 , NULL, &event_read) != 0) goto event_error; | ||

| + | |||

| + | |||

| + | if(clSetEventCallback(event_read, CL_COMPLETE, &read_callback_function, &matrix_out[0][0]) != 0) goto event_error; | ||

| + | |||

| + | clFinish(cq); | ||

| + | |||

| + | while(!callback_done); | ||

| + | |||

| + | /* Printing Timing */ | ||

| + | |||

| + | if(clGetEventInfo(enqueue_kernel_event, CL_EVENT_COMMAND_EXECUTION_STATUS, sizeof(cl_int), (void*) &status, &status_size) != 0) goto exit_error; | ||

| + | if(clGetEventProfilingInfo(enqueue_kernel_event, CL_PROFILING_COMMAND_END, sizeof(cl_ulong), (void*) &end, &end_size) != 0) goto exit_error; | ||

| + | if(clGetEventProfilingInfo(enqueue_kernel_event, CL_PROFILING_COMMAND_START, sizeof(cl_ulong), (void*) &start, &start_size) != 0) goto exit_error; | ||

| + | |||

| + | std::cout << "Kernel started from: " << start << " ns" << std::endl; | ||

| + | std::cout << "Kernel ends from: " << end << " ns" << std::endl; | ||

| + | std::cout << "Kernel duration: " << start - end << " ns" << std::endl; | ||

| + | std::cout << "Kernel Event Status: " << status << " ns" << std::endl; | ||

| + | |||

| + | return EXIT_SUCCESS; | ||

| + | |||

| + | platform_error: | ||

| + | std::cout << "[ERROR] - Platform Error" << std::endl; | ||

| + | goto exit_error; | ||

| + | |||

| + | device_error: | ||

| + | std::cout << "[ERROR] - Device Error" << std::endl; | ||

| + | goto exit_error; | ||

| + | |||

| + | context_error: | ||

| + | std::cout << "[ERROR] - Context Error" << std::endl; | ||

| + | goto exit_error; | ||

| + | |||

| + | commandqueue_error: | ||

| + | std::cout << "[ERROR] - CommandQueue Error" << std::endl; | ||

| + | goto exit_error; | ||

| + | |||

| + | buffer_error: | ||

| + | std::cout << "[ERROR] - Buffer Error" << std::endl; | ||

| + | goto exit_error; | ||

| + | |||

| + | event_error: | ||

| + | std::cout << "[ERROR] - Event Error" << errcode_ret << std::endl; | ||

| + | goto exit_error; | ||

| + | |||

| + | exit_error: | ||

| + | return EXIT_FAILURE; | ||

| + | } | ||

| + | |||

| + | void init_matrix(int *matrix, int rows, int cols) | ||

| + | { | ||

| + | for (int r=0;r<rows;r++) { | ||

| + | for (int c=0;c<cols;c++) { | ||

| + | matrix[r*cols+c] = rand() % 100; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

| + | void print_matrix(int32_t * matr, int row, int col) { | ||

| + | for(int i = 0; i < row; i++) { | ||

| + | for(int j = 0; j < col; j++){ | ||

| + | printf("%i\t", *(matr + (i*row+j))); | ||

| + | } | ||

| + | printf("\n"); | ||

| + | } | ||

| + | printf("\n"); | ||

} | } | ||

Revision as of 13:53, 3 June 2019

Contents

OpenCL support for Nu+

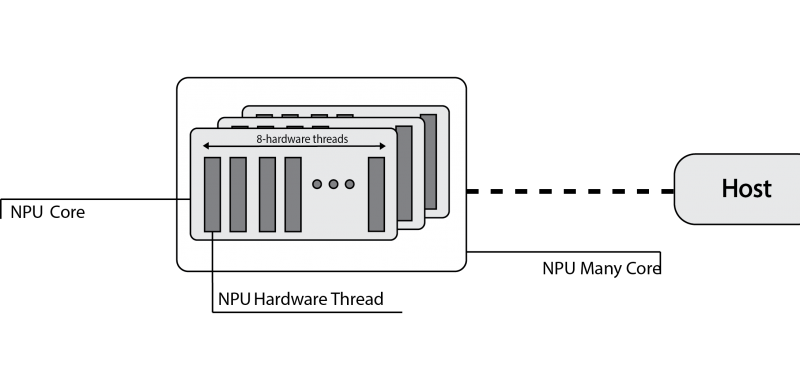

OpenCL defines a platform as a set of computing devices on which the host is connected to. Each device is further divided into several compute units (CUs), each of them defined as a collection of processing elements (PEs). Recall that the target platform is architecturally designed around a single core, structured in terms of a set of at most eight hardware threads. Each hardware threads competes with each other to have access to sixteen hardware lanes, to execute both scalar and vector operations on 32-bit wide integer or floating-point operands.

The computing device abstraction is physically mapped on the nu+ many-core architecture. The nu+ many-core can be configured in terms of the number of cores. Each nu+ core maps on the OpenCL Compute Unit. Internally, the nu+ core is composed of hardware threads, each of them represents the abstraction of the OpenCL processing element.

Execution Model Matching

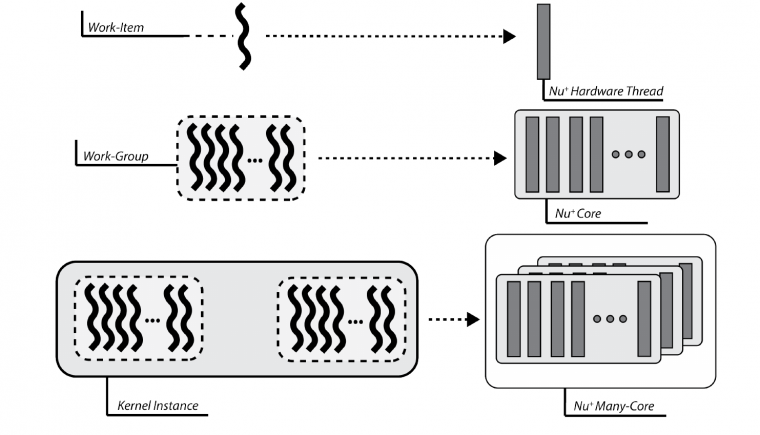

From the execution model point of view, OpenCL relies on an N-dimensional index space, where each point represents a kernel instance execution. Since the physical kernel instance execution is done by the hardware threads, the OpenCL work-item is mapped onto a nu+ single hardware-thread. Consequently, a work-group is defined as a set of hardware threads, and all work-items in a work-group executes on a single computing unit.

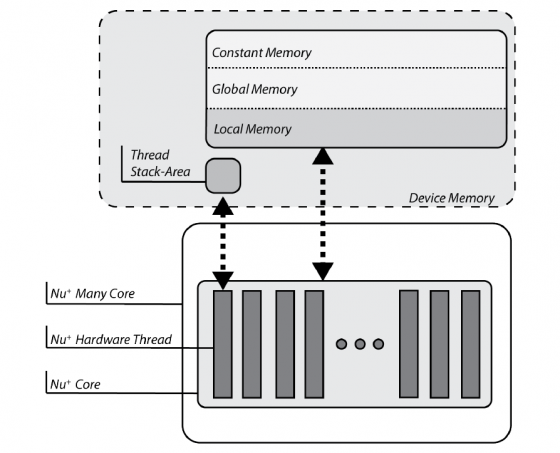

Memory Model Matching

OpenCL partitions the memory in four different spaces:

- the global and constant spaces: elements in those spaces are accessible by all work-items in all work-group.

- the local space: visible only to work-items within a work-group.

- the private space: visible to only a single work-item.

The target-platform is provided of a DDR memory, that is the device memory in OpenCL nomenclature. Consequently, variables are physically mapped on this memory. The compiler itself, by looking at the address-space qualifier, verifies the OpenCL constraints are satisfied.

Each nu+ core is also equipped with a Scratchpad Memory, an on-chip non-coherent memory portion exclusive to each core. This memory is compliant with the OpenCL local memory features

Finally, each hardware thread within the nu+ core has a private stack. This memory section is private to each hardware thread, that is the OpenCL work-item, and cannot be addressed by others. As a result, each stack acts as the OpenCL private memory.

Programming Model Matching

OpenCL supports two kinds of programming models, data- and task-parallel. A data-parallel model requires that each point of the OpenCL index space executes a kernel instance. Since each point represents a work-item and these are mapped on hardware threads, the data-parallel requirements are correctly satisfied. Please note that the implemented model is a relaxed version, without requiring a strictly one-to-one mapping on data.

A task-parallel programming model requires kernel instances are independently executed in any point of the index space. In this case, each work-item is not constrained to execute the same kernel instance of others. The compiler frontend defines a set of builtins that may be used for this purpose. Moreover, each nu + core is built of 16 hardware lanes, useful to realise a lock-step execution. Consequently, OpenCL support is realised to allow the usage of vector types. As a result, the vector execution is supported for the following data types:

- charn, ucharn, are respectively mapped on vec16i8 and vec16u8, where n=16. Other values of n are not supported.

- shortn, ushortn, are respectively mapped on vec16i16 and vec16i32, where n=16. Other values of n are not supported.

- intn, uintn, are respectively mapped on vec16i32 and vec16u32, where n=16. Other values of n are not supported.

- floatn, mapped on vec16f32, where n=16. Other values of n are not supported.

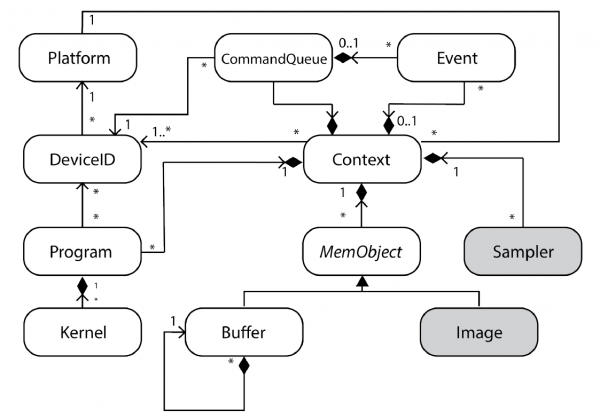

OpenCL Runtime Design

OpenCL APIs are a set of functions meant to coordinate and manage devices, those provide support for running applications and monitoring their execution. These APIs also provides a way to retrieve device-related information.

The following Figure depicts the UML Class diagram for the OpenCL Runtime as defined in the OpenCL specification. Grey-filled boxes represent not available features due to the absence of hardware support.

The custom OpenCL runtime relies on two main abstractions:

- Low-level abstractions, not entirely hardware dependent, provide device-host communication support.

- High-level abstractions, according to OpenCL APIs, administrate the life cycle of a kernel running onto the device.

OpenCL Example

#include <opencl_stdlib.h>

#define WORK_DIM 4

__kernel void kernel_function(__global int16 *A, __global int16 *B, __global int16 *C, int rows, int cols)

{

__private uint32_t threadId = get_local_id(0);

uint32_t nT = WORK_DIM; // number of threads

uint32_t nL = 16; // number of lanes

uint32_t N = rows;

uint32_t nC = N / nL;

uint32_t ndivnT = N / nT;

uint32_t tIdndivnT = threadId * ndivnT;

uint32_t tIdndivnTnC = tIdndivnT * nC;

for (uint32_t i = 0; i < ndivnT * nC; i++)

{

uint32_t col = (tIdndivnT + i) % nC;

C[tIdndivnTnC + i] = 0;

for (uint32_t j = 0; j < nC; j++)

{

for (uint32_t k = 0; k < nL; k++)

{

C[tIdndivnTnC + i] += A[tIdndivnTnC + i - col + j][k] * B[(nC * k) + (j * N) + col];

}

}

}

}

- include <CL/cl.h>

- include <iostream>

- include <unistd.h>

- define THREAD_NUM 4

- define NAME_SIZE 30

- define COLS 16

- define ROWS 16

bool callback_done = false; cl_int matrix_1[ROWS][COLS]; cl_int matrix_2[ROWS][COLS]; cl_int matrix_out[ROWS][COLS] = Template:0; cl_int matrix_expected[ROWS][COLS];

/* function prototypes */ void init_matrix(int *matrix, int cols, int rows); void print_matrix(int32_t * matr, int row, int col);

/* kernel function, reported here to allow checking the results

* obtained in the offloaded version */

void kernel_function(int *A, int *B, int *C, int rows, int cols) {

for (int r=0;r<rows;r++) {

for (int c=0;c<cols;c++) {

int v = 0;

for (int p=0;p<rows;p++) {

v = v + A[r * cols + p] * B[p * cols + c];

}

C[r * cols + c] = v;

}

}

} void read_callback_function(cl_event event, cl_int event_command_exec_status, void* user_data) {

std::cout << "##################" << std::endl; std::cout << "READ Callback! " << std::endl; std::cout << "##################" << std::endl;

std::cout << "Matrix Expected: " << std::endl; print_matrix(&matrix_expected[0][0], ROWS, COLS); std::cout << "Matrix Obtained: " << std::endl; print_matrix(&matrix_out[0][0], ROWS, COLS);

for(int i=0; i<ROWS; i++){

for(int j=0; j<COLS; j++){

if(matrix_out[i][j]!=matrix_expected[i][j]) {

std::cout << "Computation Error" << std::endl;

callback_done = true; return;

}

}

}

std::cout << "Matching Result" << std::endl;

callback_done = true; }

int main(int argc, char** argv) {

cl_uint num_entries = 1; cl_platform_id plat_id[2]; cl_uint num_platform = 0; char platform_name[NAME_SIZE]; size_t param_size_ret; cl_device_id dev_id[2]; cl_uint num_devices = 0; char device_name[NAME_SIZE]; //Context Vars cl_context_properties props[3] = {CL_CONTEXT_PLATFORM, 0, 0}; props[1] = (cl_context_properties) plat_id[0]; cl_int errcode_ret = 0; cl_uint num_context_devices = 0; size_t returned_size = 0; void* host_ptr = NULL;

cl_event event_write_buffer_matrix_1, event_write_buffer_matrix_2, event_read, event_mark, enqueue_kernel_event;

cl_context ctx; cl_command_queue cq; cl_mem buffer_matrix_1, buffer_matrix_2, buffer_out; cl_program matrix_program; cl_kernel matrix_kernel;

//For enqueue_native char* kernel_path = "/home/fparricelli/mm_opencl/kernel/obj/matrix_multiplication_dev_mem_mango.hex";

char* kernel_name = "opencl_mm_mem_mango.hex"; char* kernel_names[2] = {kernel_path, kernel_name};

char* binaries[1]; binaries[0] = kernel_path; cl_int binary_status[1]; binary_status[0] = CL_PROGRAM_BINARY_TYPE_EXECUTABLE; size_t lengths[1]; lengths[0] = sizeof(binaries[0]);

unsigned work_dim = 1;

size_t global_offset[work_dim];

size_t global_size[work_dim];

size_t local_size[work_dim];

global_offset[0] = 0;

global_size[0] = 4;

local_size[0] = 4;

size_t* args[11];

int number_of_threads = THREAD_NUM;

unsigned rows = ROWS;

unsigned cols = COLS;

cl_mem mem_list[3];

cl_ulong start, end;

cl_int status;

size_t start_size, status_size;

size_t end_size;

init_matrix(&matrix_1[0][0], ROWS, COLS);

init_matrix(&matrix_2[0][0], ROWS, COLS);

std::cout << "Matrix 1: " << std::endl; print_matrix(&matrix_1[0][0], ROWS, COLS); std::cout << "Matrix 2: " << std::endl; print_matrix(&matrix_2[0][0], ROWS, COLS);

kernel_function(&matrix_1[0][0], &matrix_2[0][0], &matrix_expected[0][0], COLS, ROWS);

if(clGetPlatformIDs(num_entries, plat_id, &num_platform) != 0) goto platform_error;

if(clGetPlatformInfo(plat_id[0], CL_PLATFORM_NAME, NAME_SIZE, platform_name, ¶m_size_ret) != 0) goto platform_error;

std::cout << "[PLATFORM] - Name: " << platform_name << std::endl;

if(clGetDeviceIDs(plat_id[0], CL_DEVICE_TYPE_ACCELERATOR, num_entries, dev_id, &num_devices)) goto device_error;

std::cout << "[DEVICE] - Number of Devices Retrived: " << num_devices << std::endl;

for( int i = 0; i < num_devices; i++ ) std::cout << "[DEVICE] - ID: " << (cl_uint*) dev_id[i] << std::endl;

if(clGetDeviceInfo(dev_id[0], CL_DEVICE_NAME, NAME_SIZE, device_name, ¶m_size_ret) != 0) goto device_error;

std::cout << "[DEVICE] - Name: " << device_name << std::endl;

ctx = clCreateContext(props, 1, &dev_id[0], NULL, NULL, &errcode_ret);

if(errcode_ret != 0) goto context_error;

std::cout << "[CONTEXT] - Created!" << std::endl;

cq = clCreateCommandQueue(ctx, dev_id[0], CL_QUEUE_PROFILING_ENABLE, &errcode_ret);

if(errcode_ret != 0) goto commandqueue_error;

std::cout << "[COMMAND_QUEUE] - Created!" << std::endl;

buffer_matrix_1 = clCreateBuffer(ctx, CL_MEM_READ_WRITE, ROWS*COLS*sizeof(cl_int), NULL, &errcode_ret);

if(errcode_ret != 0) goto buffer_error;

std::cout << "[BUFFER-MATRIX-1] - Created! " << errcode_ret << std::endl;

buffer_matrix_2 = clCreateBuffer(ctx, CL_MEM_READ_WRITE, ROWS*COLS*sizeof(cl_int), NULL, &errcode_ret);

if(errcode_ret != 0) goto buffer_error;

std::cout << "[BUFFER-MATRIX-2] - Created! " << errcode_ret << std::endl;

buffer_out = clCreateBuffer(ctx, CL_MEM_READ_WRITE, ROWS*COLS*sizeof(cl_int), NULL, &errcode_ret);

if(errcode_ret != 0) goto buffer_error;

std::cout << "[BUFFER-OUT] - Created! " << errcode_ret << std::endl;

//Writing buffers

if(clEnqueueWriteBuffer(cq, buffer_matrix_1, CL_TRUE, 0, ROWS*COLS*sizeof(cl_int), &matrix_1[0][0], 0 , NULL, &event_write_buffer_matrix_1) !=0) goto event_error;

if(clEnqueueWriteBuffer(cq, buffer_matrix_2, CL_TRUE, 0, ROWS*COLS*sizeof(cl_int), &matrix_2[0][0], 0 , NULL, &event_write_buffer_matrix_2) !=0) goto event_error;

matrix_program = clCreateProgramWithBinary(ctx, 1, dev_id, (const size_t*) lengths, (const unsigned char**) binaries, binary_status, &errcode_ret);

printf("Error: %d \n", errcode_ret);

if(errcode_ret != 0) goto event_error;

matrix_kernel = clCreateKernel(matrix_program, "kernel_function", &errcode_ret);

printf("Error: %d \n", errcode_ret); if(errcode_ret != 0) goto event_error;

clSetKernelArg(matrix_kernel, 0, sizeof(cl_mem*), (const void*) &buffer_matrix_1);

clSetKernelArg(matrix_kernel, 1, sizeof(cl_mem*), (const void*) &buffer_matrix_2); clSetKernelArg(matrix_kernel, 2, sizeof(cl_mem*), (const void*) &buffer_out); clSetKernelArg(matrix_kernel, 3, sizeof(uint32_t), (const void*) &rows); clSetKernelArg(matrix_kernel, 4, sizeof(uint32_t), (const void*) &cols);

// args[0] = (size_t* ) kernel_path; // args[1] = (size_t* ) kernel_name; // // args[5] = (size_t* ) number_of_threads; // args[6] = (size_t* ) buffer_matrix_1; // args[7] = (size_t *) buffer_matrix_2; // args[8] = (size_t *) buffer_out; // args[9] = (size_t *) rows; // args[10] = (size_t*) cols; // // mem_list[0] = buffer_matrix_1; // mem_list[1] = buffer_matrix_2; // mem_list[2] = buffer_out; // errcode_ret = clEnqueueNativeKernel(cq, // NULL, // (void*) args, // sizeof(args), // 3, // (const cl_mem*) mem_list, // (const void**) args, // 0, // NULL, // &enqueue_kernel_event); // errcode_ret = clEnqueueNDRangeKernel(cq, matrix_kernel, work_dim, global_offset, global_size, local_size, 0, NULL, &enqueue_kernel_event); if(errcode_ret != 0) goto event_error;

// clWaitForEvents(1, &enqueue_kernel_event);

if(clEnqueueMarker(cq, &event_mark) != 0) goto event_error;

// printf("event_mark 0x%x\n", event_mark);

if(clEnqueueReadBuffer(cq, buffer_out, CL_FALSE, 0, ROWS*COLS*sizeof(cl_int), &matrix_out[0][0], 0 , NULL, &event_read) != 0) goto event_error;

if(clSetEventCallback(event_read, CL_COMPLETE, &read_callback_function, &matrix_out[0][0]) != 0) goto event_error;

clFinish(cq);

while(!callback_done);

/* Printing Timing */

if(clGetEventInfo(enqueue_kernel_event, CL_EVENT_COMMAND_EXECUTION_STATUS, sizeof(cl_int), (void*) &status, &status_size) != 0) goto exit_error; if(clGetEventProfilingInfo(enqueue_kernel_event, CL_PROFILING_COMMAND_END, sizeof(cl_ulong), (void*) &end, &end_size) != 0) goto exit_error; if(clGetEventProfilingInfo(enqueue_kernel_event, CL_PROFILING_COMMAND_START, sizeof(cl_ulong), (void*) &start, &start_size) != 0) goto exit_error;

std::cout << "Kernel started from: " << start << " ns" << std::endl;

std::cout << "Kernel ends from: " << end << " ns" << std::endl;

std::cout << "Kernel duration: " << start - end << " ns" << std::endl;

std::cout << "Kernel Event Status: " << status << " ns" << std::endl;

return EXIT_SUCCESS;

platform_error:

std::cout << "[ERROR] - Platform Error" << std::endl;

goto exit_error;

device_error:

std::cout << "[ERROR] - Device Error" << std::endl;

goto exit_error;

context_error:

std::cout << "[ERROR] - Context Error" << std::endl;

goto exit_error;

commandqueue_error:

std::cout << "[ERROR] - CommandQueue Error" << std::endl;

goto exit_error;

buffer_error:

std::cout << "[ERROR] - Buffer Error" << std::endl;

goto exit_error;

event_error:

std::cout << "[ERROR] - Event Error" << errcode_ret << std::endl;

goto exit_error;

exit_error:

return EXIT_FAILURE;

}

void init_matrix(int *matrix, int rows, int cols)

{

for (int r=0;r<rows;r++) {

for (int c=0;c<cols;c++) {

matrix[r*cols+c] = rand() % 100;

}

}

}

void print_matrix(int32_t * matr, int row, int col) {

for(int i = 0; i < row; i++) {

for(int j = 0; j < col; j++){

printf("%i\t", *(matr + (i*row+j)));

}

printf("\n");

}

printf("\n");

}