MC System


Revision as of 15:35, 17 May 2019

The many-core version of the project provides different types of tiles, although all of them share the same network- and coherence-related components. On the networking side, a generic tile of the system features a Network Interface module and a hardware Router, both described in the Network architecture section.

The nu+ many-core features a shared-memory system: each nu+ core has a private L1 data cache, while the L2 cache is distributed across the instantiated tiles, together with a Directory Controller module that handles and stores coherence information for cached memory lines. This component is further described in the Coherence architecture section.


NUPLUS tile

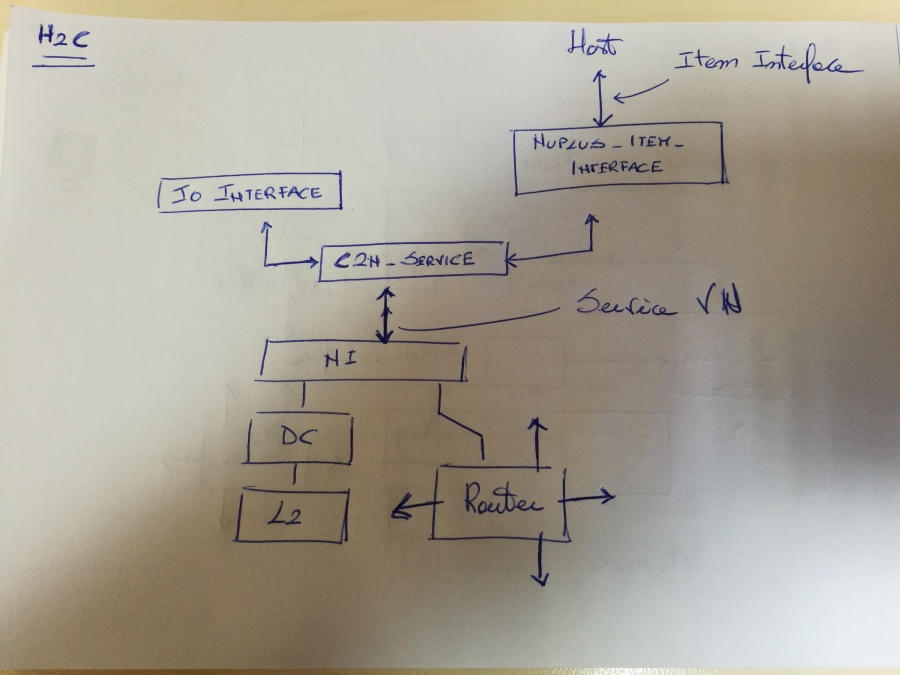

The NUPLUS tile mainly equips a nu+ GPGPU core, described in Nu+ GPGPU core section, and components used to interface the core with both networking and coherence systems. The following figure depicts a block view of the NUPLUS tile:

The tile implements a Cache controller, called l1d_cache, which interfaces the core with the memory system. The Cache controller uses the three Network Interface virtual channels dedicated to the coherence system (IDs 0 to 2), giving the core an abstracted view of coherence. For further details on this module and on the coherence system, refer to the Coherence architecture section.
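As a quick reference, the virtual-channel split of the NUPLUS tile can be summarized by a small lookup: IDs 0 to 2 carry the coherence traffic of l1d_cache, and the last channel, ID 3, is the Service Virtual Channel used for host, synchronization and IO traffic. The following is only a behavioral sketch in Python; the names `COHERENCE_VCS`, `SERVICE_VC` and `vc_owner`, and the returned labels, are hypothetical and do not correspond to RTL signals.

```python
# Behavioral sketch of the NUPLUS tile virtual-channel assignment.
# All identifiers and returned labels are hypothetical, not RTL names.
COHERENCE_VCS = range(0, 3)  # IDs 0, 1, 2: coherence traffic handled by l1d_cache
SERVICE_VC = 3               # ID 3: Service Virtual Channel (host, sync, IO)


def vc_owner(vc_id: int) -> str:
    """Return which part of the tile uses a given Network Interface virtual channel."""
    if vc_id in COHERENCE_VCS:
        return "l1d_cache"
    if vc_id == SERVICE_VC:
        return "service"
    raise ValueError(f"no virtual channel with ID {vc_id}")
```

For instance, `vc_owner(1)` returns `"l1d_cache"`, while `vc_owner(3)` returns `"service"`.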

Host communication, the synchronization system and IO requests rely on the last virtual channel (ID 3), named the Service Virtual Channel. The tile_nuplus module implements an arbiter that dispatches each incoming message from the service channel to the right module, based on its message_type field. For example, a message from the host is marked as HOST and is forwarded to the boot manager module in the tile; the other message types are handled in the same way:

```systemverilog
always @( ni_n2c_mes_service_valid, ni_n2c_mes_service, n2c_sync_message,
          bm_c2n_mes_service_consumed, sc_account_consumed,
          n2c_mes_service_consumed, io_intf_message_consumed ) begin
    ...
    if ( ni_n2c_mes_service_valid ) begin
        if ( ni_n2c_mes_service.message_type == HOST ) begin
            // HOST messages are forwarded to the boot manager
            bm_n2c_mes_service.data  = ni_n2c_mes_service.data;
            bm_n2c_mes_service_valid = ni_n2c_mes_service_valid;
            c2n_mes_service_consumed = bm_c2n_mes_service_consumed;
        end else if ( ni_n2c_mes_service.message_type == SYNC ) begin
            if ( n2c_sync_message.sync_type == ACCOUNT ) begin
                // ACCOUNT messages are forwarded to the Synchronization core
                ni_account_mess          = n2c_sync_message.sync_mess.account_mess;
                ni_account_mess_valid    = ni_n2c_mes_service_valid;
                c2n_mes_service_consumed = sc_account_consumed;
            end else if ( n2c_sync_message.sync_type == RELEASE ) begin
                // RELEASE messages are forwarded to the core service interface
                n2c_release_message      = n2c_sync_message.sync_mess.release_mess;
                n2c_release_valid        = ni_n2c_mes_service_valid;
                c2n_mes_service_consumed = n2c_mes_service_consumed;
            end
    ...
```

For a SYNC message, the arbiter above checks whether it is of ACCOUNT or RELEASE type. ACCOUNT messages are dispatched to the Synchronization core; RELEASE messages are forwarded to the nu+ core through its service interface, which is directly connected to the Barrier core module instantiated in the core.

The possible service message types are defined in the nuplus_message_service_define.sv header file:

```systemverilog
typedef enum logic [`MESSAGE_TYPE_LENGTH - 1 : 0] {
    HOST  = 0,
    SYNC  = 1,
    IO_OP = 2
} service_message_type_t;
```
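The routing performed by the tile_nuplus arbiter can be mirrored by a small software model, which may help when writing testbench checks. This is a behavioral sketch in Python, not the RTL: the `HOST`, `SYNC` and `IO_OP` values follow `service_message_type_t` above, while the numeric encoding of `ACCOUNT`/`RELEASE` and the destination labels are hypothetical. The IO_OP branch is elided in the arbiter excerpt, so routing it to the IO interface is an assumption based on the `io_intf_message_consumed` signal in the sensitivity list.

```python
from enum import IntEnum
from typing import Optional


class ServiceMessageType(IntEnum):
    # Mirrors service_message_type_t from nuplus_message_service_define.sv
    HOST = 0
    SYNC = 1
    IO_OP = 2


class SyncType(IntEnum):
    # Hypothetical encoding: the excerpt only names ACCOUNT and RELEASE
    ACCOUNT = 0
    RELEASE = 1


def dispatch(message_type: int, sync_type: Optional[int] = None) -> str:
    """Return the (hypothetical) destination of a Service Virtual Channel message."""
    if message_type == ServiceMessageType.HOST:
        return "boot_manager"
    if message_type == ServiceMessageType.SYNC:
        if sync_type == SyncType.ACCOUNT:
            return "synchronization_core"
        if sync_type == SyncType.RELEASE:
            # Core service interface, wired to the Barrier core inside nu+
            return "core_service_interface"
        raise ValueError("SYNC message without a valid sync_type")
    if message_type == ServiceMessageType.IO_OP:
        # Assumption: the elided arbiter branch targets the IO interface
        return "io_interface"
    raise ValueError(f"unknown service message type {message_type}")
```

For example, `dispatch(ServiceMessageType.SYNC, SyncType.RELEASE)` yields `"core_service_interface"`, matching the RELEASE branch of the arbiter.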

Since the Synchronization core is described in detail in the Synchronization architecture section, the rest of this section focuses on the other modules that rely on the Service Virtual Channel.

C2N Service Scheduler

This module buffers and serializes the requests of modules that need to send a message over the Service Virtual Channel. Since this channel has a single entry point, the c2n_service_scheduler provides a dedicated port to each requesting module and forwards their requests to the Service Virtual Channel. When several modules issue requests at the same time, an internal round-robin arbiter selects one request at a time, thus serializing the conflicting requests.
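The serialization policy can be illustrated with a behavioral model: each port has its own queue, and the grant pointer rotates so that the port after the last granted one gets the highest priority. This is a minimal Python sketch, assuming unbounded per-port queues and one grant per call (one per cycle in hardware); the class and method names are hypothetical and do not reflect the actual c2n_service_scheduler ports.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


class C2NServiceSchedulerModel:
    """Hypothetical behavioral model: one FIFO per port, round-robin grant."""

    def __init__(self, num_ports: int) -> None:
        self.queues: List[Deque[str]] = [deque() for _ in range(num_ports)]
        # Start from the last port so that port 0 wins the first arbitration
        self.last_grant = num_ports - 1

    def push(self, port: int, message: str) -> None:
        """Store a request coming from the module attached to `port`."""
        self.queues[port].append(message)

    def grant(self) -> Optional[Tuple[int, str]]:
        """Select one pending request, scanning ports after the last winner."""
        n = len(self.queues)
        for offset in range(1, n + 1):
            port = (self.last_grant + offset) % n
            if self.queues[port]:
                self.last_grant = port
                return port, self.queues[port].popleft()
        return None  # no pending requests
```

With three ports, pushing `a0` and `a1` on port 0, `c0` on port 2 and `b0` on port 1 yields the grant order `a0`, `b0`, `c0`, `a1`: port 0 cannot send its second message until every other pending port has been served once, which is the fairness property a round-robin arbiter provides.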

Boot Manager

Host commands are dispatched to the boot_manager module, which is connected to the Thread Controller and to the control registers of the core. This module implements the core-side logic of the item interface, described in the Item Interface section, and drives the core according to the messages received from the host.

IO Interface

MC tile

H2C tile

NONE tile

HT tile

Described in the Heterogeneous Tile section.