Load/Store unit

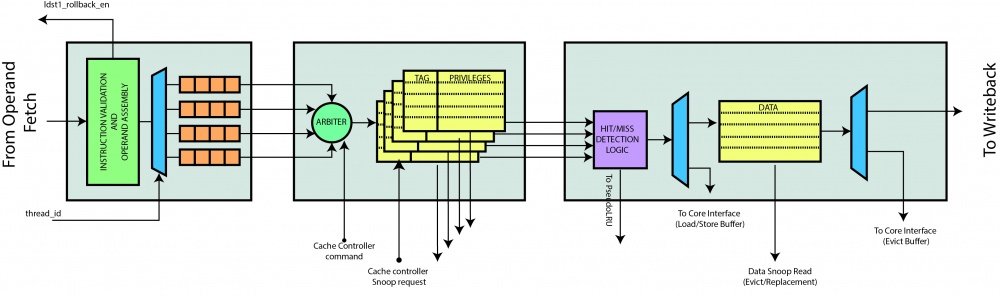

Latest revision as of 13:30, 3 May 2019

Load/Store unit provides access to the L1 N-way set-associative cache and manages the load and store operations coming from the core. It is organized as a three-stage pipeline, and each stage is described in detail below.

On the core side, it interfaces with the Operand fetch stage and the Writeback stage. On the bus side, it communicates with the cache controller, which updates both line information (namely tags and privileges) and data. The unit also sends a signal to the instruction buffer unit so that a thread can be stopped in case of a miss.

Load/Store unit does not store any information about the coherency protocol in use, although it keeps track of the privileges on all cache addresses. Each stored cache line has two privileges, can read and can write. These privileges determine cache hits and misses and are updated by the Cache Controller.

Finally, this unit does not manage addresses in the IO address space. Whenever an issued request belongs to the non-coherent memory space, it is forwarded directly to the Cache Controller. The Cache Controller bypasses the coherency protocol, sends the request to the memory controller and reports the data back to the third stage.

Stage 1

This stage contains one queue per thread. Each queue holds the load/store instructions coming from the Operand Fetch stage that are waiting to be scheduled.

Before a request is queued, its operation type is decoded (word, half-word or byte), and the control logic then checks the alignment of the incoming request. Alignment is checked for both scalar and vector operations on bytes, half-words and words. For vector operations, the data is also compressed so that it can be written consecutively. For example, a vec16i8, which has one significant byte every 4 bytes, is compressed into 16 consecutive bytes. The following lines compute all the possible alignments:

assign is_1_byte_aligned = 1'b1;
assign is_2_byte_aligned = ~( effective_address[0] );
assign is_4_byte_aligned = ~( |effective_address[1 : 0] );
assign is_16_byte_aligned = ~( |effective_address[3 : 0] );
assign is_32_byte_aligned = ~( |effective_address[4 : 0] );
assign is_64_byte_aligned = ~( |effective_address[5 : 0] );

Then, the following code determines if the access is misaligned based on the current operation and the required alignment for the memory access:

assign is_misaligned =
    ( is_vectorial_op &&
        ( ( is_byte_op && !is_16_byte_aligned ) ||
          ( is_halfword_op && !is_32_byte_aligned ) ||
          ( is_word_op && !is_64_byte_aligned ) ) ) ||
    ( !is_vectorial_op &&
        ( ( is_byte_op && !is_1_byte_aligned ) ||
          ( is_halfword_op && !is_2_byte_aligned ) ||
          ( is_word_op && !is_4_byte_aligned ) ) );
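The two expressions above follow one rule. For a power-of-two access size N, the access is aligned exactly when the address bits below log2(N) are zero. N is 1, 2 or 4 bytes for scalars and 16 times that for vectors. Below is a minimal sketch of this equivalent formulation. The module name misalign_sketch and the 7-bit size encoding are hypothetical. The sketch assumes that exactly one of is_byte_op, is_halfword_op and is_word_op is asserted.

```systemverilog
// Hypothetical sketch: misalignment expressed as "address mod access size != 0".
// Assumes exactly one of the op flags is asserted and ADDR_W >= 7.
module misalign_sketch #(
    parameter int ADDR_W = 32
) (
    input  logic [ADDR_W-1:0] effective_address,
    input  logic              is_vectorial_op,
    input  logic              is_byte_op,      // kept for interface parity (byte is the default)
    input  logic              is_halfword_op,
    input  logic              is_word_op,
    output logic              is_misaligned
);
    logic [6:0] access_bytes;   // 1, 2, 4 (scalar) or 16, 32, 64 (vector)

    always_comb begin
        // Element size in bytes.
        if (is_word_op)          access_bytes = 7'd4;
        else if (is_halfword_op) access_bytes = 7'd2;
        else                     access_bytes = 7'd1;
        // A vector operation accesses 16 elements.
        if (is_vectorial_op)
            access_bytes = access_bytes << 4;
        // Power-of-two size: misaligned iff any address bit below log2(size) is set.
        is_misaligned = |( effective_address[6:0] & ( access_bytes - 7'd1 ) );
    end
endmodule
```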

On a misaligned access, the is_misaligned bit is asserted and a rollback request is raised to the Rollback handler. The Rollback handler issues the trap for the current thread, which then jumps to `LDST_ADDR_MISALIGN_ISR, the address of the corresponding trap handler:

always_ff @ ( posedge clk, posedge reset )
    if ( reset )
        ldst1_rollback_en <= 1'b0;
    else
        ldst1_rollback_en <= ( is_misaligned | is_out_of_memory ) & instruction_valid;

always_ff @ ( posedge clk ) begin
    ldst1_rollback_pc <= `LDST_ADDR_MISALIGN_ISR;
    ldst1_rollback_thread_id <= opf_inst_scheduled.thread_id;
end

If the access is aligned, the instruction is enqueued into the FIFO of its thread. This happens only if the instruction is valid or is a flush request, and no rollback is occurring:

assign fifo_enqueue_en = ( instruction_valid | is_flush ) && opf_inst_scheduled.thread_id == thread_id_t'( thread_idx ) && !ldst1_rollback_en;
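Stage 1 has one FIFO per thread, so the enqueue condition is replicated once per thread (thread_idx in the expression above). The following hypothetical sketch shows that replication as a generate loop that produces one enable bit per FIFO. The module name, the THREAD_NUMB parameter and the plain thread_id port are assumptions made to keep the sketch self-contained.

```systemverilog
// Hypothetical sketch: one enqueue enable per thread FIFO.
// THREAD_NUMB and the port names are assumptions; the expression mirrors the text.
module enqueue_sketch #(
    parameter int THREAD_NUMB = 4
) (
    input  logic                           instruction_valid,
    input  logic                           is_flush,
    input  logic [$clog2(THREAD_NUMB)-1:0] thread_id,        // thread of the scheduled instruction
    input  logic                           ldst1_rollback_en,
    output logic [THREAD_NUMB-1:0]         fifo_enqueue_en   // one bit per thread FIFO
);
    for (genvar thread_idx = 0; thread_idx < THREAD_NUMB; thread_idx++) begin : ENQ
        // Enqueue only in the FIFO of the issuing thread, and never during a rollback.
        assign fifo_enqueue_en[thread_idx] = ( instruction_valid | is_flush )
                                          && thread_id == thread_idx
                                          && !ldst1_rollback_en;
    end
endmodule
```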

The instruction (scalar or vector) is then written into the FIFO with its data appropriately aligned. For example, for a half-word operation:

if ( is_halfword_op ) begin
    fifo_input.store_value = halfword_aligned_scalar_data;
    fifo_input.store_mask = halfword_aligned_scalar_mask;
    ...
end
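The vector compression described at the beginning of this stage can be illustrated with the vec16i8 case. This sketch assumes that the significant byte is the least significant byte of each 32-bit lane. The module and port names are hypothetical, and the actual unit also handles the other vector and scalar cases.

```systemverilog
// Hypothetical sketch of vec16i8 compression: 16 lanes of 32 bits, each carrying
// one significant byte (assumed to be the low byte), packed into 16 consecutive bytes.
module vec16i8_compress_sketch (
    input  logic [16*32-1:0] lanes,         // 16 lanes x 32 bits = 512 bits
    output logic [16*8-1:0]  packed_bytes   // 16 consecutive bytes = 128 bits
);
    for (genvar lane = 0; lane < 16; lane++) begin : PACK
        // Keep only the least significant byte of each lane.
        assign packed_bytes[8*lane +: 8] = lanes[32*lane +: 8];
    end
endmodule
```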

Finally, the pending instruction of each thread is forwarded to the next stage, with all threads in parallel. When the next stage can execute the instruction provided by the i-th thread, it asserts the ldst2_dequeue_instruction signal, which notifies stage 1 that the instruction has been consumed. This way, the instruction at the head of each FIFO stalls while the next stage is busy.

Stage 1 also has a recycle buffer: if a cache miss occurs in Stage 3, the instruction and its operands are saved in this buffer. The output of this buffer competes with the normal load/store instructions, but recycled instructions have higher priority than the other operations.

Stage 2

This stage manages line information (tags and privileges), while data is handled by the next stage. The cache controller updates this information according to the implemented coherency protocol (MSI by default).

This stage receives in parallel as many requests as there are threads, and selects one of the requests pending from the previous stage. The thread is chosen by a Round Robin arbiter. Once the winning thread index is known, if that thread has both a valid recycled request and a valid FIFO request, the recycled one is served first because it has higher priority:

always_comb begin
    if ( ldst1_recycle_valid[ldst1_fifo_winner_id] ) begin
        ... // recycled request (higher priority)
    end else begin
        ... // normal request from the FIFO
    end
end

If the cache controller sends a request (by asserting the cc_update_ldst_valid signal), the arbiter gives it maximum priority and takes no instruction from the FIFOs. Priority is managed by these signals:

// thread requests are masked while the cache controller is active and for sleeping threads
ldst1_fifo_requestor = ( ldst1_valid | ldst1_recycle_valid ) & {`THREAD_NUMB{~cc_update_ldst_valid}} & ~sleeping_thread_mask_next;
// if there is a valid request
ldst1_request_valid = |ldst1_fifo_requestor;
// a cache controller request, when present, selects the tag set and becomes the next request
tag_sram_read1_address = ( cc_update_ldst_valid ) ? cc_update_ldst_address.index : ldst1_fifo_request.address.index;
next_request = ( cc_update_ldst_valid ) ? cc_update_request : ldst1_fifo_request;
ldst2_valid = ldst1_request_valid;
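The selection in this stage can be sketched as a round-robin arbiter over the masked requestor vector, followed by the recycle-over-FIFO priority of the winning thread. This is a hypothetical, illustrative implementation that uses a rotating search starting after the last winner. It is not the arbiter used by the actual design. The signal names reuse the ones above, while last_winner, winner_is_recycled and the THREAD_NUMB parameter are assumptions.

```systemverilog
// Hypothetical round-robin selection sketch (assumes THREAD_NUMB >= 2).
module rr_ldst_arbiter_sketch #(
    parameter int THREAD_NUMB = 4
) (
    input  logic                           clk,
    input  logic                           reset,
    input  logic [THREAD_NUMB-1:0]         ldst1_valid,
    input  logic [THREAD_NUMB-1:0]         ldst1_recycle_valid,
    input  logic                           cc_update_ldst_valid,
    input  logic [THREAD_NUMB-1:0]         sleeping_thread_mask_next,
    output logic                           ldst1_request_valid,
    output logic [$clog2(THREAD_NUMB)-1:0] ldst1_fifo_winner_id,
    output logic                           winner_is_recycled
);
    localparam int TW = $clog2(THREAD_NUMB);

    logic [THREAD_NUMB-1:0] ldst1_fifo_requestor;
    logic [TW-1:0]          last_winner;

    // Same masking as in the text: cache controller commands and sleeping threads block requests.
    assign ldst1_fifo_requestor = ( ldst1_valid | ldst1_recycle_valid )
                                & {THREAD_NUMB{~cc_update_ldst_valid}}
                                & ~sleeping_thread_mask_next;
    assign ldst1_request_valid  = |ldst1_fifo_requestor;

    always_comb begin
        ldst1_fifo_winner_id = '0;
        // Scan from the farthest offset to the nearest: the last match, which is the
        // first requesting thread after last_winner, is the one that is kept.
        for (int off = THREAD_NUMB; off >= 1; off--)
            if (ldst1_fifo_requestor[(last_winner + off) % THREAD_NUMB])
                ldst1_fifo_winner_id = TW'((last_winner + off) % THREAD_NUMB);
        // Within the winning thread, a recycled request beats the FIFO head.
        winner_is_recycled = ldst1_request_valid & ldst1_recycle_valid[ldst1_fifo_winner_id];
    end

    // Remember the last grant so that the next search starts right after it.
    always_ff @(posedge clk, posedge reset)
        if (reset)
            last_winner <= TW'(THREAD_NUMB - 1);   // thread 0 is searched first
        else if (ldst1_request_valid)
            last_winner <= ldst1_fifo_winner_id;
endmodule
```

The search starts right after the previous winner. As a result, a thread that keeps requesting is granted within THREAD_NUMB consecutive grants, which is the fairness property expected from a round-robin arbiter.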

As stated above, this stage manages tags and privileges. The tag caches are accessed in parallel, so they have two read ports and one write port. The second read port is used exclusively by the Cache Controller to manage the coherency protocol, through its privileged bus called cc_snoop_request. Writes are performed by either the Core or the Cache Controller. At this stage, requests from the Core (loads or stores) only read tags and privileges. On a store hit, the Core's write is finalized in the next stage.

The cache controller can send different commands to the Load/Store unit.

In case of CC_INSTRUCTION, stage 2 receives an instruction that could not be served previously. Here the cache controller provides the new privileges, tags and data. This stage forwards them to the next one in order to "complete" the operation, whether it is a load or a store. In detail, ldst2_valid is asserted, the instruction is propagated on ldst2_instruction, the new address on ldst2_address and the data on ldst2_store_value, together with the related masks, tags and privileges.

In case of CC_UPDATE_INFO, stage 2 receives a command to update the information (tags and privileges) of a given set. Nothing is propagated and no valid signal is asserted towards the next stage, because the data cache does not need to be updated. The privileges and the cache tag are updated with the values provided by the cache controller. The cache controller is responsible for the PseudoLRU replacement policy, so it also indicates which way must be updated.

In case of CC_UPDATE_INFO_DATA, stage 2 receives an update command, but the data cache must be updated as well. Stage 2 propagates the address information (used to identify the index of the set), together with the way and value provided by the cache controller. As in the previous case, the privileges and the cache tag are updated with the values provided by the cache controller. The next stage is notified by asserting ldst2_update_valid, and stage 3 must not propagate anything to the Writeback stage. In detail, only ldst2_update_valid is asserted. The way is passed on ldst2_update_way, the new address on ldst2_address and the data on ldst2_store_value.

In case of CC_EVICT, stage 2 must notify the next stage that a line must be replaced. It does so by asserting ldst2_evict_valid and providing the complete address of the line to be replaced (which has the same index as the new one), the way to use and the data. In detail, ldst2_evict_valid and ldst2_update_valid are both asserted, the data is passed on ldst2_store_value and the way on ldst2_update_way. Stage 3 reconstructs the old address from the previous tag and the index of the new line.
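These four commands differ in which valid signals reach stage 3. That mapping can be sketched as a small decoder. The enum encoding, the package, the module name and the cc_update_ldst_command port are hypothetical. The sketch covers only the cache controller path, not normal thread requests, which also assert ldst2_valid.

```systemverilog
// Hypothetical encoding of the four commands (the real encoding is not shown in the text).
package cc_sketch_pkg;
    typedef enum logic [1:0] {
        CC_INSTRUCTION,
        CC_UPDATE_INFO,
        CC_UPDATE_INFO_DATA,
        CC_EVICT
    } cc_command_sketch_t;
endpackage

// Hypothetical decoder: cache controller command -> Stage 2 to Stage 3 valid signals.
module cc_command_decode_sketch (
    input  logic                              cc_update_ldst_valid,
    input  cc_sketch_pkg::cc_command_sketch_t cc_update_ldst_command,  // hypothetical port
    output logic                              ldst2_valid,        // replayed instruction
    output logic                              ldst2_update_valid, // write a data cache line
    output logic                              ldst2_evict_valid   // also send the old line to the CC
);
    import cc_sketch_pkg::*;

    always_comb begin
        ldst2_valid        = 1'b0;
        ldst2_update_valid = 1'b0;
        ldst2_evict_valid  = 1'b0;
        if (cc_update_ldst_valid)
            unique case (cc_update_ldst_command)
                CC_INSTRUCTION:      ldst2_valid        = 1'b1;
                CC_UPDATE_INFO:      ;  // tags and privileges only: nothing is forwarded
                CC_UPDATE_INFO_DATA: ldst2_update_valid = 1'b1;
                CC_EVICT: begin
                    ldst2_update_valid = 1'b1;
                    ldst2_evict_valid  = 1'b1;
                end
            endcase
    end
endmodule
```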

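The last responsibility of this stage is to manage threads waiting on a cache miss. A thread that caused a cache miss is put to sleep (via the ldst3_thread_sleep signal). It is woken up when the cache controller asserts cc_wakeup, with thread_wakeup_oh selecting the thread: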

assign sleeping_thread_mask_next = ( sleeping_thread_mask | ldst3_thread_sleep ) & ( ~thread_wakeup_mask );
assign thread_wakeup_mask = thread_wakeup_oh & {`THREAD_NUMB{cc_wakeup}};
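The two assignments above describe the next value of a per-thread sleep mask. The self-contained sketch below assumes that the mask is a register reset to zero. The register, the module name and the THREAD_NUMB parameter are assumptions. The sketch shows a thread going to sleep on a miss and staying asleep until the cache controller wakes it. Because of the final mask, a wake-up takes precedence over a sleep request for the same thread in the same cycle.

```systemverilog
// Hypothetical sleep/wake bookkeeping sketch; the two assigns mirror the text.
module sleep_mask_sketch #(
    parameter int THREAD_NUMB = 4
) (
    input  logic                   clk,
    input  logic                   reset,
    input  logic [THREAD_NUMB-1:0] ldst3_thread_sleep,  // threads that missed in Stage 3
    input  logic [THREAD_NUMB-1:0] thread_wakeup_oh,    // one-hot thread to wake
    input  logic                   cc_wakeup,           // wake-up strobe from the cache controller
    output logic [THREAD_NUMB-1:0] sleeping_thread_mask_next
);
    logic [THREAD_NUMB-1:0] sleeping_thread_mask;       // assumed registered state
    logic [THREAD_NUMB-1:0] thread_wakeup_mask;

    assign thread_wakeup_mask        = thread_wakeup_oh & {THREAD_NUMB{cc_wakeup}};
    assign sleeping_thread_mask_next = ( sleeping_thread_mask | ldst3_thread_sleep ) & ~thread_wakeup_mask;

    always_ff @(posedge clk, posedge reset)
        if (reset) sleeping_thread_mask <= '0;
        else       sleeping_thread_mask <= sleeping_thread_mask_next;
endmodule
```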

When a memory transaction falls into the IO memory space, the L1 is bypassed. The following code checks, for each thread, whether its pending instruction falls in the IO memory space:

localparam IOM_BASE_ADDR = `IO_MAP_BASE_ADDR;
localparam IOM_SIZE = `IO_MAP_SIZE;

always_comb begin
    for ( integer i = 0; i < $bits(thread_mask_t); i++ ) begin
        if ( ldst1_recycle_valid[i] ) begin
            // check the address of the recycled request
            ldst1_fifo_is_io_memspace[i] = ldst1_recycle_address[i] >= IOM_BASE_ADDR & ldst1_recycle_address[i] <= ( IOM_BASE_ADDR + IOM_SIZE );
            ldst1_fifo_is_io_memspace_dequeue_condition[i] = ( ldst1_fifo_is_io_memspace[i] ) ? ( io_intf_resp_valid & io_intf_wakeup_thread == thread_id_t'(i) ) : 1'b1;
        end else begin
            // check ldst1_address
            ldst1_fifo_is_io_memspace[i] = ldst1_valid[i] & ( ldst1_address[i] >= IOM_BASE_ADDR & ldst1_address[i] <= ( IOM_BASE_ADDR + IOM_SIZE ) );
            ldst1_fifo_is_io_memspace_dequeue_condition[i] = ( ldst1_fifo_is_io_memspace[i] ) ? io_intf_available : 1'b1;
        end
    end
end

Then, the ldst1_fifo_is_io_memspace bit for the scheduled thread is forwarded to the next stage:

always_ff @ ( posedge clk, posedge reset )
    if ( reset ) begin
        ldst2_valid <= 1'b0;
        ...
        ldst2_io_memspace <= 1'b0;
        ldst2_io_memspace_has_data <= 1'b0;
    end else begin
        ldst2_io_memspace <= ldst1_fifo_is_io_memspace[ldst1_fifo_winner_id];
        ldst2_io_memspace_has_data <= ldst1_recycle_valid[ldst1_fifo_winner_id] & ldst1_fifo_is_io_memspace[ldst1_fifo_winner_id];
        ldst2_valid <= ldst1_request_valid;
        ...
    end
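The per-thread IO check used in this stage is an address range comparison. The sketch below isolates it for a single request. The base address, the size and the module name are hypothetical placeholders for `IO_MAP_BASE_ADDR and `IO_MAP_SIZE. The sketch assumes that the size is a byte count and therefore uses the half-open range [BASE, BASE + SIZE). The code above compares with <=, which also includes the address BASE + SIZE. Which form is intended depends on how `IO_MAP_SIZE is defined.

```systemverilog
// Hypothetical IO range check for one request. Base and size are placeholders;
// the range is half-open, [IOM_BASE_ADDR, IOM_BASE_ADDR + IOM_SIZE), and
// BASE + SIZE is assumed not to overflow 32 bits.
module io_range_sketch #(
    parameter logic [31:0] IOM_BASE_ADDR = 32'hFF00_0000,
    parameter logic [31:0] IOM_SIZE      = 32'h0001_0000
) (
    input  logic [31:0] address,
    input  logic        valid,
    output logic        is_io_memspace
);
    assign is_io_memspace = valid && address >= IOM_BASE_ADDR
                                  && address <  IOM_BASE_ADDR + IOM_SIZE;
endmodule
```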

Stage 3

This stage receives instructions, data update requests and replacement requests. Before generating its outputs, it must determine the type of request received (instruction, data update or replacement). For instructions, the control logic must also check for a hit or a miss. It must further detect a load_miss or store_miss, which occurs when the necessary privileges are missing.

assign is_instruction = ldst2_valid & ~ldst2_is_flush;
assign is_replacement = ldst2_update_valid && ldst2_evict_valid;
assign is_update = ldst2_update_valid && !ldst2_evict_valid;
assign is_flush = ldst2_valid & ldst2_is_flush & is_hit & ~cr_ctrl_cache_wt;
assign is_store = is_instruction && !ldst2_instruction.is_load;
assign is_load = is_instruction && ldst2_instruction.is_load;

A cache hit is asserted when, for some way (dcache_way), two conditions hold. First, the tag read from the tag cache for the requested set (passed from Stage 2) equals the tag of the requested address. Second, that line has read or write privileges:

for ( dcache_way = 0; dcache_way < `DCACHE_WAY; dcache_way++ ) begin : HIT_MISS_CHECK
    assign way_matched_oh[dcache_way] = ( ( ldst2_tag_read[dcache_way] == ldst2_address.tag &&
        ( ldst2_privileges_read[dcache_way].can_write || ldst2_privileges_read[dcache_way].can_read ) ) && ldst2_valid );
end

assign is_hit = |way_matched_oh;

Next, the unit verifies that the privileges on the block that hit allow the requested operation. For example, if a store hits, the block must have write permission; otherwise a store miss occurs:

assign is_store_hit = is_store && ldst2_privileges_read[way_matched_idx].can_write && is_hit;
assign is_store_miss = is_store && ~is_store_hit;
assign is_load_hit = is_load && ldst2_privileges_read[way_matched_idx].can_read && is_hit;
assign is_load_miss = is_load && ~is_load_hit;

On a store hit, the data is saved in the data cache and nothing is sent outwards. On a load hit, the ldst3_valid signal is asserted and the data is sent to the Writeback stage. On a load or store miss, ldst3_miss is asserted towards the cache controller, and the thread that missed must be put to sleep:

ldst3_thread_sleep[thread_idx] = ( is_load_miss || is_store_miss ) && ldst2_instruction.thread_id == thread_id_t'( thread_idx );
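The hit and privilege checks above can be collected into a single self-contained sketch. The sketch also derives way_matched_idx from way_matched_oh, which the code above uses but does not show. The one-hot-to-index loop, the WAYS and TAG_W parameters and the split of privileges into can_read/can_write vectors are assumptions.

```systemverilog
// Hypothetical Stage 3 hit/miss sketch for one request (assumes WAYS >= 2).
module hit_miss_sketch #(
    parameter int WAYS  = 4,
    parameter int TAG_W = 20
) (
    input  logic             ldst2_valid,
    input  logic             is_load,                // already qualified as in the text
    input  logic             is_store,
    input  logic [TAG_W-1:0] request_tag,            // ldst2_address.tag
    input  logic [TAG_W-1:0] ldst2_tag_read [WAYS],  // tags of the addressed set
    input  logic [WAYS-1:0]  can_read,               // per-way privileges
    input  logic [WAYS-1:0]  can_write,
    output logic             is_hit,
    output logic             is_load_hit,
    output logic             is_load_miss,
    output logic             is_store_hit,
    output logic             is_store_miss
);
    localparam int IDX_W = $clog2(WAYS);

    logic [WAYS-1:0]  way_matched_oh;
    logic [IDX_W-1:0] way_matched_idx;

    // A way matches if its tag is equal and it holds at least one privilege.
    for (genvar w = 0; w < WAYS; w++) begin : HIT_MISS_CHECK
        assign way_matched_oh[w] = ldst2_tag_read[w] == request_tag
                                && ( can_write[w] || can_read[w] ) && ldst2_valid;
    end
    assign is_hit = |way_matched_oh;

    // One-hot to index: at most one way of a set can hold a given tag.
    always_comb begin
        way_matched_idx = '0;
        for (int w = 0; w < WAYS; w++)
            if (way_matched_oh[w]) way_matched_idx = IDX_W'(w);
    end

    assign is_store_hit  = is_store && can_write[way_matched_idx] && is_hit;
    assign is_store_miss = is_store && !is_store_hit;
    assign is_load_hit   = is_load  && can_read[way_matched_idx]  && is_hit;
    assign is_load_miss  = is_load  && !is_load_hit;
endmodule
```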

For update operations, the data cache line identified by the pair of signals {ldst2_address.index, ldst2_update_way} is updated with the new data ldst2_store_value coming from the previous stage.

Finally, for replacement operations, the cache line addressed by {ldst2_address.index, ldst2_update_way} is updated with the new value ldst2_store_value, as in the update case. Its old content is sent to the cache controller with the ldst3_evict signal asserted: the old address on ldst3_address and the old cache line contents on ldst3_cache_line.
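For a replacement, the address sent on ldst3_address belongs to the victim line, which shares the set index with the incoming line. Below is a hypothetical sketch of that reconstruction. It assumes a {tag, index, offset} address layout with illustrative field widths and a zero line offset.

```systemverilog
// Hypothetical sketch: rebuild the evicted line's address from the victim tag and
// the index of the incoming line. Field widths and the zero offset are assumptions.
module evict_address_sketch #(
    parameter int TAG_W    = 20,
    parameter int INDEX_W  = 6,
    parameter int OFFSET_W = 6
) (
    input  logic [TAG_W-1:0]                  victim_tag,     // tag stored in ldst2_update_way
    input  logic [INDEX_W-1:0]                request_index,  // ldst2_address.index of the new line
    output logic [TAG_W+INDEX_W+OFFSET_W-1:0] ldst3_address   // address sent with ldst3_evict
);
    // Same set, so the index is shared; a whole line starts at offset zero.
    assign ldst3_address = {victim_tag, request_index, {OFFSET_W{1'b0}}};
endmodule
```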

The data cache also has a second read port, which the cache controller uses for snoop requests, that is, the reads it issues to fetch data. This port works as a sort of fast lane. It is WRITE FIRST, so the cache controller receives the latest version of the data even while a store to that cache line is in progress.
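The WRITE FIRST behavior of the snoop port can be sketched as a synchronous read port that forwards the data being written when the write and snoop addresses collide. Depth, width and names are assumptions, and the normal load/store read port is omitted for brevity.

```systemverilog
// Hypothetical sketch: data array with one write port and a WRITE FIRST snoop read port.
module dcache_snoop_port_sketch #(
    parameter int DEPTH = 16,
    parameter int WIDTH = 32
) (
    input  logic                     clk,
    input  logic                     write_en,
    input  logic [$clog2(DEPTH)-1:0] write_address,
    input  logic [WIDTH-1:0]         write_data,
    input  logic [$clog2(DEPTH)-1:0] snoop_address,
    output logic [WIDTH-1:0]         snoop_data
);
    logic [WIDTH-1:0] mem [DEPTH];

    always_ff @(posedge clk) begin
        if (write_en)
            mem[write_address] <= write_data;
        // WRITE FIRST: on an address collision, return the data being written
        // instead of the stale array content.
        if (write_en && write_address == snoop_address)
            snoop_data <= write_data;
        else
            snoop_data <= mem[snoop_address];
    end
endmodule
```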

When the address falls in the IO memory space, the normal load and store flows are disabled:

assign is_store = ~ldst2_io_memspace & is_instruction && !ldst2_instruction.is_load;
assign is_load = ~ldst2_io_memspace & is_instruction && ldst2_instruction.is_load;

Instead, the IO request is forwarded to the cache controller through a dedicated interface:

always_ff @ ( posedge clk, posedge reset ) begin : IO_MAP_CONTROL_OUT
    if ( reset )
        ldst3_io_valid <= 1'b0;
    else
        ldst3_io_valid <= ldst2_valid & ldst2_io_memspace & ~ldst2_io_memspace_has_data;
end

always_ff @ ( posedge clk ) begin : IO_MAP_OUTPUT
    if ( ldst2_io_memspace ) begin
        ldst3_io_thread <= ldst2_instruction.thread_id;
        ldst3_io_operation <= ldst2_instruction.op_code.mem_opcode == STORE_32 ? IO_WRITE : IO_READ;
        ldst3_io_address <= ldst2_address;
        ldst3_io_data <= ldst2_store_value[$bits(register_t)-1 : 0];
    end
end