Difference between revisions of "Programming Model"

(→OpenCL Example) |

(→NaplesPU Vector intrinsics) |

||

| (61 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | == OpenCL support for | + | = NaplesPU Programming Model = |

| + | |||

| + | ==SIMD Support== | ||

| + | Arithmetic operators (+, -, *, /, %), relational operators (==, !=, <, <=, >, >=), bitwise operators (&, |, ^, ~, <<, >>), logical operators (&&, ||, !) and assignment operators (=, +=, -=, *=, /=, %=, <<=, >>=, &=, ^=, |=) can be used with scalar and vector types and produce a scalar or vector signed integer result respectively. In some cases, mixed scalar/vector operations are possible. In such a cases, the scalar is considered as a vector with all elements equal to the scalar value. | ||

| + | |||

| + | For instance, to add two vectors: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | vec16i32 b; | ||

| + | … | ||

| + | vec16i32 c = a+b; | ||

| + | } | ||

| + | |||

| + | or a vector with a scalar: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | int b; | ||

| + | … | ||

| + | vec16i32 c = a+b; | ||

| + | } | ||

| + | |||

| + | In order to access vector elements, it is possible to use the operator []. For instance | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | // assign some values: | ||

| + | for (int i=0; i<16; i++) a[i]=i; | ||

| + | int sum = 0; | ||

| + | // calculate sum | ||

| + | for (int i=0; i<16; i++) sum += a[i]; | ||

| + | } | ||

| + | |||

| + | Vectors can be initialized using curly bracket syntax. For instance, a constant vector: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | const vec16i32 a = { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15 }; | ||

| + | } | ||

| + | |||

| + | or a non-constant vector | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | int x, y, z; | ||

| + | ... | ||

| + | vec16i32 a = { x, y, z, x, y, z, x, y, z, x, y, z, x, y, z, x}; | ||

| + | } | ||

| + | |||

| + | Conversions between vectors with the same number of elements can be performed using the LLVM intrisic __builtin_convertvector. Vector types v16i32, v16u32, v16f32 can be converted between each other. Similarly, vector types v8i64, v8u64, v8f64can be converted between each other. For instance: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16f32 a; | ||

| + | ... | ||

| + | vec16i32 d = __builtin_convertvector(a,vec16i32); | ||

| + | } | ||

| + | |||

| + | It is also possible to convert floating point vectors with a different number of elements. In such a case, it is required to use the two NPU intrinsics __builtin_npu_v8f64tov16f32 or __builtin_npu_v16f32tov8f64. The first one converts 8 double precision FP elements into 8 single precision FP elements that are placed in the first 8 elements of a v16f32 vector. The second one converts the first 8 single precision FP elements of a v16f32 vector into 8 double precision FP elements. For instance: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16f32 a; | ||

| + | ... | ||

| + | vec8f64 b = __builtin_npu_v16f32tov8f64(a); | ||

| + | } | ||

| + | |||

| + | Vector comparisons can be done in two different ways. It is possible to use the traditional relational operators that result in another vector type of the same size of the two vectors. For instance: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | vec16i32 b; | ||

| + | … | ||

| + | vec16i32 c = a < b; | ||

| + | } | ||

| + | |||

| + | After executing the above code, the vector c elements will be equal to 0xFFFFFFFF or 0x00000000 according to the result of the comparison. | ||

| + | In addition, we also provide vector comparison intrinsics, as follow: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | vec16i32 b; | ||

| + | … | ||

| + | int c = __builtin_npu_mask_cmpi32_slt (a, b) | ||

| + | } | ||

| + | |||

| + | After executing the above code, the integer c will contain a bitmap that can be directly used to write the mask register if required. Using vector comparison intrinsics is the natural way of performing comparisons in NaplesPU. | ||

| + | |||

| + | Note that, in NaplesPU, all instructions are masked and at the beginning, all lanes are enabled. If you want to handle SIMD control flow, you need to explicitly take care of masking operations so that they are only applied to some elements. For instance, take a look at the above code: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | vec16i32 b; | ||

| + | … | ||

| + | int c = __builtin_npu_mask_cmpi32_slt (a, b) //generate mask for a<b | ||

| + | int rm_old = __builtin_npu_read_mask_reg(); //save mask register | ||

| + | __builtin_npu_write_mask_reg(c); //write mask register for a<b | ||

| + | do_something(); | ||

| + | c = c^-1; //generate mask for a greater equal b | ||

| + | __builtin_npu_write_mask_reg(c); //write mask register for a greater equal b | ||

| + | do_somethingelse(); | ||

| + | __builtin_npu_write_mask_reg(rm_old); //restore the old mask | ||

| + | } | ||

| + | |||

| + | == Thread Parallelism == | ||

| + | In a NPU core each thread runs the same code provided by the user, through builtins developers can parallelize it or differentiate flows. Each thread has a private stack, while the memory system is distributed and all threads have the same view of the main memory. User can differentiate threads flow based on the running thread ID, as described in [[Core#Control_Register|control register]] each thread can read its ID from the control register through the <code>__builtin_npu_read_control_reg</code> accessing to the needed control register (for fetching thread ID use 2, for core ID use 0 or 1): | ||

| + | #define CORE_ID __builtin_npu_read_control_reg(0) | ||

| + | #define THREAD_ID __builtin_npu_read_control_reg(2) | ||

| + | |||

| + | In the current release, each tile is equipped with 1 NPU core, hence each core ID overlaps with its tile ID. | ||

| + | |||

| + | ===Thread Synchronization=== | ||

| + | NaplesPU supports barrier synchronization among threads. The programmer needs to know the number of threads that require to synchronize, i.e. NumberOfThreads. For each synchronization, there is a unique barrier ID. The NaplesPU intrinsic <code>__builtin_npu_barrier(BarrierID, NumberOfThreads-1)</code> takes care of the synchronization. It is possible to exploit a maximum number of barriers equal to Bmax, i.e. 4 x number of threads. Barrier IDs range from 0 to Bmax-1. Note that, same barrier ID cannot be used in different kernels and can be used multiple times inside a kernel only by the same threads or by a subset of them. | ||

| + | |||

| + | In the following example, we have four threads and two barriers. After performing some operations, all threads synchronize on barrier 1. Then, only threads 0 and 1 synchronize on barrier 2. Remember that, in the main code, the user has to provide the total number of synchronizing threads: | ||

| + | #include <stdint.h> | ||

| + | static vec16i32 C[4]; | ||

| + | static vec16i32 D[2]; | ||

| + | const vec16i32 A[4]={{...} ,{...},{...}, {...}}; | ||

| + | const vec16i32 B[4]={{...},{...},{...}, {...}}; | ||

| + | int main(){ | ||

| + | //execution thread 0,1,2,3 | ||

| + | int threadId = __builtin_npu_read_control_reg(2); | ||

| + | C[threadId] = A[threadId] + B[threadId]; | ||

| + | __builtin_npu_barrier(1,3);//Synchronization Threads:0,1,2,3. | ||

| + | |||

| + | if(threadId<2){ | ||

| + | //execution thread 0,1 | ||

| + | D[threadId]=C[threadId*2]+C[(threadId*2)+1]; | ||

| + | __builtin_npu_barrier(2,1);//Synchronization Threads:0,1. | ||

| + | } | ||

| + | if(threadId==0){ | ||

| + | D[threadId]=D[threadId]+D[threadId+1]; | ||

| + | __builtin_npu_flush((int)(&D[threadId])); | ||

| + | } | ||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | == NaplesPU Other Aspects == | ||

| + | ===Flush instruction=== | ||

| + | The NaplesPU ISA has a flush instruction that is required to avoid data to keep stuck in the cache. It is mandatory to use this instruction in case of output data that is required from the host. Otherwise, the host will read data from the main memory that is not coherent with the cache. The flush instruction takes in input the address of the involved variable and flushes an entire 512-bit cache line. Please remember to cast to integer the address, otherwise you will see the following error: “cannot initialize a parameter of type 'int' with an rvalue of type (YOUR VARIABLE TYPE)*'”. For instance, take a look at the above code: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | vec16i32 b; | ||

| + | … | ||

| + | vec16i32 c = a + b; | ||

| + | __builtin_npu_flush((int)&c); | ||

| + | } | ||

| + | |||

| + | Please, remember that the flush instruction works for a single 512-bit cache line. As a consequence, multiple flush instructions are required in case of variable types bigger than 512 bits. | ||

| + | |||

| + | ===Scratchpad memory=== | ||

| + | In addition to traditional main memory, a NPU core supports a scratchpad memory with a different address space. In order to use the scratchpad memory, the <code>__scratchpad</code> keyword should be used when declaring a variable. Note that just a global variable can be placed in the scratchpad memory. For instance, take a look at the above code: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | __scratchpad int a; | ||

| + | int main (){ | ||

| + | ... | ||

| + | } | ||

| + | |||

| + | The compiler will recognize the keyword and the integer <code>a</code> variable will be placed in the scratchpad using appropriate load/store instructions. | ||

| + | |||

| + | ===Barrier Instruction=== | ||

| + | NPU supports barrier synchronization between threads within the same core or among different tiles. The programmer needs to know the number of threads that require to synchronize, i.e. <code>NumberOfThreads</code>. For each synchronization there is an unique barrier ID. The intrinsic __builtin_npu_barrier(BarrierID, NumberOfThreads-1) takes care of the synchronization. It is possible to exploit a maximum number of barriers equal to Bmax, i.e. 4 x number of threads. Barrier IDs range from 0 to Bmax-1. Note that the same barrier ID cannot be used in different kernels and can be used multiple times inside a kernel only by the same threads or by a subset of them. | ||

| + | |||

| + | In the following example, we have four threads and two barriers. After performing some execution, all threads synchronize on barrier 1. Then, only threads 0 and 1 synchronize on barrier 2. Remember that, on the host side, it is required to initialize barrier 0 with Count=4 and barrier 1 with Count=2. | ||

| + | |||

| + | #include <stdint.h> | ||

| + | static vec16i32 C[4]; | ||

| + | static vec16i32 D[2]; | ||

| + | const vec16i32 A[4]={{...} ,{...},{...}, {...}}; | ||

| + | const vec16i32 B[4]={{...},{...},{...}, {...}}; | ||

| + | |||

| + | int main(){ | ||

| + | //execution thread 0,1,2,3 | ||

| + | int threadId = __builtin_npu_read_control_reg(2); | ||

| + | C[threadId] = A[threadId] + B[threadId]; | ||

| + | __builtin_npu_barrier(1,3); //Synchronization Threads:0,1,2,3. | ||

| + | |||

| + | if(threadId<2){ | ||

| + | //execution thread 0,1 | ||

| + | D[threadId]=C[threadId*2]+C[(threadId*2)+1]; | ||

| + | __builtin_npu_barrier(2,1);//Synchronization Threads:0,1. | ||

| + | } | ||

| + | if(threadId==0){ | ||

| + | D[threadId]=D[threadId]+D[threadId+1]; | ||

| + | __builtin_npu_flush((int)(&D[threadId])); | ||

| + | } | ||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | == NaplesPU Intrinsics == | ||

| + | |||

| + | ===NaplesPU Misc Intrinsics=== | ||

| + | Main NaplesPU builtins are summarized in the following table: | ||

| + | |||

| + | {| class="wikitable" | ||

| + | ! style="font-weight: bold;" | Intrinsic name (when using these intrinsics you should add “__builtin_npu_” as prefix ) | ||

| + | ! style="font-weight: bold;" | Operation | ||

| + | ! style="font-weight: bold;" | Corresponding Instruction | ||

| + | |- | ||

| + | | void barrier (int a, int b) | ||

| + | | Refer to the description of the barrier instruction. a contains the barrier ID, while b contains the number of threads that should synchronize-1 | ||

| + | | barrier | ||

| + | |- | ||

| + | | void flush (int a) | ||

| + | | Flush a cache line to the main memory. a contains the memory address of the cache line. Explicit integer conversion is required. | ||

| + | | flush | ||

| + | |- | ||

| + | | int createmaskv16i32 (v16i32 a) | ||

| + | | Convert the vector a that is made of all elements equal to 0 or -1 into a 32-bit mask value that can be written in the mask register. It can be used to compute the bitmask when a vector comparison operation is performed using the common C/C++ relational operators. | ||

| + | | crt_maskv16 | ||

| + | |- | ||

| + | | void write_mask_reg(int a) | ||

| + | | Write a 32-bit bitmask inside the mask register | ||

| + | | move | ||

| + | |- | ||

| + | | void write_mask_regv16i32 (v16i32 a) | ||

| + | | Write a 512-bit vector mask inside the mask register. | ||

| + | | crt_maskv16 + move | ||

| + | |- | ||

| + | | int read_mask_reg () | ||

| + | | Read a 32-bit bitmask from the mask register. | ||

| + | | move | ||

| + | |- | ||

| + | | void write_control_reg (int a, int b) | ||

| + | | Write values to the mask register. The integer a contains the ID of the sub-register to access, while the integer b contains the data. | ||

| + | | write_cr | ||

| + | |- | ||

| + | | int read_control_reg (int a) | ||

| + | | Read values from the mask register. The integer a contains the ID of the sub-register to access. | ||

| + | | read_cr | ||

| + | |} | ||

| + | |||

| + | |||

| + | ===NaplesPU Vector intrinsics=== | ||

| + | {| class="wikitable" | ||

| + | ! style="font-weight: bold;" | Intrinsic name (when using these intrinsics you should add “__builtin_npu_” as prefix ) | ||

| + | ! style="font-weight: bold;" | Operation | ||

| + | ! style="font-weight: bold;" | Corresponding Instruction | ||

| + | |- | ||

| + | | vec16i32 makevectori32 (int a) | ||

| + | | Create a vector of 16 elements whose elements are all equal to a | ||

| + | | move_i32 | ||

| + | |- | ||

| + | | vec16f32 makevectorf32 (float a) | ||

| + | | Create a vector of 16 elements whose elements are all equal to a | ||

| + | | move_i32 | ||

| + | |- | ||

| + | | vec16i32 shufflei32 (vec16i32 a, vec16i32 b) or vec16i32 shufflef32 (vec16f32 a, vec16i32 b) | ||

| + | | Vector Shuffle - it allows elements of vector a to be copied to the output vector in different positions. The elements in vector b specify, for each corresponding position in the destination register, the indexes of the elements in the source vector register. (see the description of the shuffle instruction) | ||

| + | | shuffle_i32 or shuffle_f32 | ||

| + | |- | ||

| + | | int mask_cmp'w'32_'xyz' (vec16'w'32 a, vec16'w'32 b) | ||

| + | | Return an integer bitmask where the i-th bit is equal to one if | ||

| + | (a[i] comp b[i]) is true. Otherwise, the i-th bit is equal to zero. | ||

| + | w = type of compare: i for interger and f for float. | ||

| + | x = s if signed or u otherwise. | ||

| + | yz = traditional compare conditions, namely: gt, ge, lt, le | ||

| + | | cmp'xyz'_'w'32 | ||

| + | |- | ||

| + | | int mask_cmp'w'32_eq (vec16'w'32 a, vec16'w'32 b) | ||

| + | | Return an integer bitmask where the i-th bit is equal to one if | ||

| + | (a[i] comp b[i]) is true. Otherwise, the i-th bit is equal to zero. | ||

| + | w = type of compare: i for interger and f for float. | ||

| + | | cmpeq_'w'32 | ||

| + | |- | ||

| + | | int mask_cmp'w'32_ne (vec16'w'32 a, vec16'w'32 b) | ||

| + | | Return an integer bitmask where the i-th bit is equal to one if | ||

| + | (a[i] comp b[i]) is true. Otherwise, the i-th bit is equal to zero. | ||

| + | w = type of compare: i for interger and f for float. | ||

| + | | cmpne_'w'32 | ||

| + | |} | ||

| + | |||

| + | Vector comparisons can be done in two different ways. It is possible to use the traditional relational operators that result in another vector type of the same size of the two vectors. For instance: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | vec16i32 b; | ||

| + | … | ||

| + | vec16i32 c = a < b; | ||

| + | } | ||

| + | |||

| + | After executing the above code, the vector c elements will be equal to 0xFFFFFFFF or 0x00000000 according to the result of the comparison. | ||

| + | In addition, we also provide vector comparison intrinsics, as follow: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16i32 a; | ||

| + | vec16i32 b; | ||

| + | … | ||

| + | int c = __builtin_npu_mask_cmpi32_slt (a, b) | ||

| + | } | ||

| + | |||

| + | After executing the above code, the integer c will contain a bitmap that can be directly used to write the mask register if required. Using vector comparison intrinsics is the natural way of performing comparisons in NPU. | ||

| + | |||

| + | Note that, in NPU, all vector operations are masked and after the boot all hardware lanes are enabled. If you want to handle SIMD control flow, you need to explicitly take care of masking operations so that they are only applied to some elements. For instance, take a look to the above code: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | volatile vec16i32 a; | ||

| + | volatile vec16i32 b; | ||

| + | … | ||

| + | int c = __builtin_npu_mask_cmpi32_slt (a, b) //generate mask for a lt b | ||

| + | int rm_old = __builtin_npu_read_mask_reg(); //save mask register | ||

| + | __builtin_npu_write_mask_reg(c); //write mask register for a lt b | ||

| + | do_something(); | ||

| + | c = c^-1; //generate mask for a ge b | ||

| + | __builtin_npu_write_mask_reg(c); //write mask register for a ge b | ||

| + | do_somethingelse(); | ||

| + | __builtin_npu_write_mask_reg(rm_old); //restore the old mask | ||

| + | } | ||

| + | |||

| + | In those cases, it is important to prevent compiler optimizations of the involved vectors and variable marking them as volatile. The compiler tends to rearrange operation, and it is good practice to double-check the compiler objdumb in order to check the order of the operation. If volatile does not prevent reordering, you might embed the code in a function or use -O0 as optimization flag. | ||

| + | |||

| + | ===Standard LLVM intrinsics=== | ||

| + | {| class="wikitable" | ||

| + | ! style="font-weight: bold;" | Intrinsic name | ||

| + | ! style="font-weight: bold;" | Operation | ||

| + | ! style="font-weight: bold;" | Corresponding Instruction | ||

| + | |- | ||

| + | | vec16X __builtin_convertvector(vec16Y a, vec16X) | ||

| + | | It is is used to express generic vector type-conversion operations. The input vector and the output vector type must have the same number of elements. X can be equal to i32/f32, while Y can be equal to i32/f32 | ||

| + | | sext or | ||

| + | itof or | ||

| + | ftoi | ||

| + | |} | ||

| + | |||

| + | Conversions between vectors with the same number of elements can be performed using the LLVM intrisic __builtin_convertvector. Vector types v16i32, v16u32, v16f32 can be converted between each other. For instance: | ||

| + | |||

| + | #include <stdint.h> | ||

| + | int main (){ | ||

| + | vec16f32 a; | ||

| + | ... | ||

| + | vec16i32 d = __builtin_convertvector(a,vec16i32); | ||

| + | } | ||

| + | |||

| + | == Learning by Example == | ||

| + | ===Matrix Multiplication Multithreaded Example=== | ||

| + | |||

| + | The following code shows a multithread version of the matrix multiplication kernel running on a NPU core. The code spreads the output computation among all available threads. Since each output element computation is independent of others, the thread parallelism is achieved dividing the outer cycle of the function. Output matrix row calculations are equally spanned across all cores and further spanned across threads. For each thread, the function first calculates the portion of the output matrix to compute at the core level: <code>N / CORE_NUMB</code>, with <code>N</code> the dimension of the matrix and <code>CORE_NUMB</code> the number of NPU core in the system. | ||

| + | Then, each thread starts computing the outer loop with <code>start_loop = (core_id * N / CORE_NUMB) + thread_id</code> the portion of output matrix to compute is multiplied to the running core ID (<code>core_id</code>) and added to the running thread ID (<code>thread_id</code>), and each iteration increments by the number of threads in the core <code>THREAD_NUMB</code>. | ||

| + | |||

| + | void matrix_mult(const int a[N][N], const int b[N][N], int mult[N][N], int core_id, int thread_id) { | ||

| + | int start_loop = (core_id * N / CORE_NUMB) + thread_id; | ||

| + | int end_loop = N / CORE_NUMB * (core_id + 1); | ||

| + | |||

| + | for (int i = start_loop; i < end_loop; i += THREAD_NUMB){ | ||

| + | for (int j = 0; j < N; j++) | ||

| + | for (int k = 0; k < N; k++) | ||

| + | mult[i][j] += a[i][k] * b[k][j]; | ||

| + | } | ||

| + | } | ||

| + | |||

| + | Parameters <code>core_id</code> and <code>thread_id</code> are passed by the main function, and fetched from NPU control registers through builtins: | ||

| + | |||

| + | #define CORE_ID __builtin_npu_read_control_reg(0) | ||

| + | #define THREAD_ID __builtin_npu_read_control_reg(2) | ||

| + | |||

| + | In this way, each thread in each core computes a portion of the output matrix concurrently to the others. | ||

| + | |||

| + | The final result is ready when all thread over the system ended the assigned task, a system synchronization is required and in the main function this is achieved using barrier builtin provided by the programming model: | ||

| + | __builtin_npu_barrier(42, CORE_NUMB * THREAD_NUMB - 1); | ||

| + | The builtin above synchronizes <code>CORE_NUMB * THREAD_NUMB</code> number of threads (total number of thread running in the system) on the ID 42. When all threads hit the barrier the output matrix is ready, although most of it could be in private L1 caches. The next step is to ''flush'' output lines into the main memory: | ||

| + | if (THREAD_ID == 0 && CORE_ID == 0) { | ||

| + | for (int i = 0; i < N*N; i += 64 / sizeof(int)) { | ||

| + | __builtin_npu_flush((int) &C[i / N][i % N]); | ||

| + | } | ||

| + | __builtin_npu_write_control_reg(N*N, 12); // For cosimulation purpose | ||

| + | } | ||

| + | Generally, flush operations are performed by a thread, in this case the first thread in the first core calls the flush builtin which sends output results still in L1 caches to the main memory. | ||

| + | |||

| + | The full code for the main function is attached below: | ||

| + | |||

| + | #define CORE_ID __builtin_npu_read_control_reg(0) | ||

| + | #define THREAD_ID __builtin_npu_read_control_reg(2) | ||

| + | |||

| + | int main(){ | ||

| + | init_matrix(A); | ||

| + | init_matrix(B); | ||

| + | |||

| + | matrix_mult(A, B, C, CORE_ID, THREAD_ID); | ||

| + | __builtin_npu_barrier(CORE_ID + 1, THREAD_NUMB - 1); | ||

| + | |||

| + | if (THREAD_ID == 0 && CORE_ID == 0) { | ||

| + | for (int i = 0; i < N*N; i += 64 / sizeof(int)) { | ||

| + | __builtin_npu_flush((int) &C[i / N][i % N]); | ||

| + | } | ||

| + | __builtin_npu_write_control_reg(N*N, 12); // For cosimulation purpose | ||

| + | } | ||

| + | |||

| + | return (int)&C; | ||

| + | } | ||

| + | |||

| + | |||

| + | ===Matrix Multiplication Vectorial Example=== | ||

| + | |||

| + | On the other hand, the vectorial version of the matrix multiplication function computes the output matrix in a SIMD multithreading fashion. Both input and output matrices are organized in target specific vector types, becoming vectors of vectors. Such an organization results in a distribution of the N column partial results on the 16 hardware lanes; each thread calculates N partial results every cycle. The size of the matrix must be multiple of 16. | ||

| + | |||

| + | void kernel_function(vec16i32 *A, vec16i32 *B, vec16i32 *C, int N) { | ||

| + | uint32_t coreId = __builtin_npu_read_control_reg(0); | ||

| + | uint32_t threadId = __builtin_npu_read_control_reg(2); | ||

| + | uint32_t nT = 2; // number of threads | ||

| + | uint32_t nL = 16; // number of lanes | ||

| + | uint32_t nC = N/nL; | ||

| + | uint32_t ndivnT = N/nT; | ||

| + | uint32_t tIdndivnT = threadId*ndivnT; | ||

| + | uint32_t tIdndivnTnC = tIdndivnT*nC; | ||

| + | |||

| + | for (uint32_t i = coreId; i < ndivnT*nC; i+=CORE_NUMB){ | ||

| + | uint32_t col = (tIdndivnT+i)%nC; | ||

| + | C[tIdndivnTnC+i] = 0; | ||

| + | for (uint32_t j = 0; j < nC; j++){ | ||

| + | for (uint32_t k = 0; k < nL; k++){ | ||

| + | C[tIdndivnTnC+i] += A[tIdndivnTnC+i-col+j][k] * B[(nC*k)+(j*N)+col]; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

| + | Please note, the <code> C[tIdndivnTnC+i] += A[tIdndivnTnC+i-col+j][k] * B[(nC*k)+(j*N)+col] </code> performs 16 operations on 16 different data at time. The organization of the code and the thread parallelization are equivalent to its scalar version. | ||

| + | |||

| + | === How to compile a kernel === | ||

| + | Currently, the NaplesPU toolchain is released with some example kernels located in the ''npu/software/kernels'' folder. We provide makefiles to compile these kernels for NaplesPU. In case you want to add a new kernel, it is suggested to copy a kernel folder and replace C/CPP files with your own source code. Then, remember to modify the makefile updating the SRCS variable with the current main C/CPP filenames. | ||

| + | |||

| + | = OpenCL support for NaplesPU = | ||

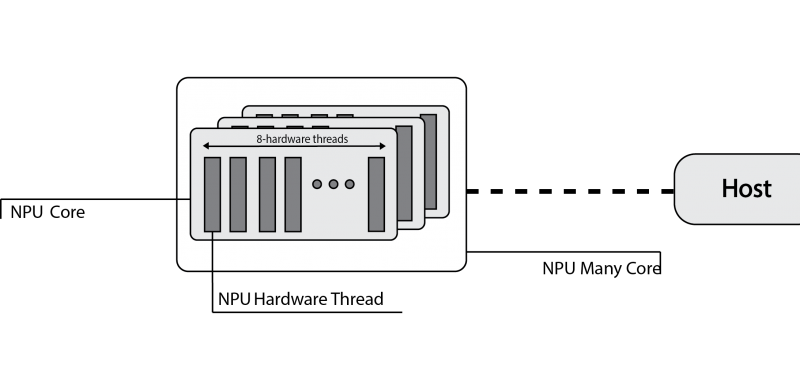

OpenCL defines a platform as a set of computing devices on which the host is connected to. Each device is further divided into several compute units (CUs), each of them defined as a collection of processing elements (PEs). | OpenCL defines a platform as a set of computing devices on which the host is connected to. Each device is further divided into several compute units (CUs), each of them defined as a collection of processing elements (PEs). | ||

Recall that the target platform is architecturally designed around a single core, structured in terms of a set of at most eight hardware threads. Each hardware threads competes with each other to have access to sixteen hardware lanes, to execute both scalar and vector operations on 32-bit wide integer or floating-point operands. | Recall that the target platform is architecturally designed around a single core, structured in terms of a set of at most eight hardware threads. Each hardware threads competes with each other to have access to sixteen hardware lanes, to execute both scalar and vector operations on 32-bit wide integer or floating-point operands. | ||

| − | [[File:Plat | + | [[File:Plat model_new.png|800px]] |

| − | The computing device abstraction is physically mapped on the | + | The computing device abstraction is physically mapped on the NaplesPU many-core architecture. The NaplesPU many-core can be configured in terms of the number of cores. Each NPU core maps on the OpenCL Compute Unit. Internally, the NPU core is composed of hardware threads, each of them represents the abstraction of the OpenCL processing element. |

| − | + | ==Execution Model Matching== | |

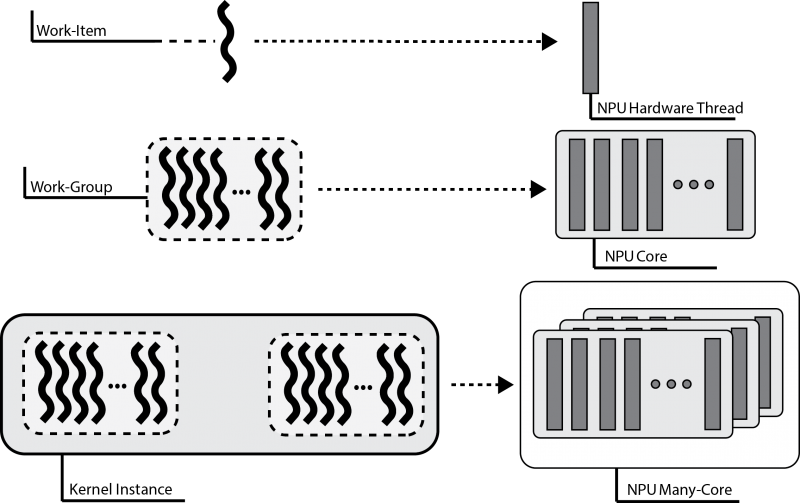

| − | From the execution model point of view, OpenCL relies on an N-dimensional index space, where each point represents a kernel instance execution. Since the physical kernel instance execution is done by the hardware threads, the OpenCL work-item is mapped onto | + | From the execution model point of view, OpenCL relies on an N-dimensional index space, where each point represents a kernel instance execution. Since the physical kernel instance execution is done by the hardware threads, the OpenCL work-item is mapped onto an NPU single hardware-thread. Consequently, a work-group is defined as a set of hardware threads, and all work-items in a work-group executes on a single computing unit. |

| − | [[File:Execution | + | [[File:Execution model_new.png|800px]] |

| − | + | ==Memory Model Matching== | |

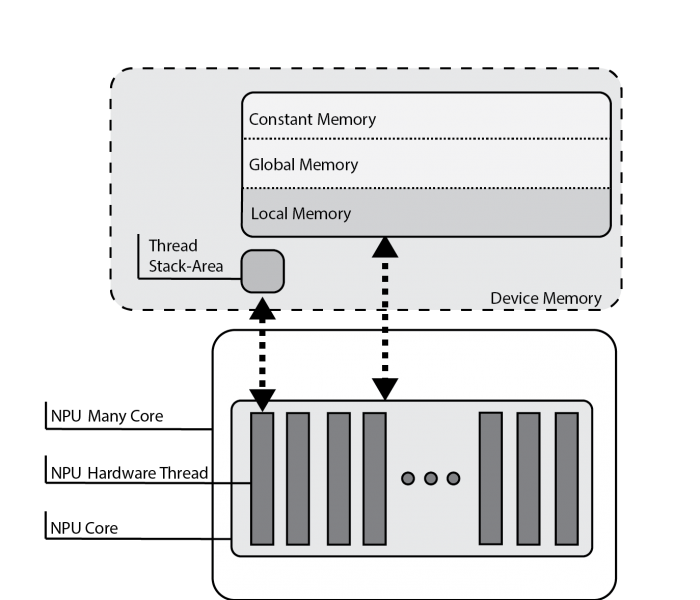

OpenCL partitions the memory in four different spaces: | OpenCL partitions the memory in four different spaces: | ||

| Line 23: | Line 458: | ||

The target-platform is provided of a DDR memory, that is the device memory in OpenCL nomenclature. Consequently, variables are physically mapped on this memory. The compiler itself, by looking at the address-space qualifier, verifies the OpenCL constraints are satisfied. | The target-platform is provided of a DDR memory, that is the device memory in OpenCL nomenclature. Consequently, variables are physically mapped on this memory. The compiler itself, by looking at the address-space qualifier, verifies the OpenCL constraints are satisfied. | ||

| − | Each | + | Each NPU core is also equipped with a [[Scratchpad unit|Scratchpad Memory]], an on-chip non-coherent memory portion exclusive to each core. This memory is compliant with the OpenCL local memory features |

| − | [[File:Memory | + | [[File:Memory mod_new.png|700px]] |

| − | Finally, each hardware thread within the | + | Finally, each hardware thread within the NPU core has a private stack. This memory section is private to each hardware thread, that is the OpenCL work-item, and cannot be addressed by others. As a result, each stack acts as the OpenCL private memory. |

| − | + | ==Programming Model Matching== | |

OpenCL supports two kinds of programming models, data- and task-parallel. A '''data-parallel''' model requires that each point of the OpenCL index space executes a kernel instance. Since each point represents a work-item and these are mapped on hardware threads, the data-parallel requirements are correctly satisfied. Please note that the implemented model is a relaxed version, without requiring a strictly one-to-one mapping on data. | OpenCL supports two kinds of programming models, data- and task-parallel. A '''data-parallel''' model requires that each point of the OpenCL index space executes a kernel instance. Since each point represents a work-item and these are mapped on hardware threads, the data-parallel requirements are correctly satisfied. Please note that the implemented model is a relaxed version, without requiring a strictly one-to-one mapping on data. | ||

| − | A '''task-parallel''' programming model requires kernel instances are independently executed in any point of the index space. In this case, each work-item is not constrained to execute the same kernel instance of others. The compiler frontend defines a set of builtins that may be used for this purpose. Moreover, each | + | A '''task-parallel''' programming model requires kernel instances are independently executed in any point of the index space. In this case, each work-item is not constrained to execute the same kernel instance of others. The compiler frontend defines a set of builtins that may be used for this purpose. Moreover, each NPU core is built of 16 hardware lanes, useful to realise a lock-step execution. Consequently, OpenCL support is realised to allow the usage of vector types. As a result, the vector execution is supported for the following data types: |

* charn, ucharn, are respectively mapped on vec16i8 and vec16u8, where n=16. Other values of n are not supported. | * charn, ucharn, are respectively mapped on vec16i8 and vec16u8, where n=16. Other values of n are not supported. | ||

| Line 52: | Line 487: | ||

== OpenCL Example == | == OpenCL Example == | ||

| + | |||

| + | The following code shows a vectorial matrix multiplication in OpenCL running on the NPU device. | ||

#include <opencl_stdlib.h> | #include <opencl_stdlib.h> | ||

#define WORK_DIM 4 | #define WORK_DIM 4 | ||

| − | + | ||

__kernel void kernel_function(__global int16 *A, __global int16 *B, __global int16 *C, int rows, int cols) | __kernel void kernel_function(__global int16 *A, __global int16 *B, __global int16 *C, int rows, int cols) | ||

{ | { | ||

| Line 61: | Line 498: | ||

uint32_t nT = WORK_DIM; // number of threads | uint32_t nT = WORK_DIM; // number of threads | ||

| − | uint32_t nL = 16; | + | uint32_t nL = 16; // number of lanes |

uint32_t N = rows; | uint32_t N = rows; | ||

Latest revision as of 17:23, 25 July 2019

Contents

NaplesPU Programming Model

SIMD Support

Arithmetic operators (+, -, *, /, %), relational operators (==, !=, <, <=, >, >=), bitwise operators (&, |, ^, ~, <<, >>), logical operators (&&, ||, !) and assignment operators (=, +=, -=, *=, /=, %=, <<=, >>=, &=, ^=, |=) can be used with scalar and vector types and produce a scalar or vector signed integer result respectively. In some cases, mixed scalar/vector operations are possible. In such a cases, the scalar is considered as a vector with all elements equal to the scalar value.

For instance, to add two vectors:

#include <stdint.h>

int main (){

vec16i32 a;

vec16i32 b;

…

vec16i32 c = a+b;

}

or a vector with a scalar:

#include <stdint.h>

int main (){

vec16i32 a;

int b;

…

vec16i32 c = a+b;

}

In order to access vector elements, it is possible to use the operator []. For instance

#include <stdint.h>

int main (){

vec16i32 a;

// assign some values:

for (int i=0; i<16; i++) a[i]=i;

int sum = 0;

// calculate sum

for (int i=0; i<16; i++) sum += a[i];

}

Vectors can be initialized using curly bracket syntax. For instance, a constant vector:

#include <stdint.h>

int main (){

const vec16i32 a = { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15 };

}

or a non-constant vector

#include <stdint.h>

int main (){

int x, y, z;

...

vec16i32 a = { x, y, z, x, y, z, x, y, z, x, y, z, x, y, z, x};

}

Conversions between vectors with the same number of elements can be performed using the LLVM intrisic __builtin_convertvector. Vector types v16i32, v16u32, v16f32 can be converted between each other. Similarly, vector types v8i64, v8u64, v8f64can be converted between each other. For instance:

#include <stdint.h>

int main (){

vec16f32 a;

...

vec16i32 d = __builtin_convertvector(a,vec16i32);

}

It is also possible to convert floating point vectors with a different number of elements. In such a case, it is required to use the two NPU intrinsics __builtin_npu_v8f64tov16f32 or __builtin_npu_v16f32tov8f64. The first one converts 8 double precision FP elements into 8 single precision FP elements that are placed in the first 8 elements of a v16f32 vector. The second one converts the first 8 single precision FP elements of a v16f32 vector into 8 double precision FP elements. For instance:

#include <stdint.h>

int main (){

vec16f32 a;

...

vec8f64 b = __builtin_npu_v16f32tov8f64(a);

}

Vector comparisons can be done in two different ways. It is possible to use the traditional relational operators that result in another vector type of the same size of the two vectors. For instance:

#include <stdint.h>

int main (){

vec16i32 a;

vec16i32 b;

…

vec16i32 c = a < b;

}

After executing the above code, the vector c elements will be equal to 0xFFFFFFFF or 0x00000000 according to the result of the comparison. In addition, we also provide vector comparison intrinsics, as follow:

#include <stdint.h>

int main (){

vec16i32 a;

vec16i32 b;

…

int c = __builtin_npu_mask_cmpi32_slt (a, b)

}

After executing the above code, the integer c will contain a bitmap that can be directly used to write the mask register if required. Using vector comparison intrinsics is the natural way of performing comparisons in NaplesPU.

Note that, in NaplesPU, all instructions are masked and at the beginning, all lanes are enabled. If you want to handle SIMD control flow, you need to explicitly take care of masking operations so that they are only applied to some elements. For instance, take a look at the above code:

#include <stdint.h>

int main (){

vec16i32 a;

vec16i32 b;

…

int c = __builtin_npu_mask_cmpi32_slt (a, b) //generate mask for a<b

int rm_old = __builtin_npu_read_mask_reg(); //save mask register

__builtin_npu_write_mask_reg(c); //write mask register for a<b

do_something();

c = c^-1; //generate mask for a greater equal b

__builtin_npu_write_mask_reg(c); //write mask register for a greater equal b

do_somethingelse();

__builtin_npu_write_mask_reg(rm_old); //restore the old mask

}

Thread Parallelism

In a NPU core each thread runs the same code provided by the user, through builtins developers can parallelize it or differentiate flows. Each thread has a private stack, while the memory system is distributed and all threads have the same view of the main memory. User can differentiate threads flow based on the running thread ID, as described in control register each thread can read its ID from the control register through the __builtin_npu_read_control_reg accessing to the needed control register (for fetching thread ID use 2, for core ID use 0 or 1):

#define CORE_ID __builtin_npu_read_control_reg(0) #define THREAD_ID __builtin_npu_read_control_reg(2)

In the current release, each tile is equipped with 1 NPU core, hence each core ID overlaps with its tile ID.

Thread Synchronization

NaplesPU supports barrier synchronization among threads. The programmer needs to know the number of threads that require to synchronize, i.e. NumberOfThreads. For each synchronization, there is a unique barrier ID. The NaplesPU intrinsic __builtin_npu_barrier(BarrierID, NumberOfThreads-1) takes care of the synchronization. It is possible to exploit a maximum number of barriers equal to Bmax, i.e. 4 x number of threads. Barrier IDs range from 0 to Bmax-1. Note that, same barrier ID cannot be used in different kernels and can be used multiple times inside a kernel only by the same threads or by a subset of them.

In the following example, we have four threads and two barriers. After performing some operations, all threads synchronize on barrier 1. Then, only threads 0 and 1 synchronize on barrier 2. Remember that, in the main code, the user has to provide the total number of synchronizing threads:

#include <stdint.h>

static vec16i32 C[4];

static vec16i32 D[2];

const vec16i32 A[4]={{...} ,{...},{...}, {...}};

const vec16i32 B[4]={{...},{...},{...}, {...}};

int main(){

//execution thread 0,1,2,3

int threadId = __builtin_npu_read_control_reg(2);

C[threadId] = A[threadId] + B[threadId];

__builtin_npu_barrier(1,3);//Synchronization Threads:0,1,2,3.

if(threadId<2){

//execution thread 0,1

D[threadId]=C[threadId*2]+C[(threadId*2)+1];

__builtin_npu_barrier(2,1);//Synchronization Threads:0,1.

}

if(threadId==0){

D[threadId]=D[threadId]+D[threadId+1];

__builtin_npu_flush((int)(&D[threadId]));

}

return 0;

}

NaplesPU Other Aspects

Flush instruction

The NaplesPU ISA has a flush instruction that is required to avoid data to keep stuck in the cache. It is mandatory to use this instruction in case of output data that is required from the host. Otherwise, the host will read data from the main memory that is not coherent with the cache. The flush instruction takes in input the address of the involved variable and flushes an entire 512-bit cache line. Please remember to cast to integer the address, otherwise you will see the following error: “cannot initialize a parameter of type 'int' with an rvalue of type (YOUR VARIABLE TYPE)*'”. For instance, take a look at the above code:

#include <stdint.h>

int main (){

vec16i32 a;

vec16i32 b;

…

vec16i32 c = a + b;

__builtin_npu_flush((int)&c);

}

Please, remember that the flush instruction works for a single 512-bit cache line. As a consequence, multiple flush instructions are required in case of variable types bigger than 512 bits.

Scratchpad memory

In addition to traditional main memory, a NPU core supports a scratchpad memory with a different address space. In order to use the scratchpad memory, the __scratchpad keyword should be used when declaring a variable. Note that just a global variable can be placed in the scratchpad memory. For instance, take a look at the above code:

#include <stdint.h>

__scratchpad int a;

int main (){

...

}

The compiler will recognize the keyword and the integer a variable will be placed in the scratchpad using appropriate load/store instructions.

Barrier Instruction

NPU supports barrier synchronization between threads within the same core or among different tiles. The programmer needs to know the number of threads that require to synchronize, i.e. NumberOfThreads. For each synchronization there is an unique barrier ID. The intrinsic __builtin_npu_barrier(BarrierID, NumberOfThreads-1) takes care of the synchronization. It is possible to exploit a maximum number of barriers equal to Bmax, i.e. 4 x number of threads. Barrier IDs range from 0 to Bmax-1. Note that the same barrier ID cannot be used in different kernels and can be used multiple times inside a kernel only by the same threads or by a subset of them.

In the following example, we have four threads and two barriers. After performing some execution, all threads synchronize on barrier 1. Then, only threads 0 and 1 synchronize on barrier 2. Remember that, on the host side, it is required to initialize barrier 0 with Count=4 and barrier 1 with Count=2.

#include <stdint.h>

static vec16i32 C[4];

static vec16i32 D[2];

const vec16i32 A[4]={{...} ,{...},{...}, {...}};

const vec16i32 B[4]={{...},{...},{...}, {...}};

int main(){

//execution thread 0,1,2,3

int threadId = __builtin_npu_read_control_reg(2);

C[threadId] = A[threadId] + B[threadId];

__builtin_npu_barrier(1,3); //Synchronization Threads:0,1,2,3.

if(threadId<2){

//execution thread 0,1

D[threadId]=C[threadId*2]+C[(threadId*2)+1];

__builtin_npu_barrier(2,1);//Synchronization Threads:0,1.

}

if(threadId==0){

D[threadId]=D[threadId]+D[threadId+1];

__builtin_npu_flush((int)(&D[threadId]));

}

return 0;

}

NaplesPU Intrinsics

NaplesPU Misc Intrinsics

Main NaplesPU builtins are summarized in the following table:

| Intrinsic name (when using these intrinsics you should add “__builtin_npu_” as prefix ) | Operation | Corresponding Instruction |

|---|---|---|

| void barrier (int a, int b) | Refer to the description of the barrier instruction. a contains the barrier ID, while b contains the number of threads that should synchronize-1 | barrier |

| void flush (int a) | Flush a cache line to the main memory. a contains the memory address of the cache line. Explicit integer conversion is required. | flush |

| int createmaskv16i32 (v16i32 a) | Convert the vector a that is made of all elements equal to 0 or -1 into a 32-bit mask value that can be written in the mask register. It can be used to compute the bitmask when a vector comparison operation is performed using the common C/C++ relational operators. | crt_maskv16 |

| void write_mask_reg(int a) | Write a 32-bit bitmask inside the mask register | move |

| void write_mask_regv16i32 (v16i32 a) | Write a 512-bit vector mask inside the mask register. | crt_maskv16 + move |

| int read_mask_reg () | Read a 32-bit bitmask from the mask register. | move |

| void write_control_reg (int a, int b) | Write values to the mask register. The integer a contains the ID of the sub-register to access, while the integer b contains the data. | write_cr |

| int read_control_reg (int a) | Read values from the mask register. The integer a contains the ID of the sub-register to access. | read_cr |

NaplesPU Vector intrinsics

| Intrinsic name (when using these intrinsics you should add “__builtin_npu_” as prefix ) | Operation | Corresponding Instruction |

|---|---|---|

| vec16i32 makevectori32 (int a) | Create a vector of 16 elements whose elements are all equal to a | move_i32 |

| vec16f32 makevectorf32 (float a) | Create a vector of 16 elements whose elements are all equal to a | move_i32 |

| vec16i32 shufflei32 (vec16i32 a, vec16i32 b) or vec16i32 shufflef32 (vec16f32 a, vec16i32 b) | Vector Shuffle - it allows elements of vector a to be copied to the output vector in different positions. The elements in vector b specify, for each corresponding position in the destination register, the indexes of the elements in the source vector register. (see the description of the shuffle instruction) | shuffle_i32 or shuffle_f32 |

| int mask_cmp'w'32_'xyz' (vec16'w'32 a, vec16'w'32 b) | Return an integer bitmask where the i-th bit is equal to one if

(a[i] comp b[i]) is true. Otherwise, the i-th bit is equal to zero. w = type of compare: i for interger and f for float. x = s if signed or u otherwise. yz = traditional compare conditions, namely: gt, ge, lt, le |

cmp'xyz'_'w'32 |

| int mask_cmp'w'32_eq (vec16'w'32 a, vec16'w'32 b) | Return an integer bitmask where the i-th bit is equal to one if

(a[i] comp b[i]) is true. Otherwise, the i-th bit is equal to zero. w = type of compare: i for interger and f for float. |

cmpeq_'w'32 |

| int mask_cmp'w'32_ne (vec16'w'32 a, vec16'w'32 b) | Return an integer bitmask where the i-th bit is equal to one if

(a[i] comp b[i]) is true. Otherwise, the i-th bit is equal to zero. w = type of compare: i for interger and f for float. |

cmpne_'w'32 |

Vector comparisons can be done in two different ways. It is possible to use the traditional relational operators that result in another vector type of the same size of the two vectors. For instance:

#include <stdint.h>

int main (){

vec16i32 a;

vec16i32 b;

…

vec16i32 c = a < b;

}

After executing the above code, the vector c elements will be equal to 0xFFFFFFFF or 0x00000000 according to the result of the comparison. In addition, we also provide vector comparison intrinsics, as follow:

#include <stdint.h>

int main (){

vec16i32 a;

vec16i32 b;

…

int c = __builtin_npu_mask_cmpi32_slt (a, b)

}

After executing the above code, the integer c will contain a bitmap that can be directly used to write the mask register if required. Using vector comparison intrinsics is the natural way of performing comparisons in NPU.

Note that, in NPU, all vector operations are masked and after the boot all hardware lanes are enabled. If you want to handle SIMD control flow, you need to explicitly take care of masking operations so that they are only applied to some elements. For instance, take a look to the above code:

#include <stdint.h>

int main (){

volatile vec16i32 a;

volatile vec16i32 b;

…

int c = __builtin_npu_mask_cmpi32_slt (a, b) //generate mask for a lt b

int rm_old = __builtin_npu_read_mask_reg(); //save mask register

__builtin_npu_write_mask_reg(c); //write mask register for a lt b

do_something();

c = c^-1; //generate mask for a ge b

__builtin_npu_write_mask_reg(c); //write mask register for a ge b

do_somethingelse();

__builtin_npu_write_mask_reg(rm_old); //restore the old mask

}

In those cases, it is important to prevent compiler optimizations of the involved vectors and variable marking them as volatile. The compiler tends to rearrange operation, and it is good practice to double-check the compiler objdumb in order to check the order of the operation. If volatile does not prevent reordering, you might embed the code in a function or use -O0 as optimization flag.

Standard LLVM intrinsics

| Intrinsic name | Operation | Corresponding Instruction |

|---|---|---|

| vec16X __builtin_convertvector(vec16Y a, vec16X) | It is is used to express generic vector type-conversion operations. The input vector and the output vector type must have the same number of elements. X can be equal to i32/f32, while Y can be equal to i32/f32 | sext or

itof or ftoi |

Conversions between vectors with the same number of elements can be performed using the LLVM intrisic __builtin_convertvector. Vector types v16i32, v16u32, v16f32 can be converted between each other. For instance:

#include <stdint.h>

int main (){

vec16f32 a;

...

vec16i32 d = __builtin_convertvector(a,vec16i32);

}

Learning by Example

Matrix Multiplication Multithreaded Example

The following code shows a multithread version of the matrix multiplication kernel running on a NPU core. The code spreads the output computation among all available threads. Since each output element computation is independent of others, the thread parallelism is achieved dividing the outer cycle of the function. Output matrix row calculations are equally spanned across all cores and further spanned across threads. For each thread, the function first calculates the portion of the output matrix to compute at the core level: N / CORE_NUMB, with N the dimension of the matrix and CORE_NUMB the number of NPU core in the system.

Then, each thread starts computing the outer loop with start_loop = (core_id * N / CORE_NUMB) + thread_id the portion of output matrix to compute is multiplied to the running core ID (core_id) and added to the running thread ID (thread_id), and each iteration increments by the number of threads in the core THREAD_NUMB.

void matrix_mult(const int a[N][N], const int b[N][N], int mult[N][N], int core_id, int thread_id) {

int start_loop = (core_id * N / CORE_NUMB) + thread_id;

int end_loop = N / CORE_NUMB * (core_id + 1);

for (int i = start_loop; i < end_loop; i += THREAD_NUMB){

for (int j = 0; j < N; j++)

for (int k = 0; k < N; k++)

mult[i][j] += a[i][k] * b[k][j];

}

}

Parameters core_id and thread_id are passed by the main function, and fetched from NPU control registers through builtins:

#define CORE_ID __builtin_npu_read_control_reg(0) #define THREAD_ID __builtin_npu_read_control_reg(2)

In this way, each thread in each core computes a portion of the output matrix concurrently to the others.

The final result is ready when all thread over the system ended the assigned task, a system synchronization is required and in the main function this is achieved using barrier builtin provided by the programming model:

__builtin_npu_barrier(42, CORE_NUMB * THREAD_NUMB - 1);

The builtin above synchronizes CORE_NUMB * THREAD_NUMB number of threads (total number of thread running in the system) on the ID 42. When all threads hit the barrier the output matrix is ready, although most of it could be in private L1 caches. The next step is to flush output lines into the main memory:

if (THREAD_ID == 0 && CORE_ID == 0) {

for (int i = 0; i < N*N; i += 64 / sizeof(int)) {

__builtin_npu_flush((int) &C[i / N][i % N]);

}

__builtin_npu_write_control_reg(N*N, 12); // For cosimulation purpose

}

Generally, flush operations are performed by a thread, in this case the first thread in the first core calls the flush builtin which sends output results still in L1 caches to the main memory.

The full code for the main function is attached below:

#define CORE_ID __builtin_npu_read_control_reg(0)

#define THREAD_ID __builtin_npu_read_control_reg(2)

int main(){

init_matrix(A);

init_matrix(B);

matrix_mult(A, B, C, CORE_ID, THREAD_ID);

__builtin_npu_barrier(CORE_ID + 1, THREAD_NUMB - 1);

if (THREAD_ID == 0 && CORE_ID == 0) {

for (int i = 0; i < N*N; i += 64 / sizeof(int)) {

__builtin_npu_flush((int) &C[i / N][i % N]);

}

__builtin_npu_write_control_reg(N*N, 12); // For cosimulation purpose

}

return (int)&C;

}

Matrix Multiplication Vectorial Example

On the other hand, the vectorial version of the matrix multiplication function computes the output matrix in a SIMD multithreading fashion. Both input and output matrices are organized in target specific vector types, becoming vectors of vectors. Such an organization results in a distribution of the N column partial results on the 16 hardware lanes; each thread calculates N partial results every cycle. The size of the matrix must be multiple of 16.

void kernel_function(vec16i32 *A, vec16i32 *B, vec16i32 *C, int N) {

uint32_t coreId = __builtin_npu_read_control_reg(0);

uint32_t threadId = __builtin_npu_read_control_reg(2);

uint32_t nT = 2; // number of threads

uint32_t nL = 16; // number of lanes

uint32_t nC = N/nL;

uint32_t ndivnT = N/nT;

uint32_t tIdndivnT = threadId*ndivnT;

uint32_t tIdndivnTnC = tIdndivnT*nC;

for (uint32_t i = coreId; i < ndivnT*nC; i+=CORE_NUMB){

uint32_t col = (tIdndivnT+i)%nC;

C[tIdndivnTnC+i] = 0;

for (uint32_t j = 0; j < nC; j++){

for (uint32_t k = 0; k < nL; k++){

C[tIdndivnTnC+i] += A[tIdndivnTnC+i-col+j][k] * B[(nC*k)+(j*N)+col];

}

}

}

}

Please note, the C[tIdndivnTnC+i] += A[tIdndivnTnC+i-col+j][k] * B[(nC*k)+(j*N)+col] performs 16 operations on 16 different data at time. The organization of the code and the thread parallelization are equivalent to its scalar version.

How to compile a kernel

Currently, the NaplesPU toolchain is released with some example kernels located in the npu/software/kernels folder. We provide makefiles to compile these kernels for NaplesPU. In case you want to add a new kernel, it is suggested to copy a kernel folder and replace C/CPP files with your own source code. Then, remember to modify the makefile updating the SRCS variable with the current main C/CPP filenames.

OpenCL support for NaplesPU

OpenCL defines a platform as a set of computing devices on which the host is connected to. Each device is further divided into several compute units (CUs), each of them defined as a collection of processing elements (PEs). Recall that the target platform is architecturally designed around a single core, structured in terms of a set of at most eight hardware threads. Each hardware threads competes with each other to have access to sixteen hardware lanes, to execute both scalar and vector operations on 32-bit wide integer or floating-point operands.

The computing device abstraction is physically mapped on the NaplesPU many-core architecture. The NaplesPU many-core can be configured in terms of the number of cores. Each NPU core maps on the OpenCL Compute Unit. Internally, the NPU core is composed of hardware threads, each of them represents the abstraction of the OpenCL processing element.

Execution Model Matching

From the execution model point of view, OpenCL relies on an N-dimensional index space, where each point represents a kernel instance execution. Since the physical kernel instance execution is done by the hardware threads, the OpenCL work-item is mapped onto an NPU single hardware-thread. Consequently, a work-group is defined as a set of hardware threads, and all work-items in a work-group executes on a single computing unit.

Memory Model Matching

OpenCL partitions the memory in four different spaces:

- the global and constant spaces: elements in those spaces are accessible by all work-items in all work-group.

- the local space: visible only to work-items within a work-group.

- the private space: visible to only a single work-item.

The target-platform is provided of a DDR memory, that is the device memory in OpenCL nomenclature. Consequently, variables are physically mapped on this memory. The compiler itself, by looking at the address-space qualifier, verifies the OpenCL constraints are satisfied.

Each NPU core is also equipped with a Scratchpad Memory, an on-chip non-coherent memory portion exclusive to each core. This memory is compliant with the OpenCL local memory features

Finally, each hardware thread within the NPU core has a private stack. This memory section is private to each hardware thread, that is the OpenCL work-item, and cannot be addressed by others. As a result, each stack acts as the OpenCL private memory.

Programming Model Matching

OpenCL supports two kinds of programming models, data- and task-parallel. A data-parallel model requires that each point of the OpenCL index space executes a kernel instance. Since each point represents a work-item and these are mapped on hardware threads, the data-parallel requirements are correctly satisfied. Please note that the implemented model is a relaxed version, without requiring a strictly one-to-one mapping on data.

A task-parallel programming model requires kernel instances are independently executed in any point of the index space. In this case, each work-item is not constrained to execute the same kernel instance of others. The compiler frontend defines a set of builtins that may be used for this purpose. Moreover, each NPU core is built of 16 hardware lanes, useful to realise a lock-step execution. Consequently, OpenCL support is realised to allow the usage of vector types. As a result, the vector execution is supported for the following data types:

- charn, ucharn, are respectively mapped on vec16i8 and vec16u8, where n=16. Other values of n are not supported.

- shortn, ushortn, are respectively mapped on vec16i16 and vec16i32, where n=16. Other values of n are not supported.

- intn, uintn, are respectively mapped on vec16i32 and vec16u32, where n=16. Other values of n are not supported.

- floatn, mapped on vec16f32, where n=16. Other values of n are not supported.

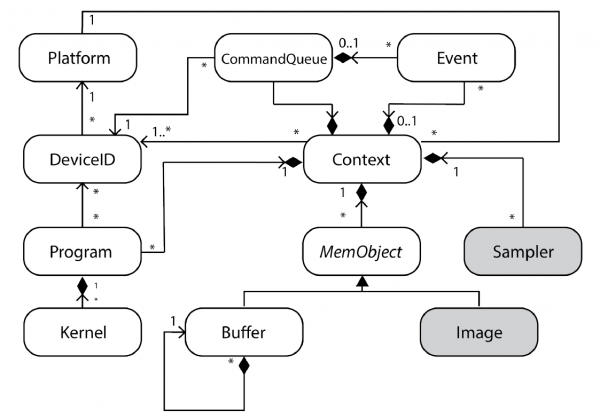

OpenCL Runtime Design

OpenCL APIs are a set of functions meant to coordinate and manage devices, those provide support for running applications and monitoring their execution. These APIs also provides a way to retrieve device-related information.

The following Figure depicts the UML Class diagram for the OpenCL Runtime as defined in the OpenCL specification. Grey-filled boxes represent not available features due to the absence of hardware support.

The custom OpenCL runtime relies on two main abstractions:

- Low-level abstractions, not entirely hardware dependent, provide device-host communication support.

- High-level abstractions, according to OpenCL APIs, administrate the life cycle of a kernel running onto the device.

OpenCL Example

The following code shows a vectorial matrix multiplication in OpenCL running on the NPU device.

#include <opencl_stdlib.h>

#define WORK_DIM 4

__kernel void kernel_function(__global int16 *A, __global int16 *B, __global int16 *C, int rows, int cols)

{

__private uint32_t threadId = get_local_id(0);

uint32_t nT = WORK_DIM; // number of threads

uint32_t nL = 16; // number of lanes

uint32_t N = rows;

uint32_t nC = N / nL;

uint32_t ndivnT = N / nT;

uint32_t tIdndivnT = threadId * ndivnT;

uint32_t tIdndivnTnC = tIdndivnT * nC;

for (uint32_t i = 0; i < ndivnT * nC; i++)

{

uint32_t col = (tIdndivnT + i) % nC;

C[tIdndivnTnC + i] = 0;

for (uint32_t j = 0; j < nC; j++)

{

for (uint32_t k = 0; k < nL; k++)

{

C[tIdndivnTnC + i] += A[tIdndivnTnC + i - col + j][k] * B[(nC * k) + (j * N) + col];

}

}

}

}