= Core =


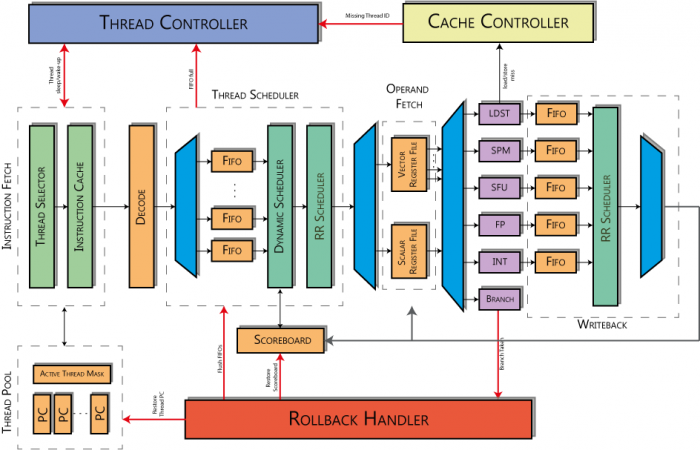

The core is a RISC in-order pipeline (as depicted in the figure below) whose control unit is intentionally kept lightweight. The architecture masks memory and operation latencies by relying heavily on hardware multithreading, and it achieves high computational performance through wide SIMD hardware support. By keeping the control logic light, the core devotes most of its resources to accelerating computation in highly data-parallel kernels. In the hardware-multithreaded NaplesPU architecture, each hardware thread has its own PC, register file, and control registers. The number of threads is user-configurable but, like the other configurable parameters, must be a power of two. An NPU hardware thread is equivalent to a wavefront in AMD terminology and to a warp in NVIDIA CUDA terminology. The processor uses a deep pipeline to improve clock speed.

[[File:Nup pipe.png|700px|NPU core microarchitecture]]

All threads share the same execution units, organized in a SIMD fashion. Execution pipelines are organized in hardware vector lanes (as in vector processors): each operator is replicated N times. Each thread can perform a SIMD operation on independent data, which are held in a vector register file. The core supports a high-throughput non-coherent scratchpad memory, or SPM (corresponding to shared memory in NVIDIA terminology). The SPM is divided into a parameterizable number of banks according to a user-configurable mapping function. The memory controller resolves bank collisions at run time, ensuring correct execution of SPM accesses from concurrent threads. The SPM is kept non-coherent because coherence mechanisms incur a high latency and are not strictly necessary for many applications.
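To make bank collisions concrete, the following Python behavioral sketch assumes a simple word-interleaved mapping (bank = word address modulo the number of banks) and banks that each serve one request per cycle. The real NPU mapping function is user-configurable, and NUM_BANKS, WORD_BYTES, bank_of and cycles_needed are hypothetical names chosen for illustration.

```python
# Behavioral sketch of SPM bank mapping and bank-conflict serialization.
# Assumptions (not taken from the NPU sources): word-interleaved mapping,
# 4-byte bank words, and one request served per bank per cycle.
from collections import Counter
from typing import List

NUM_BANKS = 16   # hypothetical bank count (the real one is parameterizable)
WORD_BYTES = 4   # hypothetical bank word width in bytes


def bank_of(addr: int) -> int:
    """Map a byte address to a bank using low-order word interleaving."""
    return (addr // WORD_BYTES) % NUM_BANKS


def cycles_needed(lane_addrs: List[int]) -> int:
    """Cycles to serve all lane requests if each bank serves one per cycle."""
    if not lane_addrs:
        return 0
    per_bank = Counter(bank_of(a) for a in lane_addrs)
    return max(per_bank.values())


# Unit stride: 16 consecutive words fall into 16 different banks.
stride1 = [4 * i for i in range(16)]
# Stride of 16 words: every lane maps to bank 0, so the accesses serialize.
stride16 = [64 * i for i in range(16)]
print(cycles_needed(stride1), cycles_needed(stride16))  # 1 16
```

Under this assumed mapping the strided pattern is the worst case; a different mapping function changes which access patterns conflict.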

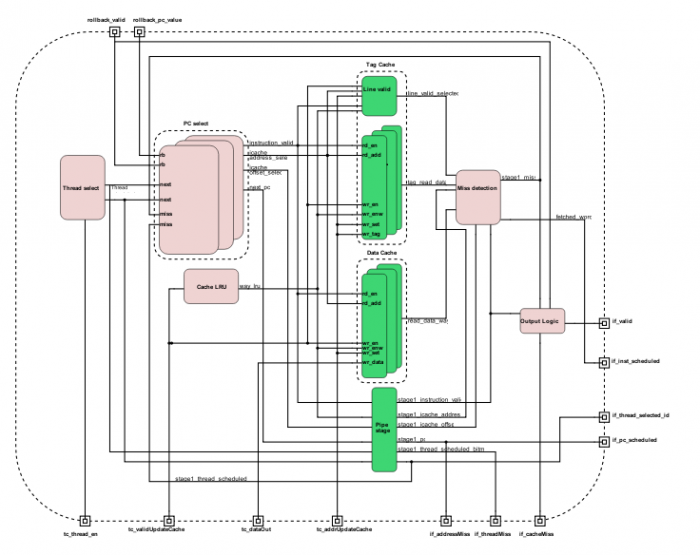

== Instruction Fetch Stage ==

The '''Instruction Fetch''' stage schedules the next thread PC from the pool of eligible threads, which is handled by the Thread Controller unit. Available threads are scheduled in a round-robin fashion, implementing a fine-grained thread scheduling model. Furthermore, at boot, the Thread Controller initializes each thread PC through a dedicated interface.

[[File:ifs.png|700px|Instruction Fetch Stage]]

| Line 13: | Line 13: | ||

The instruction cache is N-way set associative (by default it has 4 ways and 128 sets, with 512-bit lines). An SRAM is allocated for each instantiated way, so that the tags of all ways can be evaluated in parallel.
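With the default parameters, the address fields used for the lookup can be worked out as below. The sketch assumes 32-bit byte addresses, which this section does not state; split_address is an illustrative helper, not part of the RTL.

```python
# Default instruction cache geometry and address split (behavioral sketch).
# Assumption: 32-bit byte addresses; the real address width may differ.
from typing import Tuple

ADDRESS_BITS = 32
NUM_WAYS = 4
NUM_SETS = 128
LINE_BITS = 512
INSTRUCTION_BITS = 32  # the PC advances by 4 bytes per instruction

LINE_BYTES = LINE_BITS // 8                            # 64 bytes per line
INSTRUCTIONS_PER_LINE = LINE_BITS // INSTRUCTION_BITS  # 16 instructions
OFFSET_BITS = (LINE_BYTES - 1).bit_length()            # log2(64)  = 6
INDEX_BITS = (NUM_SETS - 1).bit_length()               # log2(128) = 7
TAG_BITS = ADDRESS_BITS - INDEX_BITS - OFFSET_BITS     # 32 - 7 - 6 = 19
CAPACITY_BYTES = NUM_WAYS * NUM_SETS * LINE_BYTES      # 32768 bytes (32 KiB)


def split_address(addr: int) -> Tuple[int, int, int]:
    """Split a byte address into its (tag, set index, line offset) fields."""
    offset = addr & (LINE_BYTES - 1)
    index = (addr >> OFFSET_BITS) & (NUM_SETS - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


print(split_address(0x2044))  # (1, 1, 4)
```

Each 512-bit line therefore holds 16 instructions, so a single miss brings in 16 consecutive instructions.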

Once an eligible thread is selected, Instruction Fetch reads its PC and determines whether the next instruction cache line is already in the instruction cache. This lookup is split into two stages. In the first stage, each way has a memory bank containing the tag values and validity information of the cache sets; this stage reads the requested set from all the ways in parallel and passes the data to the second stage. Since the tag memory has one cycle of latency, the result is handled by the next stage, which compares the tags read from each way with the request tag and raises a cache hit if they match. On a hit, the first stage issues the instruction cache data address to the instruction cache data memory. If a miss occurs, an instruction memory transaction is issued to the Network Interface (or to the Cache Controller in a Single Core instance), and the thread is blocked until the instruction line has been retrieved from main memory.

Finally, this module restores the PC in case of rollback: when a rollback occurs and the Rollback Handler stage asserts the rollback signals, the Instruction Fetch module overwrites the PC of the thread that issued the rollback.

=== Thread & PC selection ===

A thread is selected among the eligible ones using an external signal coming from the '''Thread Controller''' unit. In this module, an internal round-robin arbiter issues threads fairly, and a different thread is scheduled every clock cycle, as is typical of fine-grained multithreaded architectures.
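A round-robin arbiter of this kind can be modelled in a few lines of Python. This is a behavioral sketch with hypothetical names (RoundRobinArbiter, grant) of the fairness rule described above, not the NPU arbiter itself: the search for the next eligible thread starts just after the last granted one.

```python
# Behavioral sketch of a round-robin arbiter (not the NPU RTL).
from typing import List, Optional


class RoundRobinArbiter:
    """Grants one requester per call, starting the search after the last winner."""

    def __init__(self, num_requesters: int) -> None:
        self.n = num_requesters
        self.last = num_requesters - 1  # requester 0 has priority on the first call

    def grant(self, requests: List[bool]) -> Optional[int]:
        for step in range(1, self.n + 1):
            idx = (self.last + step) % self.n
            if requests[idx]:
                self.last = idx  # the winner gets the lowest priority next time
                return idx
        return None  # no eligible requester this cycle


arb = RoundRobinArbiter(4)
print([arb.grant([True, False, True, True]) for _ in range(4)])  # [0, 2, 3, 0]
```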

Once a thread is scheduled, its PC is fetched and updated according to the following events:

* In case of a cache hit, the instruction is fetched from the Instruction Cache and the PC is incremented by 4.
* In case of a cache miss, the thread is stalled, a memory transaction for the corresponding memory line is issued, and the PC value is restored. When the line has been fetched from main memory, the thread is rescheduled and these conditions are checked again.
* In case of a rollback of the scheduled thread, the PC is set by the rollback transaction and nothing is propagated to the next stage.

if ( tc_job_valid && thread_id == tc_job_thread_id ) // new job
// ...
next_pc[thread_id] <= next_pc[thread_id] + address_t'( 3'd4 );
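The three PC update cases listed above can be summarized by the following Python sketch. FetchEvent and update_pc are hypothetical names, and the 32-bit PC width is an assumption; the function returns the next PC together with whether an instruction is forwarded to Decode.

```python
# Behavioral sketch of the per-thread PC update in Instruction Fetch.
# Assumption: 32-bit PC; names are illustrative, not taken from the RTL.
from enum import Enum, auto
from typing import Optional, Tuple

PC_MASK = 0xFFFF_FFFF


class FetchEvent(Enum):
    HIT = auto()       # line found in the instruction cache
    MISS = auto()      # line must be fetched from main memory
    ROLLBACK = auto()  # Rollback Handler redirects the thread


def update_pc(pc: int, event: FetchEvent,
              rollback_pc: Optional[int] = None) -> Tuple[int, bool]:
    """Return (next PC, whether an instruction is forwarded to Decode)."""
    if event is FetchEvent.ROLLBACK:
        if rollback_pc is None:
            raise ValueError("a rollback needs a target PC")
        return rollback_pc & PC_MASK, False  # nothing propagated downstream
    if event is FetchEvent.MISS:
        return pc, False  # PC restored; the fetch is retried after the refill
    return (pc + 4) & PC_MASK, True  # hit: next 32-bit instruction


print(update_pc(0x100, FetchEvent.HIT))             # (260, True)
print(update_pc(0x100, FetchEvent.MISS))            # (256, False)
print(update_pc(0x100, FetchEvent.ROLLBACK, 0x40))  # (64, False)
```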

=== Cache Pseudo-LRU ===

The module described hereafter is a hardware implementation of the pseudo-LRU algorithm. For the full algorithm, please refer to the following [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.217.3594&rep=rep1&type=pdf link], page 13.

The implemented pseudo-LRU has two main interfaces, namely ''read'' and ''update''. The ''read'' interface records the use of a line in a given set: when a hit occurs, the line has just been used, so the LRU state of its set is updated to mark that line as the most recently used one.

always_ff @( posedge clk, posedge reset ) begin
    if ( reset ) begin
        lru_counter <= '{default : '0};
        lru_bits    <= '{default : '0};
    end
    else begin // [1]
        if ( en_hit ) begin
            lru_bits[set_hit] <= |way_hit[( NUM_WAYS/2 ) - 1 : 0]; // 0: first half, 1: second half
            lru_counter       <= lru_counter + 1;                  // update the counter
        end else // [2]
        if ( en_update ) begin
            lru_bits[set_update] <= ~lru_bits[set_update];         // switch to the other half
            lru_counter          <= lru_counter + 1;               // update the counter
        end
    end
end

The ''update'' interface is enabled when a new line is pushed into the cache, which happens when a new instruction cache line arrives from memory to serve a pending transaction. In the Instruction Cache, no replacement is needed since the instruction memory area is non-coherent.
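The code above keeps, per its comments, one bit per set that designates a half of the ways. For comparison, the classic tree-based pseudo-LRU for 4 ways refines that idea with one extra bit per half. The Python sketch below is a hypothetical illustration of that general textbook algorithm (TreePLRU4 is an invented name), not a model of the NPU module.

```python
# Classic tree pseudo-LRU for one 4-way set (textbook variant, not the NPU RTL).
from typing import List


class TreePLRU4:
    """bits[0]: half holding the victim (0 -> ways 0-1, 1 -> ways 2-3).
    bits[1] / bits[2]: victim inside the left / right pair."""

    def __init__(self) -> None:
        self.bits: List[int] = [0, 0, 0]

    def touch(self, way: int) -> None:
        """Mark `way` as most recently used (on a hit or after a fill)."""
        if way < 2:
            self.bits[0] = 1        # next victim in the right half
            self.bits[1] = 1 - way  # point at the other way of the left pair
        else:
            self.bits[0] = 0        # next victim in the left half
            self.bits[2] = 3 - way  # point at the other way of the right pair

    def victim(self) -> int:
        """Way to replace when a new line must be filled."""
        if self.bits[0] == 0:
            return self.bits[1]
        return 2 + self.bits[2]


plru = TreePLRU4()
fill_order = []
for _ in range(4):
    way = plru.victim()
    fill_order.append(way)
    plru.touch(way)
print(fill_order)  # [0, 2, 1, 3]
```

Three bits per set approximate true LRU ordering for 4 ways, whereas a single half-selector bit only distinguishes the two halves.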

=== Tag and Data Instruction Cache ===

The tag and data caches are organized in similar structures. Each way has its own SRAM module, and each read request is forwarded concurrently to all the implemented ways. On the other hand, when a new memory line arrives, the way to update is selected by the pseudo-LRU.

A line is set as valid when an instruction line from main memory is stored in the given way. Each memory line stored in the Instruction Cache is associated with a validity bit, called ''line_valid''. Note the cut-through in the validity check: if the incoming memory line matches the current request address, the incoming line is selected directly, bypassing the Instruction Cache.

if ( tc_valid_update_cache && way_lru == way )
// ...
line_valid_selected[way] <= 1; //cut-through
else
line_valid_selected[way] <= line_valid[icache_address_selected.index] & instruction_valid;

=== Hit/miss detection ===

A cache hit occurs when the line is valid and the tags match; otherwise, the control unit issues a miss request to memory through the '''Thread Controller''' interface.

The following code implements the hit/miss detection logic:

for ( way = 0; way < `ICACHE_WAY; way++ )
    assign hit_miss[way] = tag_read_data[way] == stage1_icache_address.tag && line_valid_selected[way];
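The per-way comparison above, together with the valid cut-through described in the previous subsection, can be modelled as follows. This Python sketch is illustrative: the function and argument names are hypothetical, and the cut-through is reduced to forcing the valid bit of the way currently being refilled, as the validity code does.

```python
# Behavioral sketch of the parallel tag comparison (not the NPU RTL).
from typing import List, Optional


def hit_vector(tag_read: List[int], line_valid: List[bool], req_tag: int,
               refill_way: Optional[int] = None) -> List[bool]:
    """One hit bit per way: tags match and the line is valid.

    refill_way models the cut-through: the way being filled this cycle
    with the requested line is treated as valid.
    """
    hits = []
    for way, (tag, valid) in enumerate(zip(tag_read, line_valid)):
        valid = valid or way == refill_way
        hits.append(valid and tag == req_tag)
    return hits


def is_miss(hits: List[bool], instruction_valid: bool) -> bool:
    """Equivalent of ~|hit_miss & stage1_instruction_valid."""
    return instruction_valid and not any(hits)


tags = [0x12, 0x34, 0x56, 0x78]
valid = [True, True, False, True]
print(hit_vector(tags, valid, 0x34))  # [False, True, False, False]
print(is_miss(hit_vector(tags, valid, 0x56), True))  # True: way 2 is invalid
print(is_miss(hit_vector(tags, valid, 0x56, refill_way=2), True))  # False
```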

In case of a miss, the PC is restored (by asserting the ''stage1_miss[thread_id]'' signal) and a memory request is dispatched using the current PC value as the address.

for ( thread_id = 0; thread_id < `THREAD_NUMB; thread_id++ )
    assign stage1_miss[thread_id] = ( stage1_thread_scheduled_id == thread_id ) ? ~|hit_miss & stage1_instruction_valid : 1'b0;

=== Output logic ===

A memory request due to a miss is dispatched only if all the following conditions are true:
* the current instruction is valid;
* there is no rollback on the scheduled thread;
* the hit/miss logic detected a miss on the current thread request.

if_cache_miss = stage1_instruction_valid & ~rollback_valid[stage1_thread_scheduled_id] & stage1_miss[stage1_thread_scheduled_id];

In case of a hit, the instruction is propagated to the '''Decode''' stage, and its valid signal is asserted only if all the following conditions are true:
* the current instruction is valid;
* there is no rollback on the scheduled thread;
* the hit/miss logic detected a cache hit for the currently scheduled thread.

if_valid = stage1_instruction_valid & ~rollback_valid[stage1_thread_scheduled_id] & ~stage1_miss[stage1_thread_scheduled_id];

| Line 73: | Line 101: | ||

== Decode stage ==

The '''Decode''' stage decodes an instruction fetched by the '''Instruction Fetch''' module and produces the control signals for the datapath. The output signal '''dec_instr''' helps the execution and control modules manage incoming instructions and is propagated through every pipeline stage. Instruction types are described in the [[ISA|ISA section]].

The goal of this stage is to fill all the fields of the dec_instr signal using the fetched instruction '''if_inst_scheduled''', which is composed of an opcode and a body. Each instruction can be one of 7 different types, whose bodies are listed hereafter:

typedef union packed {
    // ...
} instruction_body_t;

A combinational switch-case construct (on the type field of the instruction) fills the dec_instr fields by reading the opcode and the other instruction bits.

Instruction types are:

- RR (Register to Register) has a destination register and two source registers.

- RI (Register Immediate) has a destination register, one source register and an immediate encoded in the instruction word.

- MVI (Move Immediate) has a destination register and a 16-bit immediate.

- MEM (Memory Instruction) has a destination/source field: in case of a load, the first register is the destination register; in case of a store, the first register contains the value to store. In both cases, the second source register contains the base address, and the immediate is encoded in the instruction. The sum of the base address and the immediate gives the effective memory address.

- JBA (Jump Base Address) handles conditional and unconditional jumps; the destination address is computed as the sum of the source register and the immediate (if present).

- CTR (Control instructions).

== Instruction scheduler stage ==

The '''Instruction Scheduler''' (often referred to as Dynamic Scheduler) schedules active threads in a round-robin way, checking data and structural hazards. A scoreboard is allocated for each thread in this module: whenever an instruction is scheduled, the scoreboard marks its destination register as busy by setting a bit. In this way, if a subsequent instruction uses this register, it raises a RAW hazard (when reading it) or a WAW hazard (when writing it). Dually, when an instruction reaches the Writeback module and writes its result to its destination register, the corresponding bit is cleared in the scoreboard, and from then on the register is free to use.

Fetched instructions are stored in FIFOs in the '''Instruction Buffer''' stage, one per thread. The Dynamic Scheduler checks data hazards and determines which thread can be scheduled to the '''Operand Fetch''' stage.

In this component, FPU structural hazards are checked as well. The FP pipe has a single output demux towards the Writeback unit. The FP pipe is conflict-free internally, although two different operations might terminate at the same time and collide on the output, causing a structural hazard. At this stage, only data hazards and this FPU structural hazard are checked; other structural hazards are resolved on-the-fly in the Writeback stage.

The Dynamic Scheduler relies on a light scoreboarding system, one scoreboard per thread. Based on the scoreboard and on the fetched instruction, the Dynamic Scheduler determines which threads are eligible to be scheduled, and a thread is selected through round-robin arbitration. A thread is eligible if its '''can_issue''' bit is high; these bits are organized in a vector (one bit per thread) and assigned as follows:

assign can_issue[thread_id] = ib_instructions_valid[thread_id] && !( hazard_raw || hazard_waw ) && ( ~( |wb_fifo_full ) ) && ~rb_valid[thread_id] && can_issue_fp && can_issue_spm && release_val[thread_id] && sync_detect[thread_id] && ~dsu_stop_issue[thread_id];

A round-robin arbiter evaluates all the '''can_issue''' bits and selects a thread from the eligible pool.

Next, the Dynamic Scheduler forwards the selected instruction and the related thread information to the Operand Fetch, then updates the scoreboard of the issued thread. When the thread is scheduled, the scoreboard_set_bitmap is updated with the destination register: a bit is set to track the destination register used by the current operation. From this moment onward, the register is busy, and any instruction that uses it raises a data hazard.

| Line 117: | Line 145: | ||

Dually, the scoreboard_clear_bitmap tracks all the registers released by the Writeback stage: an operation in Writeback releases its destination register.
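The set/clear bookkeeping described above can be sketched with an integer bitmask per thread. This Python model is a simplification: it uses a single index space of `REGISTER_NUMBER (default 64) registers and ignores the distinction between scalar and vector registers; Scoreboard and its methods are hypothetical names.

```python
# Behavioral sketch of a per-thread scoreboard (illustrative names).
# Simplification: a single register index space; scalar and vector
# registers are not distinguished here.
from typing import Iterable

REGISTER_NUMBER = 64  # default `REGISTER_NUMBER


class Scoreboard:
    def __init__(self) -> None:
        self.busy = 0  # bit r set -> register r is waiting for a writeback

    def _check(self, reg: int) -> None:
        if not 0 <= reg < REGISTER_NUMBER:
            raise ValueError(f"register {reg} out of range")

    def _is_busy(self, reg: int) -> bool:
        self._check(reg)
        return bool((self.busy >> reg) & 1)

    def has_hazard(self, dst: int, srcs: Iterable[int]) -> bool:
        raw = any(self._is_busy(r) for r in srcs)  # a source is not written yet
        waw = self._is_busy(dst)                   # the destination is still pending
        return raw or waw

    def issue(self, dst: int) -> None:
        """Like scoreboard_set_bitmap: mark the destination register busy."""
        self._check(dst)
        self.busy |= 1 << dst

    def writeback(self, dst: int) -> None:
        """Like scoreboard_clear_bitmap: release the destination register."""
        self._check(dst)
        self.busy &= ~(1 << dst)


sb = Scoreboard()
sb.issue(5)                            # an instruction that writes register 5
print(sb.has_hazard(dst=7, srcs=[5]))  # True  (RAW on register 5)
print(sb.has_hazard(dst=5, srcs=[1]))  # True  (WAW on register 5)
sb.writeback(5)
print(sb.has_hazard(dst=7, srcs=[5]))  # False
```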

Finally, when a rollback occurs, the Dynamic Scheduler flushes the FIFO of the corresponding thread and restores its scoreboard to its previous state.

== Operand fetch stage ==

The '''Operand Fetch''' stage builds the operands for the execution pipeline. As mentioned above, the NPU core supports SIMD operations; for this reason, the core is equipped with two register files: a scalar register file (SRF) and a vector register file (VRF). Each SRF register is `REGISTER_SIZE bits wide (default 32 bits). A VRF register is wider and holds one scalar register per hardware lane (`REGISTER_SIZE x `HW_LANE, by default 32 bits x 16 HW lanes). SRF and VRF have the same number of registers (`REGISTER_NUMBER, defined in npu_define.sv, default 64 registers each). Both register files are allocated in SRAM memories with two read ports and one write port each.
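With the default parameters, the storage required by the register files can be worked out directly. The Python arithmetic below uses only the defaults quoted above; the per-thread multiplication follows from each hardware thread owning its own register file, and register_file_bytes is a hypothetical helper, since the default thread count is not given in this section.

```python
# Register file sizing with the default parameters quoted above.
REGISTER_SIZE = 32     # `REGISTER_SIZE, bits per scalar register
HW_LANE = 16           # `HW_LANE, hardware lanes
REGISTER_NUMBER = 64   # `REGISTER_NUMBER, registers per file

vector_register_bits = REGISTER_SIZE * HW_LANE           # 512 bits
srf_bytes = REGISTER_NUMBER * REGISTER_SIZE // 8         # 256 bytes
vrf_bytes = REGISTER_NUMBER * vector_register_bits // 8  # 4096 bytes


def register_file_bytes(num_threads: int) -> int:
    """Total SRF + VRF storage when every thread owns a private copy."""
    return num_threads * (srf_bytes + vrf_bytes)


print(vector_register_bits, srf_bytes, vrf_bytes)  # 512 256 4096
print(register_file_bytes(8))                      # 34816
```

The VRF is `HW_LANE times larger than the SRF, so it dominates the register storage of each thread.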

The register file read ports receive the scheduled instruction as input, while the data read from the register files are forwarded to the second stage, which builds operands 0 and 1. In case of a memory access, operand 0 holds the effective memory address, built by adding the base address and the immediate offset; in all other cases, it contains a register value.

if ( next_issue_inst_scheduled.is_memory_access )
// ...
opf_fecthed_op0_buff <= {`HW_LANE{rd_out0_scalar}};

Immediate values are carried in operand 1: when the current instruction has an immediate, it is replicated on each vector element of operand 1. In all other cases, operand 1 contains a register value.

if ( next_issue_inst_scheduled.is_source1_immediate )
// ...
opf_fecthed_op1_buff <= {`HW_LANE{rd_out1_scalar}};
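The operand composition rules above can be summarized with the following Python sketch, in which each operand is a list of `HW_LANE values. The helper names are hypothetical, 32-bit wrap-around is an assumption, and the memory-address branch assumes the immediate offset is added to every lane's base address, which this section does not spell out.

```python
# Behavioral sketch of operand 0/1 composition (illustrative, not the RTL).
from typing import List

HW_LANE = 16
MASK32 = 0xFFFF_FFFF  # assumption: 32-bit registers, two's-complement wrap


def replicate(value: int) -> List[int]:
    """Scalar or immediate value copied on every lane ({`HW_LANE{x}})."""
    return [value & MASK32] * HW_LANE


def build_op0(src0: List[int], imm: int, is_memory_access: bool) -> List[int]:
    if is_memory_access:
        # effective address = base address + immediate offset
        return [(base + imm) & MASK32 for base in src0]
    return list(src0)


def build_op1(src1: List[int], imm: int, is_source1_immediate: bool) -> List[int]:
    if is_source1_immediate:
        return replicate(imm)  # the immediate spans all vector elements
    return list(src1)


base = replicate(0x1000)             # scalar base register, replicated
print(build_op0(base, -8, True)[0])  # 4088 (0x1000 - 8)
print(build_op1(base, 3, True)[:4])  # [3, 3, 3, 3]
```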

The PC is stored in a special register in the Instruction Fetch module, hence it is not held in the scalar register file. It is forwarded to this stage along with the decoded instruction and used to compose the operands when needed.

The register file write port stores the output data coming from the Writeback stage. In case of vector operations, wb_result_hw_lane_mask selects which lanes are affected by the current operation.

for ( lane_id = 0; lane_id < `HW_LANE; lane_id ++ ) begin : LANE_WRITE_EN
// ...

== Integer Arithmetic & Logic unit ==

The '''Integer Arithmetic & Logic unit''' is the main execution stage: it handles jumps, arithmetic and logic operations, and it also manages control register accesses and moves. For further details about the supported operations, please refer to the [[ISA]] page.

To dispatch the current instruction to the right operator, this module relies on the selection fields of the decoded instruction. For example:

assign is_jmpsr = opf_inst_scheduled.pipe_sel == PIPE_BRANCH & opf_inst_scheduled.is_int & opf_inst_scheduled.is_branch;

Vector operations are executed in parallel, spreading data across all the HW lanes: all lanes perform the same operation sharing the same control logic, and the final vector result signal (called vec_result) is composed by merging the scalar results of the different lanes.

On the other hand, when an instruction performs a scalar operation, only the first lane of vec_result contains a meaningful result. An output logic selects the result from the right operator based on the issued instruction, as shown in the following code:

if( is_compare )
// ...

=== Control registers ===

The Control Register unit holds status and performance information. Some information is shared among all threads (such as the Core ID or the global performance counter), while other information is thread-specific (such as the Thread ID and the Thread PC):

- TILE_ID: shared information, returns the current tile identifier.

- CORE_ID: shared information, returns the current core identifier.

- THREAD_ID: private information, returns the thread identifier.

- GLOBAL_ID: private information, returns Tile ID, Core ID and Thread ID merged together.

- PC: private information, returns the current value of the PC.

- TRAP_REASON: private information, returns the trap reason.

- THREAD_STATUS: private information, returns the current thread status.

- ARGC: shared information, number of arguments of the main function.

- ARGV_ID: shared information, the address of ARGV.

- THREAD_NUMB_ID: number of hardware threads currently implemented.

- THREAD_MISS_CC_ID: private information, clock cycles during which the thread is idle due to memory operations.

- KERNEL_WORK: private information, kernel cycles.

- CPU_CTRL_REG_ID: CPU mode register.

- PWR_MDL_REG_ID: power model performance counter.

- UNCOHERENCE_MAP_ID: stores information about the non-coherent memory regions.

- DEBUG_BASE_ADDR: debug registers base address, fetches the value of the first debug register.

The Control Register unit has a direct interface with the Host Interface, in order to make this information accessible on the host side.

Control registers are handled and accessed (on the core side) by the Integer Arithmetic & Logic unit.

Users can specify non-coherent memory regions by writing to the <code>UNCOHERENCE_MAP_ID</code> control register. This register maps a table that tracks the non-coherent regions, whose entries are declared as follows:

typedef struct packed {
    logic [`UNCOHERENCE_MAP_BITS-1:0] start_addr;
    logic [`UNCOHERENCE_MAP_BITS-1:0] end_addr;
    logic valid;
} uncoherent_mmap;

The user specifies the start and end addresses of the non-coherent region, each 10 bits wide as defined in the <code>npu_coherence_defines.sv</code> file, while the least significant bit states the validity of the entry. The bits just above the entry select which table entry is written. The following control logic updates the non-coherent memory table whenever a <code>write_cr</code> occurs:

genvar region_id;
generate
    for (region_id = 0; region_id < `UNCOHERENCE_MAP_SIZE; region_id++) begin : UNCOHERENT_AREA_LOOKUP
        always_ff @ ( posedge clk, posedge reset )
            if ( reset ) begin
                uncoherent_memory_areas[region_id].valid <= 1'b0;
            end else begin
                if ( is_cr_write & write_mmap & opf_fetched_op0[0][$bits(uncoherent_mmap) +: $clog2(`UNCOHERENCE_MAP_SIZE)] == region_id ) begin
                    uncoherent_memory_areas[region_id] <= opf_fetched_op0[0][$bits(uncoherent_mmap)-1 : 0];
                end
            end

        assign uncoherent_area_hits[region_id] = address_lookup >= uncoherent_memory_areas[region_id].start_addr && address_lookup <= uncoherent_memory_areas[region_id].end_addr && uncoherent_memory_areas[region_id].valid;
    end
endgenerate

The above logic also checks whether an issued memory transaction hits one of the non-coherent regions; whenever this occurs, the module propagates this information to the Operand Fetch through the <code>uncoherent_area_hit</code> signal.
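To illustrate the encoding, the following Python sketch packs a control register write the way the logic above decodes it: the 21-bit entry (10-bit start, 10-bit end, valid in the least significant bit) occupies the low bits, and the region index sits just above it. UNCOHERENCE_MAP_SIZE = 4 is a hypothetical table size and the helper names are illustrative. As in the RTL, the lookup compares address_lookup directly with the 10-bit bounds; which address bits feed address_lookup is not stated here, so the example uses small values.

```python
# Behavioral sketch of the non-coherent region table (illustrative names).
from typing import List, Tuple

UNCOHERENCE_MAP_BITS = 10                             # width of start/end fields
UNCOHERENCE_MAP_SIZE = 4                              # hypothetical table size
ENTRY_BITS = 2 * UNCOHERENCE_MAP_BITS + 1             # $bits(uncoherent_mmap) = 21
INDEX_BITS = (UNCOHERENCE_MAP_SIZE - 1).bit_length()  # $clog2(UNCOHERENCE_MAP_SIZE)
FIELD_MASK = (1 << UNCOHERENCE_MAP_BITS) - 1


def pack_write(region_id: int, start: int, end: int, valid: bool = True) -> int:
    """Value written to UNCOHERENCE_MAP_ID (packed struct: start is the MSB field)."""
    entry = (((start & FIELD_MASK) << (UNCOHERENCE_MAP_BITS + 1))
             | ((end & FIELD_MASK) << 1) | int(valid))
    return (region_id << ENTRY_BITS) | entry


def unpack_write(value: int) -> Tuple[int, int, int, bool]:
    """Decode (region_id, start, end, valid) as the update logic does."""
    region_id = (value >> ENTRY_BITS) & ((1 << INDEX_BITS) - 1)
    start = (value >> (UNCOHERENCE_MAP_BITS + 1)) & FIELD_MASK
    end = (value >> 1) & FIELD_MASK
    return region_id, start, end, bool(value & 1)


class UncoherenceMap:
    def __init__(self) -> None:
        self.entries = [(0, 0, False)] * UNCOHERENCE_MAP_SIZE  # reset: all invalid

    def write_cr(self, value: int) -> None:
        region_id, start, end, valid = unpack_write(value)
        self.entries[region_id] = (start, end, valid)

    def hits(self, address_lookup: int) -> List[bool]:
        """One bit per region, like uncoherent_area_hits."""
        return [valid and start <= address_lookup <= end
                for start, end, valid in self.entries]


table = UncoherenceMap()
table.write_cr(pack_write(2, 0x010, 0x01F))
print(table.hits(0x015))  # [False, False, True, False]
```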

== Scratchpad unit ==

This unit is described in the dedicated [[Scratchpad unit|scratchpad page]].

== Load/Store unit ==

The '''LSU''' is described in the dedicated [[Load/Store unit|load/store subsection]].

== Floating point unit ==

The '''FPU''' executes multistage floating-point instructions. It supports single-precision FP operations according to the IEEE 754-2008 standard, and its operators are auto-generated with the open-source FloPoCo library. The FP hardware operators instantiated in the FPU are:
* FP Add/Sub
* FP Mult
* FP Div
* Converters: Int to FP, FP to Int

Each operator is fully pipelined and has its own latency. The FPU has a light control logic that performs no structural hazard checking on the Writeback (this task is delegated to the Instruction Scheduler); instead, it delays the instruction information according to the operation latency, so that each result reaches the Writeback module together with its related information.
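The latency-matching idea can be sketched as one fixed-length delay line per operator: instruction information enters when the operation is issued and leaves exactly when its result is ready. The Python model below is illustrative; the operator latencies (3 and 2 cycles) are hypothetical, and the collision it raises is the structural hazard that, as described in the Instruction Scheduler section, the scheduler is in charge of preventing.

```python
# Behavioral sketch of FPU latency matching (hypothetical latencies).
from collections import deque
from typing import Any, Deque, Dict, Optional


class DelayLine:
    """Returns each pushed item exactly `latency` ticks later."""

    def __init__(self, latency: int) -> None:
        self.stages: Deque[Optional[Any]] = deque([None] * latency)

    def tick(self, incoming: Optional[Any] = None) -> Optional[Any]:
        self.stages.append(incoming)
        return self.stages.popleft()


class FPUModel:
    """One delay line per operator; outputs share a single Writeback port."""

    def __init__(self, latencies: Dict[str, int]) -> None:
        self.lines = {op: DelayLine(lat) for op, lat in latencies.items()}

    def tick(self, issue_op: Optional[str] = None, info: Any = None) -> Optional[Any]:
        """Advance one cycle, optionally issuing `info` on operator `issue_op`."""
        outputs = [line.tick(info if op == issue_op else None)
                   for op, line in self.lines.items()]
        done = [o for o in outputs if o is not None]
        if len(done) > 1:  # two operators finish together: structural hazard
            raise RuntimeError(f"writeback collision: {done}")
        return done[0] if done else None


fpu = FPUModel({"add": 3, "mul": 2})  # hypothetical latencies in cycles
results = [fpu.tick("add", "i0"), fpu.tick(), fpu.tick("mul", "i1"),
           fpu.tick(), fpu.tick()]
print(results)  # [None, None, None, 'i0', 'i1']
```

Issuing the multiplication one cycle after the addition instead would make both results leave in the same cycle and raise the collision.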

== Barrier unit ==

== Branch unit ==

The '''Branch Control''' unit handles conditional and unconditional jumps and restores the scoreboards when a jump is taken. It signals to the Rollback Handler whether a jump has to be taken. The base address or condition is stored in opf_fecthed_op0[0], while the immediate is stored in opf_fecthed_op1[0].

The NPU core supports two jump instruction formats:
* JRA: Jump Relative Address is an unconditional jump instruction; it takes an immediate, and the core always jumps to the PC + immediate location. E.g. jmp -12 -> BC jumps to the PC-12 memory location (3 instructions back).
* JBA: Jump Base Address can be a conditional or an unconditional jump; it takes a register and an immediate as input. In case of a conditional jump, the input register holds the jump condition: if the condition is satisfied, BC jumps to the PC + immediate location. E.g. branch_eqz s4, -12 -> BC jumps to PC-12 if register s4 is equal to zero. In case of an unconditional jump, the input register holds the effective address to jump to. E.g. jmp s4 -> BC jumps to the memory location stored in s4.

| − | + | The following signals notify to the Rollback Handler if a branch is taken: | |

assign bc_rollback_enable = jump & opf_inst_scheduled.is_branch & opf_valid; | assign bc_rollback_enable = jump & opf_inst_scheduled.is_branch & opf_valid; | ||

assign bc_rollback_valid = opf_valid && opf_inst_scheduled.pipe_sel == PIPE_BRANCH && ~bc_rollback_enable; | assign bc_rollback_valid = opf_valid && opf_inst_scheduled.pipe_sel == PIPE_BRANCH && ~bc_rollback_enable; | ||

| − | The | + | The bc_rollback_enable signal is asserted only when a jump is taken. The valid is asserted just if a branch operation is executed, regardless of the result. |
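The Python sketch below models both jump formats as a behavioral reference. It computes the target address and bc_rollback_enable. It is not the RTL. The function name `branch_unit`, the `kind` strings, and the reading of "PC" as the address of the branch instruction itself are illustrative assumptions. branch_eqz is the only conditional jump modelled, because it is the only one named above.

```python
def branch_unit(kind, pc, op0, imm, opf_valid=True):
    """Hypothetical model: return (bc_rollback_enable, target PC or None)."""
    if kind == "jra":            # jmp imm: always taken, PC-relative target
        taken, target = True, pc + imm
    elif kind == "jba_uncond":   # jmp sX: the register holds the effective address
        taken, target = True, op0
    elif kind == "branch_eqz":   # branch_eqz sX, imm: taken only if sX == 0
        taken, target = op0 == 0, pc + imm
    else:
        raise ValueError(f"unsupported branch kind: {kind}")
    # bc_rollback_enable = jump & is_branch & opf_valid
    rollback_enable = taken and opf_valid
    return rollback_enable, (target if rollback_enable else None)


# jmp -12 at address 0x400 jumps 3 instructions back
print(branch_unit("jra", 0x400, 0, -12))   # (True, 1012)
```

In this model, a conditional branch that is not taken returns no target, so no rollback is requested.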

== Writeback stage ==

The '''Writeback''' stage forwards the incoming results from the execution pipes to the register files. Execution pipelines have different lengths and latencies, so instructions issued in different cycles can arrive at the Writeback in the same clock cycle. The Writeback module resolves these structural hazards on the fly. Load/store and scratchpad memory operations have a variable latency that is not known at compile time or issue time, so they can cause an unpredictable structural hazard at this stage.

=== Writeback Request FIFOs ===

The Writeback module resolves collisions on the fly through a set of dedicated queues. Each queue stores the incoming results from one execution module. An arbiter then selects one result in round-robin fashion and forwards it to its destination register. Each queue stores all the information a writeback operation needs, such as the destination register and the write mask.

The following code shows how the input requests of those FIFOs are assigned:

assign input_wb_request[PIPE_FP_ID].pc = fp_inst_scheduled.pc;

assign input_wb_request[PIPE_FP_ID].writeback_valid = fp_valid;

assign input_wb_request[PIPE_FP_ID].thread_id = fp_inst_scheduled.thread_id;

assign input_wb_request[PIPE_FP_ID].writeback_result = fp_result;

...

assign input_wb_request[PIPE_INT_ID].pc = int_inst_scheduled.pc;

assign input_wb_request[PIPE_INT_ID].writeback_valid = int_valid;

assign input_wb_request[PIPE_INT_ID].thread_id = int_inst_scheduled.thread_id;

assign input_wb_request[PIPE_INT_ID].writeback_result = int_result;

Each FIFO raises a pending request whenever it is not empty. All pending requests go to a round-robin (RR) arbiter, which selects one request and forwards it to the Operand Fetch to be completed. The following logic shows the RR arbiter:

// Every non-empty writeback FIFO raises a request

assign pending_requests = ~writeback_fifo_empty;

rr_arbiter #(

.NUM_REQUESTERS( `NUM_EX_PIPE )

) rr_arbiter (

.clk ( clk ),

.reset ( reset ),

.request ( pending_requests ),

.update_lru ( 1'b0 ),

.grant_oh ( selected_request_oh )

);

// Convert the one-hot grant into the index of the selected pipe

oh_to_idx #(

.NUM_SIGNALS( `NUM_EX_PIPE ),

.DIRECTION ( "LSB0" )

) oh_to_idx (

.one_hot( selected_request_oh ),

.index ( selected_pipe )

);

Note that each writeback_request_fifo has its almost_full_threashold reduced by 4. This equals the worst-case distance from the first stage of the Operand Fetch, so operations that are already in flight when a FIFO signals almost-full still find room and are not lost.
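The Python sketch below models the queue-plus-arbiter scheme behaviorally, which helps when reasoning about the almost-full margin. It makes several assumptions. The FIFO depth of 8 is hypothetical, since the real depth is not given here. The arbiter uses a conventional rotating-priority round-robin policy, because the internal policy of rr_arbiter is not shown above. The almost-full flags are assumed to feed the wb_fifo_full term of can_issue in the Instruction Scheduler. The class name is illustrative.

```python
from collections import deque


class WritebackRequestQueues:
    """Hypothetical model: one FIFO per execution pipe plus a round-robin arbiter."""

    def __init__(self, num_pipes, depth=8, almost_full_margin=4):
        self.fifos = [deque() for _ in range(num_pipes)]
        self.depth = depth                  # assumed FIFO depth
        self.margin = almost_full_margin    # threshold reduced by 4, as in the text
        self.last_grant = num_pipes - 1     # so that pipe 0 gets the first grant

    def push(self, pipe, request):
        if len(self.fifos[pipe]) >= self.depth:
            raise OverflowError(f"writeback FIFO {pipe} overflow: request lost")
        self.fifos[pipe].append(request)

    def almost_full(self):
        # One flag per FIFO, raised `margin` entries before the FIFO is really full
        return [len(f) >= self.depth - self.margin for f in self.fifos]

    def grant(self):
        """Pop one request from the next non-empty FIFO after the last granted one."""
        n = len(self.fifos)
        for offset in range(1, n + 1):
            pipe = (self.last_grant + offset) % n
            if self.fifos[pipe]:
                self.last_grant = pipe
                return pipe, self.fifos[pipe].popleft()
        return None  # no pending request in this cycle
```

With requests pending on pipes 0 and 2, successive grants alternate between them, so no pipe starves even if one keeps producing results every cycle.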

=== Result Composer ===

Depending on the incoming result, two tasks have to be performed for each execution pipe before the result reaches the register files:

* create a byte-grained register mask, to avoid writing undesired bytes (e.g. a load_8 operation writes only the first byte, not the whole 4-byte register);

* compose the final result, moving the bytes to the right positions and handling the sign extension of 8-bit, 16-bit and 32-bit loads.

The following code shows how the result is composed for a byte load. The control logic checks the address offset and arranges the data accordingly. If the operation requires it, the sign is also extended, as in the second case shown below:

// LDST unit load operation: byte selection and extension

assign word_data_mem[j] = output_wb_request[PIPE_MEM_ID].writeback_result[j][31 : 0];

// Unsigned byte load: select the byte addressed by offset[1:0] and zero-extend it

always_comb

case ( output_wb_request[PIPE_MEM_ID].result_address.offset[1 : 0] )

2'b00 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][7 : 0]};

2'b01 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][15 : 8]};

2'b10 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][23 : 16]};

2'b11 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][31 : 24]};

endcase

...

// Signed byte load: replicate the most significant bit of the selected byte

always_comb

case ( output_wb_request[PIPE_MEM_ID].result_address.offset[1 : 0] )

2'b00 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][7]} }, word_data_mem[j][7 : 0]};

2'b01 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][15]} }, word_data_mem[j][15 : 8]};

2'b10 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][23]} }, word_data_mem[j][23 : 16]};

2'b11 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][31]} }, word_data_mem[j][31 : 24]};

endcase
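The two case statements above can be checked against a small Python model of one lane. It assumes the default 32-bit register and the byte numbering used by the offset cases (offset 0 selects bits 7:0). The function name is illustrative and does not come from the RTL.

```python
REGISTER_SIZE = 32                      # default register width (assumed)
REGISTER_MASK = (1 << REGISTER_SIZE) - 1


def compose_byte_load(word, offset, signed):
    """Model of byte_data_mem (signed=False) and byte_data_mem_s (signed=True)."""
    shift = 8 * (offset & 0b11)         # offset[1:0] selects the byte lane
    byte = (word >> shift) & 0xFF
    if signed and byte & 0x80:          # replicate bit 7 of the selected byte
        byte |= REGISTER_MASK & ~0xFF
    return byte
```

For the memory word 0x80FF7F01, a signed load at offset 3 yields 0xFFFFFF80, while the unsigned variant yields 0x80.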

== Rollback handler ==

The '''Rollback Handler''' restores the PC and the scoreboard of the thread that issued a rollback. On a jump or a trap, the Branch module in the Execution pipeline issues a rollback request to this stage and passes it the thread ID, the old scoreboard value and the PC to restore.

The Rollback Handler also flushes all the ongoing operations and the queued requests of the corresponding thread in the Instruction Buffer stage. It uses a clear_bitmap for each thread as follows:

* each time a rollback is issued, the clear_bitmap is reset;

if ( rollback_valid[thread_id] )

clear_bitmap[thread_id] <= scoreboard_t'( 1'b0 );

* each time an operation is issued, a dedicated structure tracks the scoreboard updates of the last 4 cycles. On a rollback, the current scoreboard is restored from these records. An issued instruction is no longer flushable after 4 clock cycles. A conditional branch is resolved 3 cycles after its issue, and if the jump is taken, all the instructions issued during this period have to be flushed and their scoreboard bits cleared.

Consequently, an instruction issued more than 4 clock cycles earlier must not be affected by the pipeline flush. This structure tracks the issued instructions and, after 4 clock cycles, cleans the clear bitmap accordingly.

always_ff @ ( posedge clk, posedge reset ) begin : ROLLBACK_INSTRUCTION_TRACKER

if ( reset ) begin

// Clear the whole issue history on reset

scoreboard_set_issue_t0 <= scoreboard_t'( 1'b0 );

scoreboard_set_issue_t1 <= scoreboard_t'( 1'b0 );

scoreboard_set_issue_t2 <= scoreboard_t'( 1'b0 );

scoreboard_set_issue_t3 <= scoreboard_t'( 1'b0 );

end

else if ( enable ) begin

if ( rollback_valid[thread_id] ) begin

// A rollback flushes every tracked issue, so the history restarts from zero

scoreboard_set_issue_t0 <= scoreboard_t'( 1'b0 );

scoreboard_set_issue_t1 <= scoreboard_t'( 1'b0 );

scoreboard_set_issue_t2 <= scoreboard_t'( 1'b0 );

scoreboard_set_issue_t3 <= scoreboard_t'( 1'b0 );

end

else begin

// Shift the bits set at issue by one cycle; after t3 they are no longer flushable

scoreboard_set_issue_t0 <= scoreboard_set_issue;

scoreboard_set_issue_t1 <= scoreboard_set_issue_t0;

scoreboard_set_issue_t2 <= scoreboard_set_issue_t1;

scoreboard_set_issue_t3 <= scoreboard_set_issue_t2;

end

end

end

* when a rollback is issued, the scoreboard_temp mask records all the operations issued before the rollback, except the jump operation itself. The Instruction Scheduler uses this mask to undo all the instructions scheduled after the rollback.
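The tracker above is a four-tap shift register, one per thread. The Python model below reproduces it behaviorally. The class and method names are hypothetical. The text does not show how the Rollback Handler combines the taps into scoreboard_temp, so `still_flushable()` simply ORs all taps. That helper is an illustrative assumption, not the RTL's rule.

```python
class RollbackInstructionTracker:
    """Per-thread history of scoreboard bits set at issue (taps t0..t3)."""

    DEPTH = 4  # an issued instruction stops being flushable after 4 cycles

    def __init__(self):
        self.taps = [0] * self.DEPTH  # taps[0] is t0, the most recent cycle

    def clock(self, scoreboard_set_issue, rollback_valid, enable=True):
        if not enable:
            return                          # hold state, like the else-if ( enable ) guard
        if rollback_valid:
            self.taps = [0] * self.DEPTH    # rollback: forget the whole history
        else:
            # t0 <= set_issue, t1 <= t0, t2 <= t1, t3 <= t2
            self.taps = [scoreboard_set_issue] + self.taps[:-1]

    def still_flushable(self):
        """Illustrative only: registers reserved by the issues still being tracked."""
        mask = 0
        for tap in self.taps:
            mask |= tap
        return mask
```

Each issue's scoreboard bits stay visible for exactly four clocks and then shift out, which matches the 4-cycle flush window described above.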


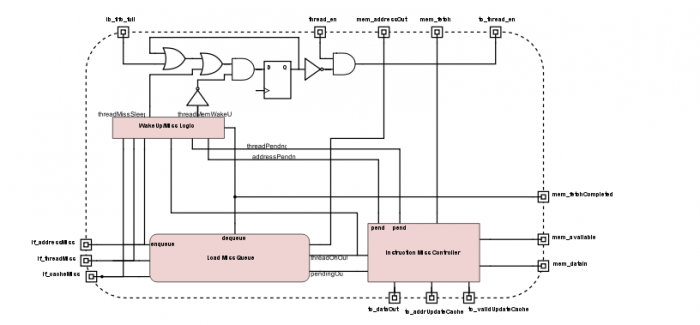

== Thread controller ==

The '''Thread Controller''' manages the pool of eligible threads. This module blocks the threads that cannot proceed because of cache misses or hazards. It also wakes the threads up when the blocking conditions no longer hold.

assign tc_if_thread_en = ~wait_thread_mask & thread_en & ~ib_fifo_full;


Note that a load miss blocks the corresponding thread through the ib_fifo_full signal until the data is retrieved from the main memory.

The Thread Controller also connects the Instruction Cache to the main memory. The architecture supports only one level of caching for instructions, so on an instruction cache miss the data is retrieved directly from the main memory through the network-on-chip. A simple 3-state FSM handles each instruction miss. First, it waits for a cache miss request. Then it saves all the needed information and waits for a memory response. Finally, it sends a "wake-up" signal to the core and waits for the next memory request.

Note that, during the memory wait time, (1) another cache miss may be raised, and/or (2) another thread may raise a cache miss for the same address. A dedicated queue handles these cases: it queues the cache misses and merges requests to the same instruction address coming from different threads.
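The Python sketch below models the miss FSM together with the merging queue. It rests on a few assumptions. The state names, the class name and the rule "only the oldest queued miss is in flight" are illustrative. After a wake-up, the model goes straight back to waiting on memory if misses are still queued.

```python
from collections import OrderedDict
from enum import Enum, auto


class MissState(Enum):
    WAIT_REQUEST = auto()   # idle, waiting for an instruction cache miss
    WAIT_MEMORY = auto()    # miss info saved, waiting for the memory response
    WAKE_UP = auto()        # line received, waking up the blocked threads


class InstructionMissHandler:
    """Hypothetical model of the 3-state miss FSM plus the merging miss queue."""

    def __init__(self):
        self.state = MissState.WAIT_REQUEST
        self.pending = OrderedDict()   # address -> ids of threads waiting on it (FIFO order)
        self.to_wake = set()

    def cache_miss(self, address, thread_id):
        # A miss on an address already pending is merged instead of queued twice
        self.pending.setdefault(address, set()).add(thread_id)
        if self.state is MissState.WAIT_REQUEST:
            self.state = MissState.WAIT_MEMORY

    def memory_response(self, address):
        if self.state is not MissState.WAIT_MEMORY:
            raise RuntimeError("unexpected memory response")
        oldest = next(iter(self.pending))   # only the oldest miss is in flight
        if address != oldest:
            raise ValueError(f"response for {address:#x}, expected {oldest:#x}")
        self.to_wake = self.pending.pop(oldest)
        self.state = MissState.WAKE_UP

    def clock(self):
        """Return the threads woken up in this cycle, then serve the next miss if any."""
        if self.state is not MissState.WAKE_UP:
            return set()
        woken, self.to_wake = self.to_wake, set()
        self.state = MissState.WAIT_MEMORY if self.pending else MissState.WAIT_REQUEST
        return woken
```

If threads 0 and 2 both miss on the same line, a single memory transaction serves them and both are woken in the same cycle.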

Finally, the Thread Controller provides direct access to the core performance counters and to special registers, such as the PC.

[[File:tc.png|700px|Thread controller]]

Latest revision as of 14:52, 28 June 2019

The core is a RISC in-order pipeline (as depicted in the figure below) whose control unit is intentionally kept lightweight. The architecture masks memory and operation latencies by relying heavily on hardware multithreading, and it achieves high computational performance through wide SIMD hardware support. Because the control logic is light, the core devotes most of its resources to accelerating computation in highly data-parallel kernels. In the hardware-multithreaded NaplesPU architecture, each hardware thread has its own PC, register file, and control registers. The number of threads is user-configurable, but it must be a power of two, like the other configurable parameters. An NPU hardware thread is equivalent to a wavefront in AMD terminology and to a warp in NVIDIA CUDA terminology. The processor uses a deep pipeline to improve clock speed.

All threads share the same execution units, which are organized in SIMD fashion. Execution pipelines are organized in hardware vector lanes (as in vector processors), so each operator is replicated N times. Each thread can perform a SIMD operation on independent data, held in a vector register file. The core supports a high-throughput non-coherent scratchpad memory, or SPM (corresponding to shared memory in NVIDIA terminology). The SPM is divided into a parameterizable number of banks based on a user-configurable mapping function. The memory controller resolves bank collisions at run time, ensuring correct execution of SPM accesses from concurrent threads. The SPM is non-coherent because coherence mechanisms incur a high latency and are not strictly necessary for many applications.


Instruction Fetch Stage

The Instruction Fetch stage schedules the next thread PC from the pool of eligible threads, which the Thread Controller unit manages. Available threads are scheduled in round-robin fashion, implementing a fine-grained thread scheduling model. In addition, during the boot phase, the Thread Controller initializes each thread PC through a dedicated interface.

The instruction cache is N-way set associative (by default it has 4 ways and 128 sets of 512 bits each). A separate SRAM is allocated for each instantiated way, so all the ways can be read in parallel during the tag evaluation phase.

Once an eligible thread is selected, the Instruction Fetch stage reads its PC and determines whether the cache line holding the next instruction is already in the instruction cache. This module is divided into two stages. In the first stage, each way has a memory bank containing the tag values and validity information of the cache sets. This stage reads the requested set from all the ways in parallel and passes the data to the second stage. Because the tag memory has one cycle of latency, the next stage handles the result: it compares the tags read from each way with the request tag and, if they match, raises a cache hit. In that case, the first stage issues the instruction cache data address to the instruction cache data memory. If a miss occurs, an instruction memory transaction is issued to the Network Interface (or to the Cache Controller in a single-core instance), and the thread is blocked until the instruction line is retrieved from the main memory.

Finally, this module restores the PC on a rollback. When a rollback occurs and the Rollback Handler stage sets the rollback signals, the Instruction Fetch module overwrites the PC of the thread that issued the rollback.

Thread & PC selection

A thread is selected from the eligible ones using an external signal from the Thread Controller unit. In this module, an internal round-robin arbiter schedules the threads fairly, and a different thread is scheduled every clock cycle, as is typical of fine-grained multithreaded architectures.

Once the thread is scheduled, its PC is fetched and updated according to the following events:

- On a cache hit, the instruction is fetched from the Instruction Cache and the PC value is incremented by 4.

- On a cache miss, the thread is stalled, a memory transaction for the corresponding memory line is issued, and the value of the PC is restored. When the instruction has been fetched from the main memory, the thread is rescheduled and these conditions are checked again.

- On a rollback of the scheduled thread, the PC is set by the rollback transaction and nothing is propagated to the next stage.

if ( tc_job_valid && thread_id == tc_job_thread_id ) // new job

next_pc[thread_id] <= tc_job_pc;

else if ( rollback_valid[thread_id] ) // rollback

next_pc[thread_id] <= rollback_pc_value[thread_id];

else if ( stage1_miss[thread_id] && stage1_thread_scheduled_id == thread_id ) // Inst miss

next_pc[thread_id] <= next_pc[thread_id] - address_t'( 3'd4 );

else if ( thread_scheduled_bitmap[thread_id] ) // Normal execution

next_pc[thread_id] <= next_pc[thread_id] + address_t'( 3'd4 );
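The priority order of this if/else chain is easiest to see in a per-thread Python model. It assumes a 32-bit address_t. The keyword names are hypothetical stand-ins for the RTL conditions. On a miss, the model subtracts the 4 that was added when the missing fetch was scheduled, which is one reading of "the value of the PC is restored".

```python
ADDRESS_MASK = 0xFFFFFFFF  # assumes a 32-bit address_t


def next_pc(pc, *, job_pc=None, rollback_pc=None, miss=False, scheduled=False):
    """Per-thread next_pc update, following the priority of the if/else chain."""
    if job_pc is not None:          # new job from the Thread Controller
        return job_pc & ADDRESS_MASK
    if rollback_pc is not None:     # rollback: PC chosen by the Rollback Handler
        return rollback_pc & ADDRESS_MASK
    if miss:                        # undo the +4 applied when the missing fetch was scheduled
        return (pc - 4) & ADDRESS_MASK
    if scheduled:                   # normal execution: next 4-byte instruction
        return (pc + 4) & ADDRESS_MASK
    return pc                       # thread not scheduled: PC unchanged
```

A rollback wins over a miss detected in the same cycle, and a new job from the Thread Controller wins over everything else.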

Cache Pseudo-LRU

The module described here is a hardware implementation of the pseudo-LRU algorithm. For the full algorithm, please refer to the following link, page 13.

The implemented pseudo-LRU has two main interfaces, read and update. The read interface records the use of a given set. When a hit occurs, the memory line has just been used, so the set entry must be updated to mark that line as the most recently used.

always_ff @( posedge clk, posedge reset ) begin

if ( reset ) begin

lru_counter <= '{default : '0};

lru_bits <= '{default : '0};

end

else begin // [1]

if ( en_hit ) begin

lru_bits[set_hit] <= |way_hit[( NUM_WAYS/2 ) - 1 : 0]; // 0: first half, 1: second half

lru_counter <= lru_counter + 1; // update the counter

end else // [2]

if ( en_update ) begin

lru_bits[set_update] <= ~lru_bits[set_update]; // switch to the other half

lru_counter <= lru_counter + 1; // update the counter

end

end

end

The update interface is enabled when a new line is pushed into the cache. This happens when a new instruction cache line arrives from memory because of a pending transaction. In the case of the Instruction Cache, no replacement is needed, since the instruction memory area is non-coherent.
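The always_ff block above keeps one bit per set plus a usage counter. The Python model below mirrors it behaviorally. The default sizes come from the 4-way, 128-set configuration. The class name is hypothetical. The counter is unbounded here, whereas the RTL lru_counter wraps at its (unstated) width. How the victim way is chosen within the selected half is not shown in the text, so the model does not cover it.

```python
class InstructionCachePLRU:
    """Behavioral model of the lru_bits / lru_counter update shown above."""

    def __init__(self, num_sets=128, num_ways=4):
        self.half = num_ways // 2
        self.lru_bits = [0] * num_sets   # 0: first half, 1: second half
        self.lru_counter = 0

    def clock(self, en_hit=False, set_hit=0, way_hit=0, en_update=False, set_update=0):
        first_half_mask = (1 << self.half) - 1
        if en_hit:                                   # [1] read interface has priority
            # |way_hit[NUM_WAYS/2-1:0]: 1 if the hit way lies in the first half
            self.lru_bits[set_hit] = int((way_hit & first_half_mask) != 0)
            self.lru_counter += 1
        elif en_update:                              # [2] new line pushed into the cache
            self.lru_bits[set_update] ^= 1           # switch to the other half
            self.lru_counter += 1
```

A hit on way 1 (one-hot 0b0010) in a 4-way cache sets the set's bit to 1, so the next refill targets the second half and leaves the recently used line in place.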

Tag and Data Instruction Cache

The tag and data caches are organized in similar structures. Each way has its own SRAM module, and each read request is forwarded concurrently to all the implemented ways. When a new memory line arrives, the way to update is selected by the pseudo-LRU.

A line is set as valid when a memory line from the main memory is stored in the given way. Each memory line stored in the Instruction Cache is associated with a validity bit, called line_valid. Note the cut-through on the validity check: if the incoming memory line maps to the same set index as the current request, the incoming memory line is also selected, bypassing the Instruction Cache.

if ( tc_valid_update_cache && way_lru == way )

line_valid[tc_addr_update.index] <= 1;

if ( tc_valid_update_cache && way_lru == way && tc_addr_update.index == icache_address_selected.index )

line_valid_selected[way] <= 1; // cut-through

else

line_valid_selected[way] <= line_valid[icache_address_selected.index] & instruction_valid;

Hit/miss detection

A cache hit occurs when the line is valid and the tags match. Otherwise, the control unit issues a miss request to the memory through the Thread Controller interface.

The following code refers to the hit/miss detection logic.

for ( way = 0; way < `ICACHE_WAY; way++ )

assign hit_miss[way] = tag_read_data[way] == stage1_icache_address.tag && line_valid_selected[way];

On a miss, the PC is restored (by asserting the stage1_miss[thread_id] signal) and a memory request is dispatched using the current PC value as the address.

for ( thread_id = 0; thread_id < `THREAD_NUMB; thread_id++ )

assign stage1_miss[thread_id] = ( stage1_thread_scheduled_id == thread_id ) ? ~|hit_miss & stage1_instruction_valid : 1'b0;

Output logic

The memory request caused by a miss is dispatched only if all of the following conditions are true:

- the current instruction is valid;

- there is no rollback on the scheduled thread;

- the hit/miss logic detected a miss on the current thread request.

if_cache_miss = stage1_instruction_valid & ~rollback_valid[stage1_thread_scheduled_id] & stage1_miss[stage1_thread_scheduled_id];

In case of a hit, the instruction is propagated to the Decode stage, and the valid is asserted only if the following conditions are true:

- the current instruction is valid,

- there is no rollback on the scheduled thread,

- the hit/miss logic detected a cache hit for the currently scheduled thread.

if_valid = stage1_instruction_valid & ~rollback_valid[stage1_thread_scheduled_id] & ~stage1_miss[stage1_thread_scheduled_id];
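The hit/miss detection and the two output conditions can be combined into one Python model of the second fetch stage, seen from the currently scheduled thread. The function name and argument names are hypothetical. Tags are plain integers, and there is one validity flag per way.

```python
def fetch_stage1(tag_read_data, line_valid_selected, request_tag,
                 instruction_valid, rollback_valid):
    """Hit/miss detection and output valids for the currently scheduled thread."""
    # hit_miss[way]: the tags match and the line is valid
    hit_miss = [tag == request_tag and valid
                for tag, valid in zip(tag_read_data, line_valid_selected)]
    # stage1_miss: no way hit while a valid instruction was requested
    stage1_miss = not any(hit_miss) and instruction_valid
    if_cache_miss = instruction_valid and not rollback_valid and stage1_miss
    if_valid = instruction_valid and not rollback_valid and not stage1_miss
    return hit_miss, if_cache_miss, if_valid
```

A matching tag on an invalid line still counts as a miss, and a pending rollback suppresses both the memory request and the instruction valid.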

Decode stage

The Decode stage decodes an instruction fetched by the Instruction Fetch module and produces the control signals for the datapath. The output signal dec_instr helps the execution and control modules handle incoming instructions and is propagated through each pipeline stage. Instruction types are described in the ISA section.

The goal of this stage is to fill all the fields of the dec_instr signal using the fetched instruction if_inst_scheduled. The if_inst_scheduled signal is composed of an opcode and a body. The instruction body is a union of the following formats:

typedef union packed {

RR_instruction_body_t RR_body;

RI_instruction_body_t RI_body;

MVI_instruction_body_t MVI_body;

MEM_instruction_body_t MEM_body;

MPOLI_instruction_body_t MPOLI_body;

JBA_instruction_body_t JBA_body;

JRA_instruction_body_t JRA_body;

CTR_instruction_body_t CTR_body;

} instruction_body_t;

A combinational switch-case construct (on the type field of the instruction) fills the dec_instr fields by reading the opcode and the other instruction bits. The instruction types are:

- RR (Register to Register) has a destination register and two source registers.

- RI (Register Immediate) has a destination register, one source register and an immediate encoded in the instruction word.

- MVI (Move Immediate) has a destination register and a 16-bit immediate.

- MEM (Memory Instruction) has a destination/source field. In a load, the first register is the destination register. In a store, the first register contains the value to store. In both cases, the second source register contains the base address and the immediate is encoded in the instruction. The sum of the base address and the immediate gives the effective memory address.

- JBA (Jump Base Address) handles conditional and unconditional jumps. The destination address is computed as the sum of the source register and the immediate (if present).

- CTR (Control instructions).

Instruction scheduler stage

The Instruction Scheduler (often referred to as the Dynamic Scheduler) schedules the active threads in round-robin order, checking for data and structural hazards. This module allocates a scoreboard for each thread. Whenever an instruction is scheduled, the scoreboard marks its destination register as busy by setting a bit. If another instruction then requires the same register, it raises a RAW or WAW hazard. Conversely, when an instruction reaches the Writeback module and writes the computed outcome to its destination register, the corresponding scoreboard bit is cleared, and the register is free to use again.

Fetched instructions are stored in FIFOs in the Instruction Buffer stage, one per thread. The Dynamic Scheduler checks for data hazards and determines which thread can be scheduled to the Operand Fetch.

This component also checks FPU structural hazards. The FP pipe has a single output towards the Writeback unit. Its operators do not conflict with each other, but two different operations might terminate at the same time and collide on this output, causing a structural hazard. At this stage, only data hazards and this FPU structural hazard are checked; the other structural hazards are resolved on the fly in the Writeback stage.

The Dynamic Scheduler relies on a light scoreboarding system, one scoreboard per thread. Based on the scoreboard and on the fetched instruction, the Dynamic Scheduler determines which threads are eligible for scheduling, and round-robin arbitration selects one of them. A thread is eligible if its can_issue bit is high. These bits form a vector (one bit per thread) and are assigned as follows:

assign can_issue[thread_id] = ib_instructions_valid[thread_id] && !( hazard_raw || hazard_waw ) && ( ~( |wb_fifo_full ) ) && ~rb_valid[thread_id] && can_issue_fp && can_issue_spm && release_val[thread_id] && sync_detect[thread_id] && ~dsu_stop_issue[thread_id];

A round-robin arbiter evaluates all the can_issue bits and selects a thread from the eligible pool.

Next, the Dynamic Scheduler forwards the selected instruction and the related thread information to the Operand Fetch, then updates the scoreboard of the issued thread. When the thread is scheduled, the scoreboard_set_bitmap is updated with the destination register: a bit is set to track the destination register used by the current operation. From this moment on, the register is busy, and any instruction that uses it raises a data hazard:

assign hazard_raw = |( ( source_mask_0 | source_mask_1 ) & scoreboard );

assign hazard_waw = |( destination_mask & scoreboard );

Dually, the scoreboard_clear_bitmap tracks all registers released by the Writeback stage. An operation in Writeback releases its destination register.

Finally, when a rollback occurs, the Dynamic Scheduler flushes the FIFO of the corresponding thread, and restores its scoreboard to its previous state.
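The set/clear/check cycle of a single thread's scoreboard can be sketched in Python as a behavioral model. The class name is hypothetical, and the model uses one register index space for simplicity, ignoring the distinction between the scalar and vector register files.

```python
class ThreadScoreboard:
    """Hypothetical per-thread scoreboard: bit r set means register r awaits writeback."""

    def __init__(self):
        self.bits = 0

    @staticmethod
    def _mask(registers):
        mask = 0
        for reg in registers:
            mask |= 1 << reg
        return mask

    def hazards(self, sources, destination):
        """Return (hazard_raw, hazard_waw) for an instruction about to issue."""
        hazard_raw = (self._mask(sources) & self.bits) != 0
        hazard_waw = (self._mask([destination]) & self.bits) != 0
        return hazard_raw, hazard_waw

    def issue(self, destination):        # scoreboard_set_bitmap
        self.bits |= 1 << destination

    def writeback(self, destination):    # scoreboard_clear_bitmap
        self.bits &= ~(1 << destination)

    def rollback(self, previous_bits):   # restore the state passed by the Rollback Handler
        self.bits = previous_bits
```

After register 5 is issued as a destination, a reader of register 5 sees a RAW hazard and a writer sees a WAW hazard. Both hazards disappear once the Writeback clears the bit.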

Operand fetch stage

The Operand Fetch stage builds the operands for the Execution Pipeline. As noted earlier, the NPU core supports SIMD operations, so the core has two register files: a scalar register file (SRF) and a vector register file (VRF). Each SRF register is `REGISTER_SIZE bits wide (32 bits by default). A VRF register is wider and holds one scalar register for each hardware lane (`REGISTER_SIZE x `HW_LANE, by default 32 bits x 16 HW lanes = 512 bits). The SRF and VRF have the same number of registers (`REGISTER_NUMBER, defined in npu_define.sv, 64 registers each by default). Both register files are allocated in SRAM memories with two read ports and one write port each.

The scheduled instruction drives the register file read ports, and the data read from the register files are forwarded to the second stage, which builds operands 0 and 1. For a memory access, operand 0 holds the effective memory address, computed by adding the base address and the immediate offset. In all other cases, it contains a register value.

if ( next_issue_inst_scheduled.is_memory_access )

opf_fecthed_op0_buff <= {`HW_LANE{rd_out0_scalar + scal_reg_size_t'( next_issue_inst_scheduled.immediate )}};

else

opf_fecthed_op0_buff <= {`HW_LANE{rd_out0_scalar}};

Immediate values are carried by operand 1. When the current instruction has an immediate, the immediate is replicated on each vector element of operand 1. In all other cases, operand 1 contains a register value.

if ( next_issue_inst_scheduled.is_source1_immediate )

opf_fecthed_op1_buff <= {`HW_LANE{next_issue_inst_scheduled.immediate}};

else

opf_fecthed_op1_buff <= {`HW_LANE{rd_out1_scalar}};
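The Python model below covers only the scalar path shown above (rd_out0_scalar and rd_out1_scalar). It uses the default `HW_LANE and `REGISTER_SIZE values. The function name is hypothetical. Masking the immediate to the register width is an assumption that stands in for the scal_reg_size_t cast.

```python
HW_LANE = 16          # default number of hardware lanes
REGISTER_SIZE = 32    # default register width
REG_MASK = (1 << REGISTER_SIZE) - 1


def build_operands(rd_out0_scalar, rd_out1_scalar, immediate,
                   is_memory_access, is_source1_immediate):
    """Scalar case only: returns (op0, op1) as lists with one element per lane."""
    if is_memory_access:
        # effective address = base register + immediate offset, wrapped to the register size
        op0_lane = (rd_out0_scalar + immediate) & REG_MASK
    else:
        op0_lane = rd_out0_scalar
    op1_lane = (immediate & REG_MASK) if is_source1_immediate else rd_out1_scalar
    # {`HW_LANE{...}}: the same value is replicated on every lane
    return [op0_lane] * HW_LANE, [op1_lane] * HW_LANE
```

For a memory access with base 0x1000 and offset -4, every lane of operand 0 carries the effective address 0xFFC.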

The PC is stored in a special register in the Instruction Fetch module, hence it is not handled by the scalar register file. Such a register is forwarded in this stage along with the decoded instruction and used in the operand composition when it is needed.

The register file write port stores the output data from the Writeback stage. For vector operations, wb_result_hw_lane_mask selects which lanes the current operation affects.

for ( lane_id = 0; lane_id < `HW_LANE; lane_id ++ ) begin : LANE_WRITE_EN

// Byte enables of this lane: the write byte mask, gated by the lane mask bit and the vector write enable

assign write_en_byte[lane_id] = wb_result.wb_result_write_byte_enable & {( `BYTE_PER_REGISTER ){wb_result.wb_result_hw_lane_mask[lane_id] & wr_en_vector}};

end

Each thread has its own register file. This is achieved by allocating a larger SRAM (`REGISTER_NUMBER x `THREAD_NUMB entries).
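The Python sketch below models the per-lane byte enables and the shared register-file SRAM. Two simplifying assumptions apply. First, write_byte_enable is treated as a single `BYTE_PER_REGISTER-bit mask shared by all lanes. Second, `register_file_row` uses a hypothetical thread-major layout for the `REGISTER_NUMBER x `THREAD_NUMB SRAM; the actual address mapping is not given in the text.

```python
def lane_write_enables(write_byte_enable, hw_lane_mask, wr_en_vector,
                       hw_lane=16, bytes_per_register=4):
    """Per-lane byte enables: write_byte_enable & {BYTE_PER_REGISTER{lane_bit & wr_en}}."""
    all_bytes = (1 << bytes_per_register) - 1
    enables = []
    for lane_id in range(hw_lane):
        lane_on = bool((hw_lane_mask >> lane_id) & 1) and wr_en_vector
        enables.append(write_byte_enable & all_bytes if lane_on else 0)
    return enables


def register_file_row(thread_id, register, register_number=64):
    """Hypothetical row index in an SRAM of REGISTER_NUMBER x THREAD_NUMB rows."""
    return thread_id * register_number + register
```

With a lane mask of 0b0101 on a 4-lane configuration, only lanes 0 and 2 receive the full byte mask. Under this layout, register 5 of thread 2 lives at row 133.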

When a masked instruction is issued, the special register `MASK_REG (scalar register s60 by default) is stored in opf_fecthed_mask. When source 1 is an immediate, its value is replicated on each vector element. Memory access and branch operations require a base address, and in both cases the Decode module maps the base address to source 0.

Integer Arithmetic & Logic unit

The Integer Arithmetic & Logic unit is the main execution stage. It handles jumps and arithmetic and logic operations, and it also manages control register accesses and moves. For further details about the supported operations, please refer to the ISA page.

To dispatch the current instruction to the right operator, this module relies on the selection fields of the decoded instruction. For example:

assign is_jmpsr = opf_inst_scheduled.pipe_sel == PIPE_BRANCH & opf_inst_scheduled.is_int & opf_inst_scheduled.is_branch;

Vector operations are executed in parallel across all the HW lanes. All lanes perform the same operation using the same control logic, and the final vector result signal (called vec_result) is composed by merging the scalar results from the different lanes.

When an instruction performs a scalar operation, only the first lane of vec_result contains a useful result. Output logic selects the result from the right operator based on the issued instruction, as shown in the following code:

if ( is_compare )

int_result[0] <= cmp_result;

else if ( is_shuffle )

int_result <= shuffle_result;

else

int_result <= vec_result;

Control registers

The Control Register unit holds status and performance information. Some of this information is shared among all threads (such as the Core ID or the global performance counter), while some is thread-specific (such as the Thread ID and the Thread PC):

- TILE_ID: shared information, returns the current tile identifier.

- CORE_ID: shared information, returns the current core identifier.

- THREAD_ID: private information, returns the thread identifier.

- GLOBAL_ID: private information, returns the Tile ID, Core ID and Thread ID merged together.

- GCOUNTER_LOW: shared information, returns the low part of the global performance counter.

- GCOUNTER_HIGH: shared information, returns the high part of the global performance counter.

- THREAD_EN: shared information, returns the thread-active bitmap mask, one bit per thread.

- MISS_DATA: shared information, returns the number of data misses so far.

- MISS_INSTR: shared information, returns the number of instruction misses so far.

- PC: private information, returns the current value of the PC.

- TRAP_REASON: private information, returns the trap reason.

- THREAD_STATUS: private information, returns the current thread status.

- ARGC: shared information, number of arguments of the main function.

- ARGV_ID: shared information, the address of ARGV.

- THREAD_NUMB_ID: number of hardware threads currently implemented.

- THREAD_MISS_CC_ID: private information, clock cycles during which the thread is idle due to memory operations.

- KERNEL_WORK: private information, kernel cycles.

- CPU_CTRL_REG_ID: CPU mode register.

- PWR_MDL_REG_ID: power model performance counter.

- UNCOHERENCE_MAP_ID: stores information about the non-coherent memory regions.

- DEBUG_BASE_ADDR: debug registers base address, returns the value of the first debug register.

The Control Register unit has a direct interface with the Host Interface, which makes this information accessible on the host side.

Control registers are handled and accessed (on the core side) by the Integer Arithmetic & Logic unit.

The user can specify non-coherent memory regions by writing the UNCOHERENCE_MAP_ID control register. This register maps a table that tracks the non-coherent regions, whose entries are declared as follows:

typedef struct packed {

logic [`UNCOHERENCE_MAP_BITS-1:0] start_addr;

logic [`UNCOHERENCE_MAP_BITS-1:0] end_addr;

logic valid;

} uncoherent_mmap;

The user specifies the start and end addresses of the non-coherent region. Each address is `UNCOHERENCE_MAP_BITS wide (10 bits, as defined in the npu_coherence_defines.sv file), while the least significant bit states whether the entry is valid. The following control logic updates the non-coherent memory table whenever a write_cr occurs:

genvar region_id;
generate
    for (region_id = 0; region_id < `UNCOHERENCE_MAP_SIZE; region_id++) begin : UNCOHERENT_AREA_LOOKUP
        always_ff @ ( posedge clk, posedge reset )
            if ( reset ) begin
                uncoherent_memory_areas[region_id].valid <= 1'b0;
            end else begin
                if ( is_cr_write & write_mmap & opf_fetched_op0[0][$bits(uncoherent_mmap) +: $clog2(`UNCOHERENCE_MAP_SIZE)] == region_id ) begin
                    uncoherent_memory_areas[region_id] <= opf_fetched_op0[0][$bits(uncoherent_mmap)-1 : 0];
                end
            end

        assign uncoherent_area_hits[region_id] = address_lookup >= uncoherent_memory_areas[region_id].start_addr &&
                                                 address_lookup <= uncoherent_memory_areas[region_id].end_addr &&
                                                 uncoherent_memory_areas[region_id].valid;
    end
endgenerate

The above logic also checks whether an issued memory transaction hits one of the non-coherent regions. Whenever this occurs, the module propagates this information to the Operand Fetch through the uncoherent_area_hit signal.
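The write path and the lookup above can be modelled in a few lines of Python. This is a sketch, not the RTL, and it makes three assumptions:
- `UNCOHERENCE_MAP_SIZE` is set to a hypothetical value of 4;
- `address_lookup` is already the 10-bit value compared against the table;
- `pack_write` is an illustrative helper that builds the operand written to UNCOHERENCE_MAP_ID.

The operand layout mirrors the SystemVerilog slices: the region index sits just above the packed struct. Within the struct, start_addr is in the most significant position and valid is bit 0.

```python
MAP_BITS = 10                    # `UNCOHERENCE_MAP_BITS (10 bits, per the text)
MAP_SIZE = 4                     # `UNCOHERENCE_MAP_SIZE: hypothetical value
ENTRY_BITS = 2 * MAP_BITS + 1    # $bits(uncoherent_mmap): start_addr, end_addr, valid
INDEX_BITS = (MAP_SIZE - 1).bit_length()  # $clog2(MAP_SIZE) for power-of-two sizes
ADDR_MASK = (1 << MAP_BITS) - 1


def pack_write(region_id, start, end, valid=True):
    """Hypothetical helper: operand {region_id, start_addr, end_addr, valid}."""
    entry = ((start & ADDR_MASK) << (MAP_BITS + 1)) | ((end & ADDR_MASK) << 1) | int(valid)
    return (region_id << ENTRY_BITS) | entry


class UncoherenceMap:
    def __init__(self):
        # After reset every entry is invalid
        self.entries = [(0, 0, False) for _ in range(MAP_SIZE)]

    def write_cr(self, op0):
        """Decode op0 like the generate loop: index above the struct, struct below."""
        region_id = (op0 >> ENTRY_BITS) & ((1 << INDEX_BITS) - 1)
        start = (op0 >> (MAP_BITS + 1)) & ADDR_MASK
        end = (op0 >> 1) & ADDR_MASK
        valid = bool(op0 & 1)
        self.entries[region_id] = (start, end, valid)

    def hits(self, address_lookup):
        """uncoherent_area_hits: one flag per region, inclusive bounds, valid only."""
        return [valid and start <= address_lookup <= end
                for start, end, valid in self.entries]
```

For example, after `write_cr(pack_write(2, 0x100, 0x1FF))` a lookup of 0x150 hits only region 2. Writing the same entry back with `valid=False` disables it again.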

Scratchpad unit

This unit is described in the dedicated scratchpad page.

Load/Store unit

The Load/Store Unit (LSU) is described in the dedicated load/store section.

Floating point unit

The FPU executes multistage floating-point instructions. It supports single-precision FP operations according to the IEEE 754-2008 standard, with operators auto-generated using the open-source FloPoCo library. The FP hardware operators instantiated in the FPU are:

- FP Add/Sub

- FP Mult

- FP Div

- Converters: Int to FP, FP to Int

Each operator is fully pipelined and has its own latency. The FPU has lightweight control logic that performs no structural hazard checking on the Writeback, since this task is delegated to the Instruction Scheduler. It does, however, delay the instruction information according to the operation latency, so that the result reaches the Writeback module together with its related information.
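The "delay the instruction information along with the result" behaviour amounts to a shift register as deep as the operator latency. The Python sketch below is a behavioural model, not the FloPoCo-generated hardware. It assumes that one operation may enter per cycle and that the metadata (for example the thread ID) is an opaque value carried alongside the operands.

```python
class PipelinedOperator:
    """Fully pipelined operator: one op may enter per cycle, exits `latency` cycles later."""

    def __init__(self, latency, fn):
        self.fn = fn
        self.stages = [None] * latency  # stage 0 is the input side

    def tick(self, op=None):
        """Advance one clock: push (meta, a, b) or a bubble; return what leaves the last stage."""
        out = self.stages[-1]
        entry = None
        if op is not None:
            meta, a, b = op
            # The metadata travels with the result, so the Writeback gets both together
            entry = (meta, self.fn(a, b))
        self.stages = [entry] + self.stages[:-1]
        return out
```

With a 3-cycle adder, operations issued on cycles 0 and 1 come out on cycles 3 and 4, still paired with their own thread IDs.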

Barrier unit

This unit is described in the dedicated synchronization section.

Branch unit

The Branch Control (BC) unit handles conditional and unconditional jumps and restores the scoreboards when a jump is taken. It signals to the Rollback Handler whether a jump has to be taken. The base address or condition is stored in opf_fetched_op0[0], while the immediate is stored in opf_fetched_op1[0].

The NPU core supports two jump instruction formats:

- JRA: Jump Relative Address is an unconditional jump instruction. It takes an immediate, and the core always jumps to PC + immediate. E.g. jmp -12: BC jumps to PC - 12, i.e. 3 instructions back.

- JBA: Jump Base Address can be a conditional or unconditional jump, and takes a register and an immediate as input. In case of a conditional jump, the input register holds the jump condition; if the condition is satisfied, BC jumps to PC + immediate. E.g. branch_eqz s4, -12: BC jumps to PC - 12 if register s4 is equal to zero.

In case of an unconditional jump, the input register holds the effective target address. E.g. jmp s4: BC jumps to the memory location stored in s4.
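The three jump examples above can be summarised as a small target-computation function. This Python sketch makes the following assumptions:
- `next_pc` and the `kind` strings are hypothetical names;
- instructions are 4 bytes wide, consistent with "jmp -12 is 3 instructions back";
- a not-taken branch falls through to PC + 4.

```python
INSTR_BYTES = 4  # assumption: fixed 4-byte instructions


def next_pc(pc, kind, imm=0, reg_value=0):
    """Return (taken, target) for the jump forms described above.

    kind: "jra"     -> jmp imm             (PC-relative, unconditional)
          "jba_eqz" -> branch_eqz reg, imm (PC-relative if reg == 0)
          "jba_reg" -> jmp reg             (absolute target held in reg)
    """
    if kind == "jra":
        return True, pc + imm
    if kind == "jba_eqz":
        taken = reg_value == 0
        return taken, (pc + imm) if taken else pc + INSTR_BYTES
    if kind == "jba_reg":
        return True, reg_value
    raise ValueError(f"unknown jump kind: {kind}")
```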

The following signals notify the Rollback Handler when a branch is taken:

assign bc_rollback_enable = jump & opf_inst_scheduled.is_branch & opf_valid;
assign bc_rollback_valid  = opf_valid && opf_inst_scheduled.pipe_sel == PIPE_BRANCH && ~bc_rollback_enable;

The bc_rollback_enable signal is asserted only when a jump is taken. The bc_rollback_valid signal is asserted when an operation in the branch pipe is executed and no rollback is requested (~bc_rollback_enable).
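A Boolean transcription of the two assignments makes their three possible outcomes explicit. The Python sketch below treats every input as a single bool. `pipe_is_branch` is a hypothetical stand-in for `opf_inst_scheduled.pipe_sel == PIPE_BRANCH`.

```python
def branch_signals(opf_valid, is_branch, pipe_is_branch, jump):
    """Boolean model of the bc_rollback_enable / bc_rollback_valid assigns."""
    bc_rollback_enable = jump and is_branch and opf_valid
    bc_rollback_valid = opf_valid and pipe_is_branch and not bc_rollback_enable
    return bc_rollback_enable, bc_rollback_valid
```

The outcomes are:
- taken branch: (True, False);
- not-taken branch: (False, True);
- invalid slot: (False, False).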

Writeback stage

The Writeback stage forwards the results coming from the execution pipes to the register files. Execution pipelines have different lengths and latencies, so instructions issued in different cycles can reach the Writeback in the same clock cycle. The Writeback module resolves these structural hazards on the fly. Load/store and scratchpad memory operations have a variable latency, known neither at compile time nor at issue time, which can cause unpredictable structural hazards at this stage.

Writeback Request FIFOs

The Writeback module resolves these collisions on the fly using a set of dedicated queues. Each execution module stores its incoming results in its own queue. An arbiter then selects one result in round-robin fashion and forwards it to its destination register. Each queue stores all the information needed for a writeback operation, such as the destination register and the write mask.

The following code shows how the FIFO inputs are filled from each execution pipe:

assign input_wb_request[PIPE_FP_ID].pc               = fp_inst_scheduled.pc;
assign input_wb_request[PIPE_FP_ID].writeback_valid  = fp_valid;
assign input_wb_request[PIPE_FP_ID].thread_id        = fp_inst_scheduled.thread_id;
assign input_wb_request[PIPE_FP_ID].writeback_result = fp_result;
...
assign input_wb_request[PIPE_INT_ID].pc               = int_inst_scheduled.pc;
assign input_wb_request[PIPE_INT_ID].writeback_valid  = int_valid;
assign input_wb_request[PIPE_INT_ID].thread_id        = int_inst_scheduled.thread_id;
assign input_wb_request[PIPE_INT_ID].writeback_result = int_result;

Each FIFO raises a pending request whenever it is not empty. All pending requests go to a round-robin (RR) arbiter, which selects one request and forwards it to the Operand Fetch to be completed. The following logic shows the RR arbiter:

assign pending_requests = ~writeback_fifo_empty;

rr_arbiter #(
    .NUM_REQUESTERS( `NUM_EX_PIPE )
) rr_arbiter (
    .clk        ( clk                 ),
    .reset      ( reset               ),
    .request    ( pending_requests    ),
    .update_lru ( 1'b0                ),
    .grant_oh   ( selected_request_oh )
);

oh_to_idx #(
    .NUM_SIGNALS( `NUM_EX_PIPE ),
    .DIRECTION  ( "LSB0"       )
) oh_to_idx (
    .one_hot( selected_request_oh ),
    .index  ( selected_pipe       )
);
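The FIFO-plus-arbiter scheme can be modelled as follows. This Python sketch uses a textbook round-robin policy, in which the search for the next grant starts just after the last granted requester. That policy is an assumption; it does not reproduce the exact `rr_arbiter` module, whose `update_lru` behaviour is not described here. `writeback_drain` is a hypothetical helper that grants one result per cycle.

```python
from collections import deque


class RoundRobinArbiter:
    def __init__(self, n):
        self.n = n
        self.last = n - 1  # so requester 0 has priority on the first grant

    def grant(self, requests):
        """Return the index of the granted requester, or None if nobody requests."""
        for offset in range(1, self.n + 1):
            idx = (self.last + offset) % self.n
            if requests[idx]:
                self.last = idx
                return idx
        return None


def writeback_drain(fifos, cycles):
    """Each cycle, every non-empty FIFO requests; the granted head is written back."""
    arbiter = RoundRobinArbiter(len(fifos))
    written = []
    for _ in range(cycles):
        pending = [len(f) > 0 for f in fifos]  # pending_requests = ~writeback_fifo_empty
        selected = arbiter.grant(pending)
        if selected is not None:
            written.append(fifos[selected].popleft())
    return written
```

With three pipes where pipe 0 holds two results and pipe 2 holds one, the grants alternate (pipe 0, pipe 2, pipe 0). A pipe with a backlog therefore cannot starve the others.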

Note that each writeback_request_fifo has its almost_full threshold reduced by 4. This value is the worst-case distance from the first stage of the Operand Fetch, so the FIFO keeps enough free slots to absorb the operations already in flight and no operation is lost.
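The following Python sketch shows why the threshold must be lowered by the in-flight distance. It simulates a worst case in which the FIFO is never drained. A new operation is issued only if almost_full was low when checked, and it lands in the FIFO `latency` cycles later. The FIFO depth of 8 is a hypothetical value.

```python
def max_occupancy(depth, threshold, latency, cycles=50):
    """Peak FIFO occupancy when the FIFO is never drained (worst case).

    `depth` only documents the FIFO size here; the caller compares the peak against it.
    """
    occupancy, peak = 0, 0
    arrivals = []  # arrival cycle of each in-flight result
    for t in range(cycles):
        almost_full = occupancy >= threshold
        if not almost_full:
            arrivals.append(t + latency)  # issue now, lands `latency` cycles later
        landed = arrivals.count(t)
        arrivals = [a for a in arrivals if a != t]
        occupancy += landed
        peak = max(peak, occupancy)
    return peak
```

With a distance of 4 and depth 8, a threshold of depth - 4 keeps the peak at exactly 8. Using the full depth as the threshold lets four extra results arrive and overflow the FIFO (peak 12).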

Result Composer

Before a result reaches the register files, two tasks are performed for each execution pipe, depending on the kind of result:

- build a byte-grained write mask, so that only the intended bytes are written (e.g. a load_8 operation writes only the first byte, not the whole 4-byte register);

- compose the final result, moving the bytes to the right positions and handling sign extension for 8-bit, 16-bit and 32-bit loads.

The following code shows how the result is composed for a byte load from memory. The control logic checks the address offset and arranges the data accordingly. If the operation requires it, the sign is also extended, as in the second case below:

// LDST unit load operation: byte selection with zero or sign extension
assign word_data_mem[j] = output_wb_request[PIPE_MEM_ID].writeback_result[j][31 : 0];

// Unsigned byte load: select the byte addressed by offset[1:0] and zero-extend it
always_comb
    case ( output_wb_request[PIPE_MEM_ID].result_address.offset[1 : 0] )
        2'b00 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][7 : 0]};
        2'b01 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][15 : 8]};
        2'b10 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][23 : 16]};
        2'b11 : byte_data_mem[j] = {{( `REGISTER_SIZE - 8 ){1'b0}}, word_data_mem[j][31 : 24]};
    endcase

...

// Signed byte load: replicate the MSB of the selected byte into the upper bits
always_comb
    case ( output_wb_request[PIPE_MEM_ID].result_address.offset[1 : 0] )
        2'b00 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][7]} }, word_data_mem[j][7 : 0]};
        2'b01 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][15]} }, word_data_mem[j][15 : 8]};
        2'b10 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][23]} }, word_data_mem[j][23 : 16]};
        2'b11 : byte_data_mem_s[j] = {{( `REGISTER_SIZE - 8 ){word_data_mem[j][31]} }, word_data_mem[j][31 : 24]};
    endcase
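The two case statements compute the same byte selection and differ only in the fill bits. The Python sketch below reproduces this for a single lane, assuming `REGISTER_SIZE` is 32. The name `compose_byte_load` is hypothetical.

```python
REGISTER_SIZE = 32  # assumed value of `REGISTER_SIZE


def compose_byte_load(word, offset, signed):
    """Select the byte addressed by offset[1:0]; zero- or sign-extend it to REGISTER_SIZE."""
    byte = (word >> (8 * (offset & 0b11))) & 0xFF
    if signed and byte & 0x80:
        # Replicate bit 7 into the upper REGISTER_SIZE - 8 bits
        return byte | (((1 << (REGISTER_SIZE - 8)) - 1) << 8)
    return byte
```

For the word 0x80FF7F01:
- offset 2 yields 0xFF as an unsigned load and 0xFFFFFFFF as a signed load;
- offset 1 yields 0x7F in both cases, because its MSB is clear.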

Rollback handler

The Rollback Handler restores the PCs and scoreboards of the thread that issued a rollback. In case of a jump or a trap, the Branch module in the Execution pipeline issues a rollback request to this stage. It passes along the thread ID, the old scoreboard value and the PC to restore.

Furthermore, the Rollback Handler flushes all ongoing operations and the queued requests in the Instruction Buffer stage of the corresponding thread. It uses a clear_bitmap for each thread in the following way:

- each time a rollback is issued, the clear_bitmap is reset;

if ( rollback_valid[thread_id] ) clear_bitmap[thread_id] <= scoreboard_t'( 1'b0 );

- each time an operation is issued, a dedicated structure records the scoreboard values set over the last 4 cycles. In case of a rollback, the scoreboard is restored using these records. An issued instruction can no longer be flushed after 4 clock cycles. A conditional branch is resolved 3 cycles after its issue; if the jump is taken, all the instructions issued in the meantime must be flushed and their scoreboard bits cleared.

Consequently, an instruction issued more than 4 clock cycles earlier must not be affected by the pipeline flush. This structure tracks the issued instructions and, after 4 clock cycles, cleans the clear bitmap accordingly.

always_ff @ ( posedge clk, posedge reset ) begin : ROLLBACK_INSTRUCTION_TRACKER
    if ( reset ) begin
        scoreboard_set_issue_t0 <= scoreboard_t'( 1'b0 );
        scoreboard_set_issue_t1 <= scoreboard_t'( 1'b0 );
        scoreboard_set_issue_t2 <= scoreboard_t'( 1'b0 );
        scoreboard_set_issue_t3 <= scoreboard_t'( 1'b0 ); // the oldest stage is also cleared on reset
    end else if ( enable ) begin
        if ( rollback_valid[thread_id] ) begin
            // Rollback: drop the issue records of the still-flushable window
            scoreboard_set_issue_t0 <= scoreboard_t'( 1'b0 );
            scoreboard_set_issue_t1 <= scoreboard_t'( 1'b0 );
            scoreboard_set_issue_t2 <= scoreboard_t'( 1'b0 );
        end else begin
            // Shift the issue history by one cycle (t0 holds the most recent issue)
            scoreboard_set_issue_t0 <= scoreboard_set_issue;
            scoreboard_set_issue_t1 <= scoreboard_set_issue_t0;
            scoreboard_set_issue_t2 <= scoreboard_set_issue_t1;
            scoreboard_set_issue_t3 <= scoreboard_set_issue_t2;
        end
    end
end

- when a rollback is issued, the scoreboard_temp mask records all the operations issued before the rollback, except the jump itself; the Instruction Scheduler uses this mask to undo all the instructions scheduled after the rollback.
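The per-thread tracker above is a 4-deep shift register of scoreboard bit masks. The Python sketch below follows the listing and ignores the `enable` input. Combining the three youngest entries into the set of bits still eligible for flushing (`flushable_bits`) is an assumption made for illustration; this section does not show exactly how the records are consumed.

```python
class RollbackTracker:
    """Per-thread model of ROLLBACK_INSTRUCTION_TRACKER; t[0] is the newest entry."""

    def __init__(self):
        self.t = [0, 0, 0, 0]  # scoreboard_set_issue_t0 .. t3

    def clock(self, scoreboard_set_issue, rollback):
        if rollback:
            self.t[0:3] = [0, 0, 0]  # as in the listing, t3 is not touched on rollback
        else:
            self.t = [scoreboard_set_issue] + self.t[:3]

    def flushable_bits(self):
        # Assumption: bits set by instructions still inside the flush window
        return self.t[0] | self.t[1] | self.t[2]
```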

Note that all threads are completely independent.

Thread controller

The Thread Controller handles the pool of eligible threads. It blocks threads that cannot proceed due to cache misses or hazards and, dually, wakes them up when the blocking conditions no longer hold.

assign tc_if_thread_en       = ~wait_thread_mask & thread_en & ~ib_fifo_full;
assign wait_thread_mask_next = ( wait_thread_mask | thread_miss_sleep ) & ~thread_mem_wakeup;
assign thread_mem_wakeup     = // 1: thread[i] activated, 0: thread[i] state unmodified
assign thread_miss_sleep     = // 1: thread[i] asleep, 0: thread[i] state unmodified

Note that a load miss blocks the corresponding thread through the ib_fifo_full signal until the data is retrieved from main memory.
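The two fully specified assignments are plain bitmask operations, one bit per thread. The Python sketch below mirrors them with integers used as bit vectors. `NUM_THREADS = 4` is a hypothetical configuration, and the helper names are illustrative.

```python
NUM_THREADS = 4                 # hypothetical; must be a power of two
ALL = (1 << NUM_THREADS) - 1    # keeps ~ operations within NUM_THREADS bits


def eligible_threads(wait_thread_mask, thread_en, ib_fifo_full):
    """tc_if_thread_en = ~wait_thread_mask & thread_en & ~ib_fifo_full"""
    return ~wait_thread_mask & thread_en & ~ib_fifo_full & ALL


def next_wait_mask(wait_thread_mask, thread_miss_sleep, thread_mem_wakeup):
    """wait_thread_mask_next = (wait_thread_mask | thread_miss_sleep) & ~thread_mem_wakeup"""
    return (wait_thread_mask | thread_miss_sleep) & ~thread_mem_wakeup & ALL
```

Because the wake-up mask is applied last, a thread that receives both a sleep and a wake-up in the same cycle ends up awake.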

Furthermore, the Thread Controller interfaces the Instruction Cache with the main memory. The architecture supports only one level of caching for instructions, so when an instruction cache miss occurs, the data is retrieved directly from main memory through the network-on-chip. An instruction miss is handled by a simple 3-state FSM:
- first, it waits for a cache miss request;
- then it saves all the needed information and waits for the memory response;
- finally, it sends a "wake-up" signal to the core and waits for the next memory request.
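The three steps above map onto a small state machine. In the Python sketch below, the state names and the one-cycle wake-up pulse are assumptions for illustration, not taken from the RTL.

```python
from enum import Enum


class MissState(Enum):
    IDLE = 0         # waiting for an instruction-cache miss request
    WAIT_MEMORY = 1  # miss information saved, waiting for the memory response
    WAKEUP = 2       # response received, wake-up is signalled to the core


def miss_fsm_step(state, miss_request, mem_response):
    """One clock of the miss handler; returns (next_state, wakeup)."""
    if state is MissState.IDLE:
        return (MissState.WAIT_MEMORY if miss_request else MissState.IDLE), False
    if state is MissState.WAIT_MEMORY:
        return (MissState.WAKEUP if mem_response else MissState.WAIT_MEMORY), False
    # WAKEUP: assert wake-up for one cycle, then wait for the next request
    return MissState.IDLE, True
```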

Note that during the memory wait time, two further events may occur:
1. another cache miss may be raised;
2. another thread may raise a cache miss for the same address.

A dedicated queue handles both cases: it queues the cache misses and merges requests for the same instruction address coming from different threads.
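The merging behaviour can be sketched as an ordered map from address to the set of waiting threads. This Python model assumes that addresses are already aligned to a cache line. The `InstrMissQueue` class and its methods are hypothetical.

```python
from collections import OrderedDict


class InstrMissQueue:
    """Pending instruction misses in arrival order; same-address misses are merged."""

    def __init__(self):
        self.pending = OrderedDict()  # line address -> set of waiting thread ids

    def miss(self, thread_id, address):
        """Record a miss; return True only if a new memory request is needed."""
        if address in self.pending:
            self.pending[address].add(thread_id)  # merge with the in-flight request
            return False
        self.pending[address] = {thread_id}
        return True

    def next_request(self):
        """Oldest address still waiting for memory, or None."""
        return next(iter(self.pending), None)

    def response(self, address):
        """Memory returned `address`: drop the entry and return the threads to wake."""
        return self.pending.pop(address, set())
```

Two threads missing on the same address generate a single memory request, and both are woken by the one response.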

Finally, the Thread Controller provides direct access to core performance counters and special registers, such as PC.