Single Core Cache Controller


Latest revision as of 13:54, 19 June 2019

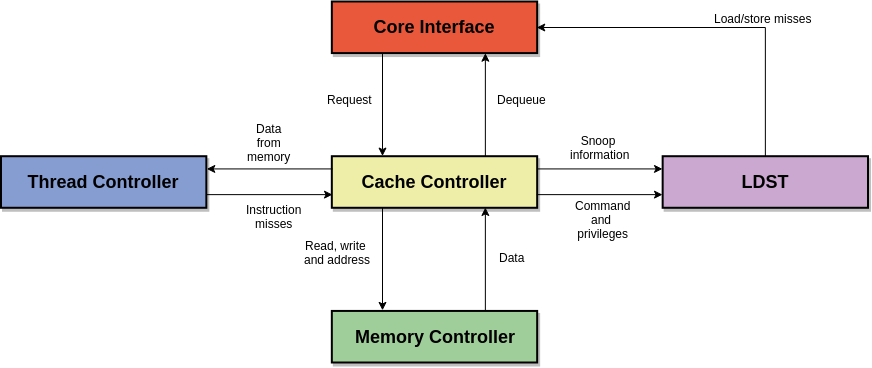

This page describes the L1 cache controller (CC) located in the NPU core and directly connected to the LDST unit, Core Interface (CI), Thread Controller (TC), Memory Controller (MeC) and Instruction Cache (IC). The Single Core CC handles requests from the core (load/store miss, instruction miss, flush, evict, data invalidate) and serializes them, scheduling pending requests with a fixed priority.

This lightweight CC handles one transaction at a time, hence it needs no Miss Status Holding Register (MSHR).


Interface

This section shows the interface of the CC to/from all other units.

To/from Core interface

CI buffers memory requests from the LDST unit, providing a separate queue for each type of request. Its interface is directly connected to the CC, which schedules one request at a time. CI also decouples the highly parallel LDST unit from the much slower CC: the cache controller executes one request at a time, while the LDST unit might issue multiple requests concurrently, up to the number of threads currently allocated in the core. These requests are scheduled by a fixed-priority arbiter, which selects a pending request and dequeues it once the CC forwards the corresponding transaction to the memory.

The following lines of code define the interface to/from the core interface:

~
output logic cc_dequeue_store_request,
~
input logic ci_store_request_valid,
input thread_id_t ci_store_request_thread_id,
input dcache_address_t ci_store_request_address,
input logic ci_store_request_coherent,
~
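The valid/dequeue pairing of a CI queue can be pictured with a small Python behavioral model. This is a sketch under assumptions, not the RTL: the class `CIQueue` and its methods are hypothetical, and the queue is modeled as an unbounded FIFO whose head is presented to the CC while `valid` is high.

```python
from collections import deque


class CIQueue:
    """Behavioral sketch of one CI request queue (hypothetical model).

    `valid` mirrors ci_store_request_valid; `clock(dequeue=True)` mirrors
    the CC pulsing cc_dequeue_store_request for one cycle.
    """

    def __init__(self):
        self._fifo = deque()

    def enqueue(self, thread_id, address):
        self._fifo.append((thread_id, address))

    @property
    def valid(self):
        # High while at least one request is pending.
        return len(self._fifo) > 0

    @property
    def head(self):
        # Mirrors ci_store_request_thread_id / ci_store_request_address.
        return self._fifo[0] if self._fifo else None

    def clock(self, dequeue):
        # One clock edge: pop the head only when the CC asserts dequeue.
        if dequeue and self._fifo:
            self._fifo.popleft()


q = CIQueue()
q.enqueue(3, 0x1000)
q.enqueue(5, 0x2000)
q.clock(dequeue=False)   # CC still busy: the request stays pending
first = q.head
q.clock(dequeue=True)    # CC forwarded the transaction: pop it
second = q.head
```

Because the CC pops an entry only after forwarding its transaction, the LDST can keep enqueuing requests from many threads while the CC drains them one at a time.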

To/from LDST

As described in the load/store unit page, the Load/Store unit has no information about the coherence protocol in use, although it keeps track of the privileges on all cached addresses. Each stored cache line has two privileges, can read and can write, which are used to determine a cache hit or miss and are updated by the Cache Controller.
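As an illustration of how the two privileges can drive the hit/miss decision, the following Python sketch assumes that a LOAD hits when the tag matches and can read is set, while a STORE hits only when can write is set. The names `Privileges` and `is_hit` are hypothetical; the actual check is implemented in the LDST unit.

```python
from dataclasses import dataclass


@dataclass
class Privileges:
    can_read: bool = False
    can_write: bool = False


def is_hit(op, tag_matches, priv):
    """Hypothetical hit check driven by the per-line privileges."""
    if not tag_matches:
        return False              # different line in this way: miss
    if op == "LOAD":
        return priv.can_read      # reading needs the can read privilege
    if op == "STORE":
        return priv.can_write     # writing needs the can write privilege
    raise ValueError(f"unsupported op {op!r}")


shared = Privileges(can_read=True, can_write=False)
load_hit = is_hit("LOAD", True, shared)
store_hit = is_hit("STORE", True, shared)
```

With read-only privileges the LOAD hits while the STORE misses and has to be forwarded to the CC.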

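Memory requests from the LDST unit:

- LOAD (load miss)
- STORE (store miss)
- REPLACEMENT (eviction of a replaced line)
- FLUSH
- DINV (data invalidate)
- INSTR (instruction miss)
- IOM (IO Map request)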

The control FSM in the CC handles all the operations listed above. On the coherence side, as said before, the LDST has only an abstract view of the implemented protocol: the CC grants it READ or WRITE privileges, while the actual coherence protocol stays transparent to the LDST unit.

The following lines of code define the interface to/from the load/store unit:

~
output logic cc_update_ldst_valid,
output dcache_way_idx_t cc_update_ldst_way,
output dcache_address_t cc_update_ldst_address,
output dcache_privileges_t cc_update_ldst_privileges,
output dcache_line_t cc_update_ldst_store_value,
output cc_command_t cc_update_ldst_command,
output logic cc_wakeup,
output thread_id_t cc_wakeup_thread_id,
input dcache_line_t ldst_snoop_data,
input dcache_privileges_t [`DCACHE_WAY - 1 : 0] ldst_snoop_privileges,
input dcache_tag_t [`DCACHE_WAY - 1 : 0] ldst_snoop_tag,
input logic ldst_io_valid,
input thread_id_t ldst_io_thread,
input logic [$bits(io_operation_t)-1 : 0] ldst_io_operation,
input address_t ldst_io_address,
input register_t ldst_io_data,
output logic io_intf_resp_valid,
output thread_id_t io_intf_wakeup_thread,
output register_t io_intf_resp_data,
input logic ldst_io_resp_consumed,
output logic io_intf_available,
~

To/from Memory controller

The CC talks to the main memory through a valid/available handshake. The CC can issue a request only when the memory availability bit is asserted:

// Memory availability bit
input logic m2n_request_available,

Dually, the memory can forward a response to the CC only when the CC availability bit is asserted:

// CC availability bit
output logic mc_avail_o,

When the memory is available, an issued request is loaded on the following signals:

// To Memory controller
output address_t n2m_request_address,
output dcache_line_t n2m_request_data,
output dcache_store_mask_t n2m_request_dirty_mask,
output logic n2m_request_read,
output logic n2m_request_write,

The n2m_request_address and n2m_request_data signals contain, respectively, the requested address and the outgoing data (used in case of writes), while the n2m_request_read and n2m_request_write control signals select the operation to perform on main memory. Finally, n2m_request_dirty_mask holds a bitmask of the dirty words in the data signal.
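One way a memory-side consumer could use n2m_request_dirty_mask is to overwrite only the dirty words of a line. The Python sketch below assumes that bit i of the mask refers to word i of the line; the function name `apply_masked_write` is hypothetical, and the actual merge policy belongs to the memory controller, not to the CC.

```python
def apply_masked_write(mem_line, data_line, dirty_mask):
    """Merge a written line into memory word by word (illustrative sketch).

    Assumption: bit i of dirty_mask set means word i of data_line is dirty
    and overwrites memory; clean words keep the current memory contents.
    """
    if len(mem_line) != len(data_line):
        raise ValueError("lines must have the same number of words")
    return [
        new if (dirty_mask >> i) & 1 else old
        for i, (old, new) in enumerate(zip(mem_line, data_line))
    ]


mem = [0xA0, 0xA1, 0xA2, 0xA3]
data = [0xB0, 0xB1, 0xB2, 0xB3]
merged = apply_masked_write(mem, data, 0b0101)   # words 0 and 2 are dirty
```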

When the CC is available, a response from the main memory is forwarded on the following signals:

input logic m2n_response_valid,
input address_t m2n_response_address,
input dcache_line_t m2n_response_data,
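Both directions follow the same rule: a transfer happens in a cycle where the producer has something valid and the consumer is available. The Python sketch below lists the cycles in which transfers occur for a hypothetical trace. It assumes that an asserted n2m_request_read or n2m_request_write acts as the request valid; the function name `handshake_trace` is hypothetical.

```python
def handshake_trace(valid, available):
    """Return the cycles in which a valid/available transfer takes place.

    valid[i]:     the producer has data in cycle i (a pending CC request,
                  or m2n_response_valid on the response path)
    available[i]: the consumer accepts in cycle i (m2n_request_available,
                  or mc_avail_o on the response path)
    """
    return [i for i, (v, a) in enumerate(zip(valid, available)) if v and a]


# The CC holds a request from cycle 1; memory becomes available in cycle 3.
req_valid = [False, True, True, True, False]
mem_avail = [True, False, False, True, True]
fired = handshake_trace(req_valid, mem_avail)   # [3]
```

The request is simply held while the memory is busy, which is why the CC can stay in SEND REQ until the availability bit rises.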

To/from Thread controller

On the core side, the TC handles instruction cache requests and independently manages threads and the merging of cache misses. Its interface with the CC uses, as usual, a valid/available handshake: when the CC is ready to accept an instruction cache miss, it asserts the corresponding availability bit:

output logic mem_instr_request_available,

Incoming instruction requests are presented on the following interface signals:

input logic tc_instr_request_valid,
input address_t tc_instr_request_address

Since the TC is always ready, its interface needs no availability signal. When a memory response arrives for a pending instruction cache miss, it is forwarded directly on the following interface signals:

output icache_lane_t mem_instr_request_data_in,
output logic mem_instr_request_valid,

Implementation

This section describes how the CC is implemented.

FSM

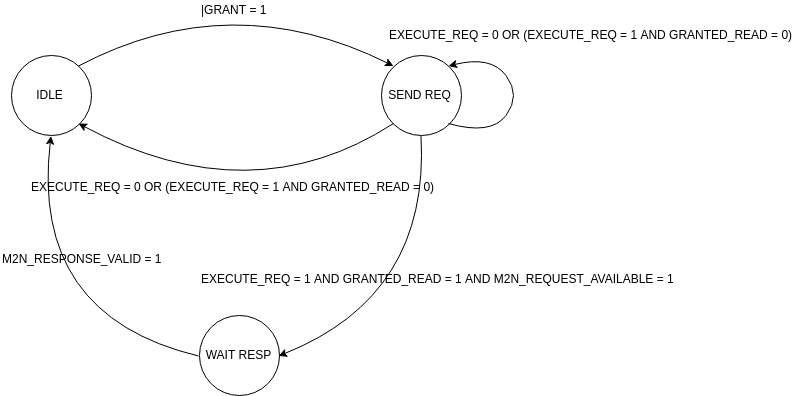

The control unit is implemented with a finite state machine (FSM) with three states:

- idle

- send request

- wait response

The following figure depicts the graph flow of the control FSM.

At the HDL level, the FSM is implemented through a sequential process, which handles the state evolution, and a combinatorial process, which implements the valid/available interface to the main memory:

~
output address_t n2m_request_address,
output dcache_line_t n2m_request_data,
output dcache_store_mask_t n2m_request_dirty_mask,
output logic n2m_request_read,
output logic n2m_request_write,
output logic mc_avail_o,
input logic m2n_request_available,
input logic m2n_response_valid,
input address_t m2n_response_address,
input dcache_line_t m2n_response_data,
~

Sequential section

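On reset, the FSM returns to IDLE and the control signals used to update the LDST, together with those controlling a memory transaction, are cleared. Outside reset, most of these signals are also cleared at the beginning of every clock cycle, so they stay asserted only in the cycles in which a state explicitly drives them, as shown in the following code: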

~
always_ff @( posedge clk, posedge reset ) begin
    if ( reset ) begin
        state <= IDLE;
        cc_update_ldst_valid <= 1'b0;
        cc_wakeup <= 1'b0;
        n2m_request_read <= 1'b0;
        n2m_request_write <= 1'b0;
        granted_read <= 1'b0;
        granted_write <= 1'b0;
        mem_instr_request_valid <= 1'b0;
    end else begin
        // Default values, overridden by the per-state logic that follows
        cc_update_ldst_valid <= 1'b0;
        cc_wakeup <= 1'b0;
        n2m_request_read <= 1'b0;
        n2m_request_write <= 1'b0;
        mem_instr_request_valid <= 1'b0;
        // ... per-state logic (IDLE, SEND REQ, WAIT RESP)
~

In the IDLE state, a pending request is selected by a nested if-then-else structure that checks which bit of the grants mask is asserted. Once a request is selected, the thread ID, the cache line address and the control signals used by the LDST to execute an atomic operation are configured. Examples of such signals are:

- granted_read/granted_write, used to track the type of request;
- granted_need_snoop, used to track whether the request needs a snoop operation;
- granted_wakeup, used to track whether the request has to wake up the thread;
- granted_need_hit_miss, used to track whether the FSM needs a hit or a miss to execute the request.

The code snippet below reports all the actions taken by the control logic when a LOAD request is scheduled. All other cases share the same structure.

~
if (grants[LOAD]) begin
    granted_read <= 1'b1;
    granted_write <= 1'b0;
    granted_need_snoop <= 1'b1;
    granted_need_hit_miss <= 1'b0;
    granted_wakeup <= 1'b1;
    granted_thread_id <= ci_load_request_thread_id;
    granted_address <= ci_load_request_address;
~

The SEND REQ state implements the logic that lets the CC send a request to the memory. If the request is executable and the memory is available, the CC issues it by driving the address and the kind of request (READ or WRITE) on the memory interface. For READ requests (LOAD, STORE and INSTR), the CC then has to wait for the memory response. Dually, for WRITE requests (FLUSH, DINV and REPLACEMENT), the CC returns to the IDLE state; in the case of a DINV request, it also invalidates the line in the L1 by executing the following lines of code. When the memory is not available, the CC waits until the memory availability bit is asserted. When the request is not executable, the CC jumps back to the IDLE state.

~
if (grants_reg[DINV]) begin
    cc_update_ldst_valid <= 1'b1;
    cc_update_ldst_way <= way_matched_reg;
    cc_update_ldst_address <= granted_address;
    cc_update_ldst_privileges <= dcache_privileges_t'(0);
    cc_update_ldst_command <= CC_UPDATE_INFO_DATA;
end
~

In these lines of code, the CC sends the LDST a cache line invalidation request that clears the line privileges. From then on, no thread can read or write that cache line until the next LOAD/STORE request.
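The effect of a CC update on the LDST bookkeeping can be sketched in Python. This is an illustrative model under assumptions: `LineState`, `cc_update` and `readable` are hypothetical names, privileges are modeled as two booleans (the bit layout of dcache_privileges_t is not described here), and the cache line data is omitted.

```python
from dataclasses import dataclass


@dataclass
class LineState:
    tag: int = 0
    can_read: bool = False
    can_write: bool = False


def cc_update(cache_set, way, tag, can_read, can_write):
    """Hypothetical model of one cc_update_ldst_* transaction on a set.

    The CC names the way, the address tag and the new privileges;
    the LDST overwrites that entry (data is not modeled).
    """
    cache_set[way] = LineState(tag, can_read, can_write)


def readable(cache_set, tag):
    # A line can be read if some way holds the tag with can_read set.
    return any(line.tag == tag and line.can_read for line in cache_set)


cache_set = [LineState() for _ in range(4)]
cc_update(cache_set, 2, 0x3F, True, True)     # refill: privileges 2'b11
before = readable(cache_set, 0x3F)
cc_update(cache_set, 2, 0x3F, False, False)   # DINV: privileges cleared
after = readable(cache_set, 0x3F)
```

After the DINV update the tag is still stored, but with no privileges every access to the line is treated as a miss.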

In the WAIT RESP state, the CC waits for a response from the memory; once the response is valid, the CC returns to the IDLE state. If the issued request was for the instruction cache, the CC returns the memory contents to the TC. If it was for the data cache, the CC forwards the memory response to the LDST unit and sets the privileges accordingly, as shown below:

~
end else if (grants_reg[LOAD] | grants_reg[STORE]) begin
    cc_update_ldst_valid <= 1'b1;
    cc_update_ldst_way <= counter_way[granted_address.index];
    cc_update_ldst_address <= granted_address;
    cc_update_ldst_privileges <= dcache_privileges_t'(2'b11);
    cc_update_ldst_store_value <= m2n_response_data_swap;
    cc_update_ldst_command <= ways_full ? CC_REPLACEMENT : CC_UPDATE_INFO_DATA;
~

The cc_update_ldst_command signal tells the LDST how to handle the response. If all the L1 ways of the given set are full (ways_full signal asserted), the CC sends a CC_REPLACEMENT request on that set, and the cc_update_ldst_way signal indicates the way to replace. In this case the LDST starts a replacement operation, storing the response in the given location and evicting the old data, which is finally forwarded to the CI through the eviction FIFO. On the other hand, if the L1 cache has a free way in the given set (ways_full signal deasserted), the CC sends a CC_UPDATE_INFO_DATA request. In this case the LDST stores the memory response in the given location and updates its privileges, without any replacement.
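The choice between the two commands can be mirrored in a short Python sketch. It assumes, as in the snoop logic of the CC, that a way is busy when it holds either privilege and that ways_full means every way of the set is busy; `refill_command` and the sample privilege lists are hypothetical.

```python
def refill_command(snoop_privileges, counter_way, set_index):
    """Pick the way and command for a LOAD/STORE refill (sketch).

    snoop_privileges: one (can_read, can_write) pair per way of the set.
    """
    # A way is busy when it holds any privilege; the set is full if all are.
    ways_full = all(can_read or can_write for can_read, can_write in snoop_privileges)
    way = counter_way[set_index]          # way chosen by the per-set counter
    command = "CC_REPLACEMENT" if ways_full else "CC_UPDATE_INFO_DATA"
    return way, command


counters = [0, 2, 0, 0]   # hypothetical per-set way counters
full_set = [(True, True), (True, False), (False, True), (True, True)]
one_free = [(True, True), (False, False), (True, False), (True, True)]
```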

In short, the CC returns to the LDST the way in which the request has to be executed, the READ and WRITE privileges, and the data from memory to be written if the request is a STORE. Since both privileges are granted (2'b11), every thread can then read and write the requested cache line.
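The state evolution described in this section can be condensed into a Python transition function. It is a behavioral sketch, not the RTL: `State`, `next_state`, `READ_REQUESTS` and `WRITE_REQUESTS` are hypothetical names, the move from IDLE to SEND REQ is assumed to happen as soon as a request is granted, and IOM requests are not modeled.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    SEND_REQ = auto()
    WAIT_RESP = auto()


# Request kinds as classified in the SEND REQ description.
READ_REQUESTS = {"LOAD", "STORE", "INSTR"}
WRITE_REQUESTS = {"FLUSH", "DINV", "REPLACEMENT"}


def next_state(state, granted=None, execute_req=False,
               mem_available=False, response_valid=False):
    """Return the next state of the control FSM (behavioral sketch)."""
    if state is State.IDLE:
        # Assumption: a granted request moves the FSM to SEND_REQ.
        return State.SEND_REQ if granted is not None else State.IDLE
    if state is State.SEND_REQ:
        if not execute_req:
            return State.IDLE          # request no longer needs memory
        if not mem_available:
            return State.SEND_REQ      # wait for m2n_request_available
        if granted in READ_REQUESTS:
            return State.WAIT_RESP     # READ: wait for the memory response
        if granted in WRITE_REQUESTS:
            return State.IDLE          # WRITE: done once issued
        raise ValueError(f"request kind {granted!r} not modeled")
    # WAIT_RESP: leave only when m2n_response_valid is high.
    return State.IDLE if response_valid else State.WAIT_RESP


# A LOAD miss that waits one cycle for the memory to become available.
trace = [State.IDLE]
for inputs in [dict(granted="LOAD"),
               dict(granted="LOAD", execute_req=True),
               dict(granted="LOAD", execute_req=True, mem_available=True),
               dict(response_valid=True)]:
    trace.append(next_state(trace[-1], **inputs))
```

Serializing everything through these three states is what allows the CC to work without an MSHR: at most one transaction is ever outstanding.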

Combinatorial section

In the combinatorial process, the signals used to dequeue requests from the CI queues and the other control signals get a default value (zero, or don't-care for cc_snoop_tag_set), as shown in the following code:

~
always_comb begin
    cc_snoop_tag_valid <= 1'b0;
    cc_snoop_tag_set <= dcache_set_t'( 1'bX );
    dequeue_instr <= 1'b0;
    dequeue_iom <= 1'b0;
    iom_resp_enqueue <= 1'b0;
    cc_dequeue_store_request <= 1'b0;
    cc_dequeue_load_request <= 1'b0;
    cc_dequeue_replacement_request <= 1'b0;
    cc_dequeue_flush_request <= 1'b0;
    cc_dequeue_dinv_request <= 1'b0;
    update_counter_way <= 1'b0;
~

The update_counter_way signal advances the per-set way counter, which selects the next cache way to use for a given set, as shown below:

~
always_ff @ ( posedge clk, posedge reset )
    if ( reset )
        counter_way <= '{default : '0};
    else if ( update_counter_way )
        counter_way[granted_address.index] <= counter_way[granted_address.index] + 1;
~
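counter_way thus acts as a per-set round-robin pointer. The Python sketch below models it under the assumption that each entry is clog2(DCACHE_WAY) bits wide (with DCACHE_WAY a power of two), so the hardware `+ 1` wraps back to way 0. WayCounter and its methods are illustrative names only.

```python
from typing import List


class WayCounter:
    """Behavioral model of counter_way: one round-robin pointer per set."""

    def __init__(self, num_sets: int, num_ways: int) -> None:
        self.num_ways = num_ways
        self.counter_way: List[int] = [0] * num_sets  # reset: '{default : '0}

    def reset(self) -> None:
        self.counter_way = [0] * len(self.counter_way)

    def update(self, set_index: int) -> None:
        """Called when update_counter_way is asserted for granted_address.index."""
        # The modulo models the wrap-around of a clog2(num_ways)-bit counter.
        self.counter_way[set_index] = (self.counter_way[set_index] + 1) % self.num_ways


wc = WayCounter(num_sets=4, num_ways=4)
for _ in range(5):
    wc.update(2)
# Only set 2 advanced: after five updates it has wrapped around to way 1.
```

A round-robin pointer needs no per-line usage history, which keeps this lightweight controller simple at the cost of not tracking recency.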

In the IDLE state, the FSM selects a request by checking which bit of the grants signal is asserted, issues a snoop request to the LDST by asserting the cc_snoop_tag_valid signal, and loads cc_snoop_tag_set with the set to snoop in the L1:

~
IDLE: begin
    if ( grants[LOAD] ) begin
        cc_snoop_tag_valid <= 1'b1;
        cc_snoop_tag_set   <= ci_load_request_address.index;
        ...
~

In the SEND_REQ state, if the memory interface is available, the FSM dequeues the scheduled request by asserting the corresponding dequeue bit on the interface with the CI. The following code shows the dequeue logic when a replacement is scheduled:

~
SEND_REQ: begin
    if ( execute_req ) begin
        if ( m2n_request_available ) begin
            if ( grants_reg[REPLACEMENT] ) begin
                cc_dequeue_replacement_request <= 1'b1;
                ...
~

The execute_req signal checks whether a pending request in the CI still needs a memory transaction. Due to the nature of the LDST and its multithreaded organization, a request might already have been satisfied by previous transactions, so execute_req double-checks the miss condition. If no memory transaction is needed, the control logic dequeues the pending request without issuing any memory transaction and, if needed, wakes up the stalled threads (granted_wakeup signal high).
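The decision taken in SEND_REQ can be sketched as a small Python function. This is a hedged model: send_req_step, SendReqActions and the needs_response flag are hypothetical, and the split between requests dequeued here and those dequeued later in WAIT_RESP (LOAD, STORE, I/O read, INSTR) is a reading of the code fragments on this page, not a verified description of the RTL.

```python
from dataclasses import dataclass


@dataclass
class SendReqActions:
    dequeue: bool       # assert the CI dequeue bit in this cycle
    issue_memory: bool  # send a transaction to memory
    wakeup: bool        # wake up threads stalled on this request


def send_req_step(execute_req: bool, mem_available: bool,
                  needs_response: bool, granted_wakeup: bool) -> SendReqActions:
    """Hypothetical model of one SEND_REQ cycle."""
    if not execute_req:
        # Already satisfied by a previous transaction: dequeue it without
        # touching memory and wake the stalled threads if needed.
        return SendReqActions(dequeue=True, issue_memory=False, wakeup=granted_wakeup)
    if not mem_available:
        # Memory interface busy: keep the request pending.
        return SendReqActions(dequeue=False, issue_memory=False, wakeup=False)
    # Issue the transaction. Requests expecting data back (assumed: LOAD,
    # STORE, I/O read, INSTR) stay queued until WAIT_RESP.
    return SendReqActions(dequeue=not needs_response, issue_memory=True, wakeup=False)
```

For example, a replacement with memory available (`send_req_step(True, True, False, False)`) is dequeued and issued in the same cycle, whereas a request already satisfied elsewhere never reaches memory.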

In the WAIT_RESP state, once a response from memory is valid, the FSM asserts the dequeue signal corresponding to the LOAD, STORE, I/O read or INSTR request:

~
WAIT_RESP: begin
    if ( m2n_response_valid ) begin
        if ( grants_reg[INSTR] ) begin
            dequeue_instr <= 1'b1;
            ...
~

IO, Instruction and Core Interface requests buffering

As said above, the CI and the CC share a valid/availability handshake interface for each request type. All these valid bits are collected into a request vector. An arbiter (described [here] and configured in fixed-priority mode) schedules the requests according to a fixed-priority policy. The output of the arbiter is a one-hot mask, namely grants.
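A fixed-priority arbiter simply grants the highest-priority pending request. The Python sketch below assumes, purely for illustration, that bit 0 of the request vector has the highest priority; the actual ordering of the request types in the NaplesPU arbiter is not given here. It uses the classic `x & -x` identity to isolate the lowest set bit, which directly yields a one-hot mask like grants.

```python
def fixed_priority_arbiter(requests: int) -> int:
    """Return a one-hot grant for the highest-priority pending request.

    Assumption: bit 0 has the highest priority.
    """
    if requests < 0:
        raise ValueError("requests must be a non-negative bit vector")
    # requests & -requests isolates the least significant set bit
    # (two's-complement identity); it yields 0 when nothing is pending.
    return requests & -requests


def is_one_hot(mask: int) -> bool:
    """True if exactly one bit of mask is set."""
    return mask != 0 and mask & (mask - 1) == 0
```

A fixed priority is cheap in hardware but can starve low-priority request types under sustained load; this is acceptable here because the CC serializes one transaction at a time.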

IO and INSTR requests are managed in different ways by the control logic.

IO Map request

Two FIFOs are instantiated for IO requests, one for issued requests and the other for incoming responses, each with a default depth of 8:

- IO FIFO REQUEST

- IO FIFO RESPONSE

The format of the queues is defined by the typedef in the following lines of code:

~
typedef struct packed {
    thread_id_t      thread;
    io_operation_t   operation;
    dcache_address_t address;
    register_t       data;
} io_fifo_t;
~

Requests are buffered into the IO FIFO REQUEST queue. When IO FIFO REQUEST is full, the CC refuses further IO Map requests by deasserting the io_intf_available signal on the interface. A request is dequeued from IO FIFO REQUEST once the corresponding IO response has arrived, and the response is enqueued into the IO FIFO RESPONSE queue. The response is then forwarded to the LDST; when ready, the LDST unit consumes it by asserting the ldst_io_resp_consumed signal for one clock cycle, and the response is dequeued from IO FIFO RESPONSE.
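The IO path can be sketched in Python as two bounded queues. IoFifoEntry mirrors the fields of io_fifo_t (widths are not modeled); IoRequestPath, its method names and the "READ" operation string are hypothetical, and the model assumes IO responses return in request order.

```python
from collections import deque
from dataclasses import dataclass, replace
from typing import Deque


@dataclass(frozen=True)
class IoFifoEntry:
    """Python mirror of io_fifo_t."""
    thread: int
    operation: str
    address: int
    data: int = 0


class IoRequestPath:
    """Behavioral model of IO FIFO REQUEST / IO FIFO RESPONSE (default depth 8)."""

    def __init__(self, depth: int = 8) -> None:
        self.depth = depth
        self.request_fifo: Deque[IoFifoEntry] = deque()
        self.response_fifo: Deque[IoFifoEntry] = deque()

    @property
    def io_intf_available(self) -> bool:
        # Deasserted when IO FIFO REQUEST is full.
        return len(self.request_fifo) < self.depth

    def enqueue_request(self, entry: IoFifoEntry) -> bool:
        if not self.io_intf_available:
            return False  # request refused
        self.request_fifo.append(entry)
        return True

    def response_arrived(self, data: int) -> None:
        # Assumption: responses return in request order.
        if len(self.response_fifo) >= self.depth:
            raise OverflowError("IO FIFO RESPONSE full (back-pressure not modeled)")
        request = self.request_fifo.popleft()
        self.response_fifo.append(replace(request, data=data))

    def ldst_io_resp_consumed(self) -> IoFifoEntry:
        # One-cycle pulse from the LDST: pop the head of IO FIFO RESPONSE.
        return self.response_fifo.popleft()


path = IoRequestPath()
for t in range(8):
    path.enqueue_request(IoFifoEntry(thread=t, operation="READ", address=0x100 + 4 * t))
# IO FIFO REQUEST is now full, so path.io_intf_available is False.
```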

Instruction miss request

INSTR requests are buffered into the INSTR FIFO queue of size 2, which tracks the addresses of pending instruction cache requests. When an instruction request is satisfied, the corresponding memory response is forwarded back to the TC, which handles thread wake-up internally:

~
WAIT_RESP: begin
    if ( m2n_response_valid ) begin
        state <= IDLE;
        // handle req
        if ( grants_reg[INSTR] ) begin
            mem_instr_request_data_in <= m2n_response_data_swap;
            mem_instr_request_valid   <= 1'b1;
            ...
~

The m2n_response_data_swap register contains the data received from memory, byte-swapped by the optional endianness swapper when needed (see Memory swap below).

Snoop managing

After a snoop request, the snoop management logic checks whether a cache hit occurs, through the following lines of code:

~
generate
    for ( dcache_way = 0; dcache_way < `DCACHE_WAY; dcache_way++ ) begin
        assign way_busy_oh[dcache_way]    = ( ldst_snoop_privileges[dcache_way].can_read | ldst_snoop_privileges[dcache_way].can_write );
        assign way_matched_oh[dcache_way] = ( ldst_snoop_tag[dcache_way] == granted_address.tag ) & way_busy_oh[dcache_way];
    end
endgenerate

assign snoop_hit = |way_matched_oh;
assign ways_full = &way_busy_oh;
~

A way matches when it holds a valid line (read or write privilege set, as tracked by way_busy_oh) and its tag in the ldst_snoop_tag array is equal to the tag of the requested address.

snoop_hit is asserted if there is a cache hit, while ways_full is asserted if all ways are busy.

The way_matched_oh array holds the matched way in one-hot encoding. A one-hot to binary converter, described [here], turns it into a way index, which is loaded into the way_matched_idx register.
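The same hit/full evaluation can be sketched in Python for a single snooped set. The function name and argument layout are hypothetical: privileges[w] is a (can_read, can_write) pair and tags[w] is the tag of way w. The model assumes at most one way matches, so the one-hot to index step reduces to finding the position of the single set bit.

```python
from typing import Optional, Sequence, Tuple


def snoop(tags: Sequence[int], privileges: Sequence[Tuple[bool, bool]],
          req_tag: int) -> Tuple[bool, bool, Optional[int]]:
    """Return (snoop_hit, ways_full, way_matched_idx) for one snooped set."""
    way_busy_oh = 0
    way_matched_oh = 0
    for w, ((can_read, can_write), tag) in enumerate(zip(privileges, tags)):
        busy = can_read or can_write
        if busy:
            way_busy_oh |= 1 << w
        if busy and tag == req_tag:
            way_matched_oh |= 1 << w
    snoop_hit = way_matched_oh != 0                      # |way_matched_oh
    ways_full = way_busy_oh == (1 << len(tags)) - 1      # &way_busy_oh
    # One-hot to index: position of the single set bit.
    idx = way_matched_oh.bit_length() - 1 if snoop_hit else None
    return snoop_hit, ways_full, idx


tags = [0x1A, 0x2B, 0x3C, 0x4D]
privileges = [(True, True), (False, False), (True, False), (True, True)]
hit, full, idx = snoop(tags, privileges, 0x3C)
# Way 2 is valid and its tag matches; way 1 is free, so the set is not full.
```

Note that the tag of way 1 is ignored even though it equals 0x2B: without any privilege the way is considered empty, exactly as way_busy_oh gates way_matched_oh in the RTL.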

Some requests, such as LOAD/STORE, FLUSH and DINV, need to snoop the L1 before being scheduled: as explained above, the CC has to evaluate whether the line produces a cache hit before issuing a memory transaction.

Memory swap

NaplesPU is a parameterizable little-endian manycore, so it might be embedded into architectures whose memory uses a different endianness. The CC provides optional endianness-swapping logic that converts data from little-endian to big-endian and vice versa. It is controlled by the ENDSWAP parameter of the CC interface, which is set when the CC is instantiated:

~
module sc_cache_controller #(
    parameter ENDSWAP = 0
) (
    input clk,
    input reset,
~

By default, ENDSWAP is 0, so no byte swap is performed.
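The swap itself is a byte-order reversal. The Python sketch below is a hypothetical model that assumes the swap is applied independently to each 32-bit word of a memory block; the actual granularity used by the RTL is not stated on this page.

```python
def endswap(block: bytes, enable: bool = True, word_bytes: int = 4) -> bytes:
    """Reverse the byte order inside every word_bytes-wide word of block."""
    if not enable:                      # ENDSWAP = 0: data passes through
        return block
    if len(block) % word_bytes:
        raise ValueError("block length must be a multiple of the word size")
    swapped = bytearray()
    for offset in range(0, len(block), word_bytes):
        swapped += block[offset:offset + word_bytes][::-1]
    return bytes(swapped)


raw = bytes([0x01, 0x02, 0x03, 0x04, 0xAA, 0xBB, 0xCC, 0xDD])
# The first word reads 0x01020304 in big-endian order; after the swap,
# a little-endian reader sees the same value 0x01020304.
```

Because the swap is its own inverse, the same logic serves both directions (memory to core and core to memory).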