Difference between revisions of "Single Core Cache Controller"

Revision as of 14:18, 10 May 2019

This page describes the L1 cache controller (CC) allocated in the nu+ core and directly connected to the LDST unit, Core Interface (CI), Thread Controller (TC), Memory Controller (MeC) and Instruction Cache (IC). The Single Core CC handles requests from the core (load/store miss, instruction miss, flush, evict, data invalidate) and serializes them. Pending requests are scheduled with a fixed priority.

TODO: possibly add a diagram of all the components connected to the CC

This lightweight CC handles one transaction at a time, hence there is no need for a Miss Status Holding Register (MSHR).

Interface

This section shows the interface of the CC to/from all other units.

To/from Core interface

The CI buffers memory requests from the LDST unit, providing a separate queue for each type of request. This interface is forwarded directly to the CC, which schedules one request at a time. Thanks to this buffering, the service rate of the CC is decoupled from the service rate of the LDST unit [[MIRKO] I did not understand this sentence]. In fact, the cache controller executes one request at a time, although the LDST unit might issue multiple requests concurrently, up to the number of threads currently allocated in the core. These requests are scheduled by a fixed-priority arbiter, which selects a pending request and dequeues it once the CC forwards the corresponding memory transaction to the memory.

The following lines of code define the interface to/from the Core Interface:

~
output logic cc_dequeue_store_request,
~
input logic ci_store_request_valid,
input thread_id_t ci_store_request_thread_id,
input dcache_address_t ci_store_request_address,
input logic ci_store_request_coherent,
~
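As an illustration of the fixed-priority arbitration mentioned above, the following is a minimal sketch and not the arbiter actually instantiated in the CC. The module name, the NUM_REQ parameter and the choice of bit 0 as the highest-priority request are assumptions made for this example only.

```systemverilog
// Hypothetical sketch of a fixed-priority arbiter (not the CC's own arbiter).
// Assumption: bit 0 of `requests` has the highest priority.
module fixed_priority_arbiter_sketch #(
    parameter int NUM_REQ = 4                 // assumed number of request queues
) (
    input  logic [NUM_REQ-1:0] requests,      // one valid bit per pending queue
    output logic [NUM_REQ-1:0] grants         // one-hot mask, or all zeros if idle
);
    // x & (-x) isolates the lowest set bit, i.e. the highest-priority request.
    assign grants = requests & (~requests + 1'b1);
endmodule
```

With requests equal to 4'b0110, the sketch grants 4'b0010: the lower-indexed pending request wins and the other one stays queued until it becomes the lowest set bit.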

To/from LDST

As described in the load/store unit page, the LDST unit does not store any information about the coherence protocol in use, although it keeps track of the privileges on all cache addresses. Each stored cache line has two privileges, can read and can write, which are used to determine cache hit/miss and are updated by the Cache Controller.

Memory requests from the LDST unit:

- LOAD

- STORE

- REPLACEMENT

- FLUSH

- DINV

- INSTR

- IOM

All the operations listed above are handled by the control FSM in the CC. On the coherence side, as said before, the LDST unit has an abstract view of the implemented protocol: the CC grants it READ or WRITE privileges, while the actual coherence protocol is transparent to the LDST unit.

The following lines of code define the interface to/from the load/store unit:

~
output logic cc_update_ldst_valid,
output dcache_way_idx_t cc_update_ldst_way,
output dcache_address_t cc_update_ldst_address,
output dcache_privileges_t cc_update_ldst_privileges,
output dcache_line_t cc_update_ldst_store_value,
output cc_command_t cc_update_ldst_command,
output logic cc_wakeup,
output thread_id_t cc_wakeup_thread_id,
input dcache_line_t ldst_snoop_data,
input dcache_privileges_t [`DCACHE_WAY - 1 : 0] ldst_snoop_privileges,
input dcache_tag_t [`DCACHE_WAY - 1 : 0] ldst_snoop_tag,
input logic ldst_io_valid,
input thread_id_t ldst_io_thread,
input logic [$bits(io_operation_t)-1 : 0] ldst_io_operation,
input address_t ldst_io_address,
input register_t ldst_io_data,
output logic io_intf_resp_valid,
output thread_id_t io_intf_wakeup_thread,
output register_t io_intf_resp_data,
input logic ldst_io_resp_consumed,
output logic io_intf_available,
~

To/from Memory controller

TODO

To/from Instruction cache

TODO

To/from Thread controller

TODO

Implementation

This section describes how the CC is implemented.

FSM

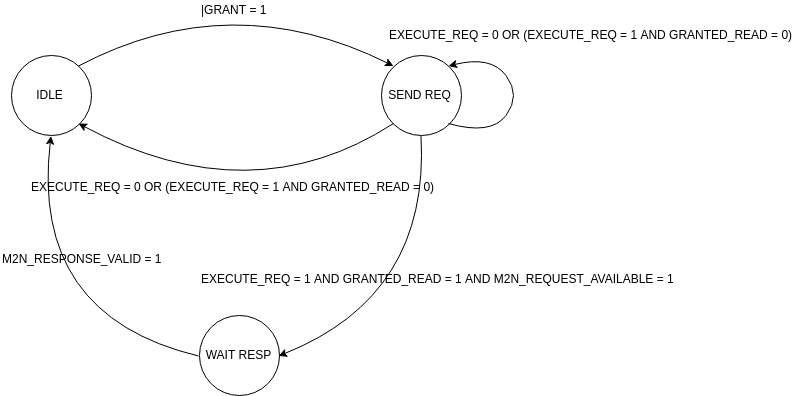

The control unit is implemented with a finite state machine (FSM) with three states:

- idle

- send request

- wait response

The following figure depicts the graph flow of the control FSM.

At HDL level, the FSM is implemented through a sequential process, which handles the state evolution, and a combinational process, shaped to implement the valid/available interface to the main memory:

~
output address_t n2m_request_address,
output dcache_line_t n2m_request_data,
output dcache_store_mask_t n2m_request_dirty_mask,
output logic n2m_request_read,
output logic n2m_request_write,
output logic mc_avail_o,
input logic m2n_request_available,
input logic m2n_response_valid,
input address_t m2n_response_address,
input dcache_line_t m2n_response_data,
~

Sequential section

First of all, some signals are initialized. [[MIRKO] rephrase: what does "first of all" mean? Do you mean at reset?]

When the FSM is reset, the control signals used to update the LDST unit and the signals used to control a memory transaction are set to zero.

In the IDLE state, a pending request is selected by a nested if-then-else structure that checks which bit of the grant mask is asserted. Once a request is selected, the thread ID, the cache line address and the control signals used by the LDST unit to execute an atomic operation are configured. Examples of such signals are:

- granted_read/granted_write, which track the type of request;

- granted_need_snoop, which tracks whether the request needs a snoop operation;

- granted_wakeup, which tracks whether the request has to wake up the thread;

- granted_need_hit_miss, which tracks whether the FSM needs a snoop hit or a snoop miss to execute the request.

The code snippet below reports all the actions taken by the control logic when a LOAD request is scheduled. All other cases share the same structure.

~
if (grants[LOAD]) begin
    granted_read <= 1'b1;
    granted_write <= 1'b0;
    granted_need_snoop <= 1'b1;
    granted_need_hit_miss <= 1'b0;
    granted_wakeup <= 1'b1;
    granted_thread_id <= ci_load_request_thread_id;
    granted_address <= ci_load_request_address;
~

The SEND REQ state implements the logic that lets the CC send a request to the memory. If the request is executable and the memory is available, the CC sends the request by loading its address and kind (READ or WRITE) onto the memory interface. If the request is a READ (LOAD, STORE and INSTR requests), the CC then waits for a response from the memory. Conversely, if it is a WRITE (FLUSH, DINV and REPLACEMENT requests), the CC returns to the IDLE state and, if the granted request is a DINV, it updates the LDST unit by executing the following lines of code. When the memory is not available, the CC waits until the availability bit of the memory is asserted. When the request is not executable, the CC jumps back to the IDLE state.

~
if (grants_reg[DINV]) begin
    cc_update_ldst_valid <= 1'b1;
    cc_update_ldst_way <= way_matched_reg;
    cc_update_ldst_address <= granted_address;
    cc_update_ldst_privileges <= dcache_privileges_t'(0);
    cc_update_ldst_command <= CC_UPDATE_INFO_DATA;
end
~

With these lines of code, the CC sends the LDST unit the response to the cache line invalidation request, with no privileges set. As a result, no thread can read or write that cache line until the next LOAD/STORE request.

In the WAIT RESP state, the CC waits for a response from the memory. When the memory response is valid, the CC returns to the IDLE state. If the request is an INSTR, the CC returns the memory contents to the TC. Otherwise, if the request is a LOAD or a STORE, the CC updates the LDST unit by executing the lines of code below:

~
end else if (grants_reg[LOAD] | grants_reg[STORE]) begin
    cc_update_ldst_valid <= 1'b1;
    cc_update_ldst_way <= counter_way[granted_address.index];
    cc_update_ldst_address <= granted_address;
    cc_update_ldst_privileges <= dcache_privileges_t'(2'b11);
    cc_update_ldst_store_value <= m2n_response_data_swap;
    cc_update_ldst_command <= ways_full ? CC_REPLACEMENT : CC_UPDATE_INFO_DATA;
~

The CC returns to the LDST unit the way that the request has to use, both READ and WRITE privileges, and the data fetched from memory, which has to be written when the request is a STORE. From then on, all threads can read and write the requested cache line.
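The state transitions described in this section can be summarized by the following skeleton. It is a sketch under stated assumptions, not the CC source: it models only the state register, the request_granted input and the state_o output are hypothetical names introduced here, and all the updates towards the LDST unit, the TC and the memory interface are omitted.

```systemverilog
// Hypothetical skeleton of the three-state control FSM (transitions only).
module cc_fsm_sketch (
    input  logic       clk,
    input  logic       reset,
    input  logic       request_granted,        // assumed: a bit of the grant mask is set
    input  logic       execute_req,            // the granted request is executable
    input  logic       granted_read,           // READ-type request: a response is expected
    input  logic       m2n_request_available,  // memory can accept a request
    input  logic       m2n_response_valid,     // memory response is ready
    output logic [1:0] state_o                 // assumed debug output: 0 IDLE, 1 SEND_REQ, 2 WAIT_RESP
);
    typedef enum logic [1:0] { IDLE, SEND_REQ, WAIT_RESP } state_t;
    state_t state;

    always_ff @(posedge clk, posedge reset) begin
        if (reset) begin
            state <= IDLE;
        end else begin
            case (state)
                IDLE: begin
                    if (request_granted) state <= SEND_REQ;
                end
                SEND_REQ: begin
                    if (!execute_req) begin
                        state <= IDLE;              // not executable: back to IDLE
                    end else if (m2n_request_available) begin
                        if (granted_read) state <= WAIT_RESP;  // READ: wait for data
                        else              state <= IDLE;       // WRITE: done
                    end
                    // executable but memory busy: stay in SEND_REQ
                end
                WAIT_RESP: begin
                    if (m2n_response_valid) state <= IDLE;
                end
                default: state <= IDLE;
            endcase
        end
    end

    assign state_o = state;
endmodule
```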

Combinatorial section

[[MIRKO] general guideline: when describing the mechanisms, also mention the signals]. First of all, some signals are initialized [[MIRKO] same remark as above].

In the IDLE state, the cache address (tag and set) that a WRITE request has to operate on is defined. [[MIRKO] is it not defined for a READ?].

In the SEND REQ state, there is the logic that dequeues requests. If the request is executable and the memory is available, the dequeue signal of the REPLACEMENT, FLUSH, DINV or I/O write request is asserted. Otherwise, if the request is not executable, the dequeue signal of the LOAD, STORE, FLUSH or DINV request is asserted.

In the WAIT RESP state, once a response from memory is ready, the dequeue signal of the LOAD, STORE, I/O read or INSTR request is asserted.

IO, Instruction and Core Interface requests buffering

Every request has a valid bit. When this bit is asserted, the CC is receiving a request from the CI. Two further bits signal a pending IO or INSTR request. All these bits are collected into a vector, which is used to schedule the requests. An arbiter (described [here]) schedules the requests with a round-robin policy. The output of the arbiter is a one-hot mask, named grants.

The following lines of code define when a request is executable and when a request is completed:

~
assign req_completed = dequeue_instr | dequeue_iom | cc_dequeue_store_request | cc_dequeue_load_request | cc_dequeue_replacement_request | cc_dequeue_flush_request | cc_dequeue_dinv_request;
assign execute_req = ~granted_need_snoop | (granted_need_snoop & ((snoop_hit & granted_need_hit_miss) | (~snoop_hit & ~granted_need_hit_miss)));
~

A request is completed when its dequeue signal is asserted, after the FSM has executed it.

A request is executable in any of these cases: it does not need a snoop operation; it needs a snoop operation, the FSM needs a hit (such as for a FLUSH request) and the snoop hits; or it needs a snoop operation, the FSM needs a miss (such as for a LOAD request) and the snoop misses.
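The executability condition above boils down to "no snoop needed, or the snoop outcome equals the outcome the FSM needs". The sketch below places the original expression next to this compact form so that the two can be compared. The module and its two output names are hypothetical and exist only for this comparison.

```systemverilog
// Hypothetical comparison module: original expression vs. compact equivalent.
module execute_req_sketch (
    input  logic granted_need_snoop,
    input  logic granted_need_hit_miss,  // 1: a hit is needed, 0: a miss is needed
    input  logic snoop_hit,
    output logic execute_req_orig,       // expression as written in the CC
    output logic execute_req_compact     // assumed equivalent rewrite
);
    assign execute_req_orig = ~granted_need_snoop |
        (granted_need_snoop & ((snoop_hit & granted_need_hit_miss) |
                               (~snoop_hit & ~granted_need_hit_miss)));

    // ~s | (s & x) reduces to ~s | x, and the inner term is an equality check.
    assign execute_req_compact = ~granted_need_snoop |
                                 (snoop_hit == granted_need_hit_miss);
endmodule
```

For a LOAD (snoop needed, miss needed), both forms are 1 when snoop_hit is 0 and 0 when snoop_hit is 1, matching the description above.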

IO and INSTR requests are managed in a different way.

IO Map request

There are two queues of size 8:

- IO FIFO REQUEST

- IO FIFO RESPONSE

The format of the queues is defined by the following lines of code:

~
typedef struct packed {
    thread_id_t thread;
    io_operation_t operation;
    dcache_address_t address;
    register_t data;
} io_fifo_t;
~

Requests are buffered in the IO FIFO REQUEST queue. When the IO FIFO REQUEST queue is full, the CC refuses further IO Map requests. Once the processing of a request has finished, the request is dequeued from IO FIFO REQUEST and its response is enqueued into IO FIFO RESPONSE. Once the LDST unit has consumed the response, it asserts the ldst_io_resp_consumed signal, and the response is dequeued from IO FIFO RESPONSE.

Two queues are needed because the LDST unit is implemented as an FSM, so it can be busy at times.

Instruction miss request

INSTR requests are buffered in the INSTR FIFO queue, of size 2. Each entry of the INSTR FIFO queue stores the address of the request. The response to a request is sent to the TC during the execution of the FSM, as in the lines of code below:

~
WAIT_RESP: begin
    if (m2n_response_valid) begin
        state <= IDLE;
        // handle req
        if (grants_reg[INSTR]) begin
            mem_instr_request_data_in <= m2n_response_data_swap;
            mem_instr_request_valid <= 1'b1;
~

The m2n_response_data_swap register holds the data from memory, with its endianness converted if needed.

One queue is enough because the MeC is implemented as a pipeline, so its response time is known.

Snoop managing

Snoop managing checks whether a snoop request results in a cache hit, through the following lines of code:

~
generate
    for ( dcache_way = 0; dcache_way < `DCACHE_WAY; dcache_way++ ) begin
        assign way_busy_oh[dcache_way] = ( ldst_snoop_privileges[dcache_way].can_read | ldst_snoop_privileges[dcache_way].can_write );
        assign way_matched_oh[dcache_way] = ( ldst_snoop_tag[dcache_way] == granted_address.tag ) & way_busy_oh[dcache_way];
    end
endgenerate
assign snoop_hit = |way_matched_oh;
assign ways_full = &way_busy_oh;
~

A way is a cache hit if a read or write privilege is held on it and the tag of the requested address is equal to the tag stored for that way in the tag array.

snoop_hit is asserted if at least one way hits. ways_full is asserted if all ways are busy.

The way_matched_oh array is the one-hot encoded index of the matched way. A dedicated component, described [here], converts a one-hot string into a natural number. Its output is placed on way_matched_idx.
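To make the one-hot to index conversion concrete, here is a minimal sketch of such a converter. It is not the component linked above: the module name, the parameter names and the OR-based implementation are assumptions made for illustration.

```systemverilog
// Hypothetical one-hot to binary index converter (illustrative only).
module oh_to_idx_sketch #(
    parameter int NUM_SIGNALS = 4,                    // assumed number of ways
    parameter int INDEX_WIDTH = $clog2(NUM_SIGNALS)
) (
    input  logic [NUM_SIGNALS-1:0] one_hot,
    output logic [INDEX_WIDTH-1:0] index
);
    always_comb begin
        index = '0;
        // OR together the indices of the set bits. With a one-hot input
        // exactly one index contributes, so the OR returns that index.
        for (int i = 0; i < NUM_SIGNALS; i++) begin
            if (one_hot[i]) index |= INDEX_WIDTH'(i);
        end
    end
endmodule
```

If the input is all zeros the sketch returns index 0, so the result is meaningful only when qualified by a signal such as snoop_hit.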

On every clock cycle in which a snoop hit occurs, the index of the matched way is saved so that the CC can use it later, thanks to these lines of code:

~
always_ff @( posedge clk, posedge reset ) begin
    if ( reset ) begin
        way_matched_reg <= dcache_way_idx_t'(0);
    end else if (snoop_hit) begin
        way_matched_reg <= way_matched_idx;
    end
end
~

Some requests need a snoop, namely LOAD/STORE, FLUSH and DINV, because they access the L1 cache to target a specific cache line, so the CC has to evaluate a cache hit. All the other types of request do not need a snoop: REPLACEMENT, because it replaces a cache line when the cache is full, and INSTR and IO, because they communicate directly with the memory.

The CC checks for a cache hit on every request that needs a snoop operation because, when several requests target the same address, the first one makes the CC retrieve the data from memory and refresh the data and privileges of the cache line. From the second request onwards, the CC detects a cache hit and does not have to access the memory. This improves the performance of nu+, because a memory access is an expensive operation.

Memory swap

nu+ is a parametrizable little-endian manycore, so it can be embedded in architectures whose memory is either big-endian or little-endian. The CC therefore includes logic that converts data from little-endian to big-endian and vice versa, when needed. As shown in the following lines of code, the CC interface has a parameter, called ENDSWAP, which is set by whoever instantiates the CC.

module sc_cache_controller #(
    parameter ENDSWAP = 0 )(
    input clk,
    input reset,
~

By default, ENDSWAP is 0, which means that no memory swap is needed.
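The following sketch shows one way a parameter like ENDSWAP could select between a pass-through and a byte swap at elaboration time. It is not the CC's swap logic: the module name, the WORD_BYTES and NUM_WORDS parameters, and the choice of reversing the bytes inside each word are all assumptions, since the page does not state the swap granularity.

```systemverilog
// Hypothetical parametrized endianness swap (illustrative only).
module endian_swap_sketch #(
    parameter int ENDSWAP    = 0,   // 0: pass through, non-zero: swap bytes
    parameter int WORD_BYTES = 4,   // assumed word size in bytes
    parameter int NUM_WORDS  = 16   // assumed number of words per cache line
) (
    input  logic [NUM_WORDS*WORD_BYTES*8-1:0] data_in,
    output logic [NUM_WORDS*WORD_BYTES*8-1:0] data_out
);
    generate
        if (ENDSWAP != 0) begin : g_swap
            // Reverse the byte order inside every word of the line.
            for (genvar w = 0; w < NUM_WORDS; w++) begin : g_word
                for (genvar b = 0; b < WORD_BYTES; b++) begin : g_byte
                    assign data_out[(w*WORD_BYTES + b)*8 +: 8] =
                           data_in[(w*WORD_BYTES + (WORD_BYTES-1-b))*8 +: 8];
                end
            end
        end else begin : g_pass
            // No swap requested: the data goes through unchanged.
            assign data_out = data_in;
        end
    endgenerate
endmodule
```

Because the selection happens in a generate block, an instance with ENDSWAP set to 0 contains only wires and adds no logic.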