Difference between revisions of "Synchronization"

| Line 1: | Line 1: | ||

| − | The nu+ manycore supports | + | The nu+ manycore supports a hardware synchronization mechanism based on barrier primitives. A barrier stalls all thread hitting it until all other threads reach the same synchronization point. |

| − | The mechanism of Barrier Synchronization implemented in this system is based on master-slave | + | The mechanism of Barrier Synchronization implemented in this system is based on the master-slave model, with master distributed all over the tiles instead of being centralized. We support more independent barriers in parallel execution on manycore in each time. For identifying the different barriers we have an ID for each Barrier, and each Synchronization Core Master handles a range of Barrier in manycore in order to spread the control all over the architecture. The main idea behind our solution is to provide a distributed approach inspired by the directory-based system. Our architecture aims to eliminate central synchronization masters, resulting in a better message balancing across the network. Combining a distributed approach, hardware-level messages, and a fine-grain control, the solution supports multiple barriers simultaneously from different core subsets. |

== Barrier Synchronization Protocol == | == Barrier Synchronization Protocol == | ||

| − | The | + | The implemented barrier protocol is based on a message passing. We have three types of message: |

| − | + | *'''Account_Message''': It notifies to ''Synchronization Core'' that the thread is arrived to point of synchronization. If this is the first message with the given barrier ID, the control logic initializes the structure and sets the counter to the number of thread involved in the synchronization. Subsequent account messages with the same ID will decrement the counter. | |

| − | *'''Account_Message''': It notifies to ''Synchronization Core'' that the thread is arrived to point of | + | *'''Release_Message''': When the counter hits zero, all threads are in the synchronization point. The control logic releases all threads sending a release message to each barrier core module at the core-level are arrived which releases the involved threads. |

| − | *'''Release_Message''': | ||

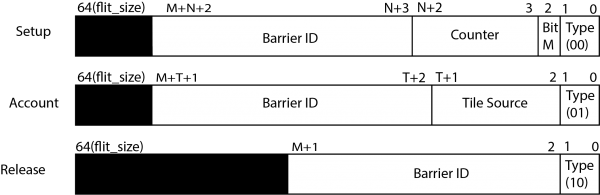

| − | + | The size of message change with a number of Barrier Synchronization supported, as view in the Figure: | |

| − | The size of message change with number of Barrier Synchronization supported, as view in | ||

[[File:SynchronizationMessages.png|600px]] | [[File:SynchronizationMessages.png|600px]] | ||

| − | The size of each message is of 1 ''flit'' (64 | + | The size of each message is of 1 ''flit'' (64 bits), and the field size of message change with the number of barriers supported. |

We have these fields in the messages: | We have these fields in the messages: | ||

*'''Type''': It specifies the type of message: '00'(Setup_Message), '01'(Account_Message), '10'(Release_message). The size is 2 bits; | *'''Type''': It specifies the type of message: '00'(Setup_Message), '01'(Account_Message), '10'(Release_message). The size is 2 bits; | ||

| Line 21: | Line 19: | ||

=== Example of Barrier === | === Example of Barrier === | ||

| − | + | ||

| + | Assume we have a manycore with 16 tiles: 14 processing tiles, one tile for the host interface, and one tile connected to the memory controller. The programmer executes a parallel kernel that involves the | ||

| + | 14 processor tiles. As a first step, a core sends a Setup message to the chosen synchronization master, containing a counter value set to 14 and the unique barrier ID. The synchronization master is chosen by a simple module operation on ID: T = ID mod TileNumber. The Synchronization Core, located in tile T, receives those values and initializes a counter, that will be assigned to this specific barrier from this moment onward. The most significant bits of the barrier ID locate the tile that manages that ID, as in a distributed directory approach. On the core side, when a thread hits the synchronization point, the Barrier Core in its tile sends an Account message to the master in tile T and freezes the thread. As soon as the master receives this type of message, it decrements the respective counter. When the counter reaches 0, all the involved cores have hit the synchronization point, and the master sends to each of them a Release message by multicast. When receiving the Release message, the Barrier Core releases the thread and resumes the execution flow. | ||

| + | |||

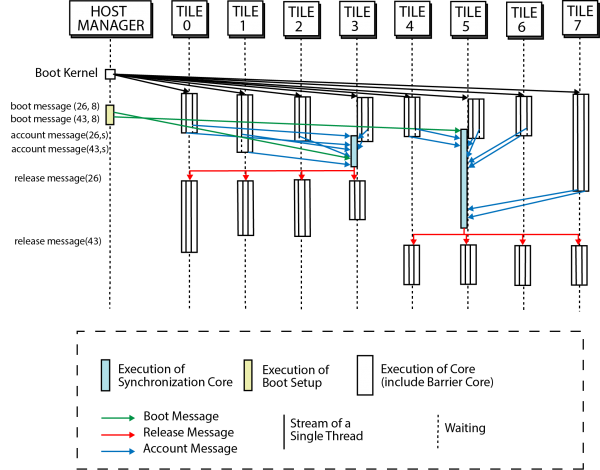

| + | === Example of Concurrent Barriers === | ||

| + | In this example (please refer to the Figure below) assume we have a manycore with 8 nu+ tiles, with two different groups of thread and one barrier synchronization request for each group. In group 1 is composed of threads between tile 0 to tile 3, and in group 2 the other tiles. Group 1 has a synchronization barrier with ID 26, whereas group 2 has a barrier with ID 43. The Barrier ID 26 and 43 are handled respectively by Synchronization Core in tile 3 and 5. | ||

[[File:ExampleBarrier.png|600px]] | [[File:ExampleBarrier.png|600px]] | ||

| − | In the | + | In the default configuration, each tile is equipped with an independent Synchronization Core. This allows concurrent barrier synchronization, spreading both the traffic network and the hardware overhead and sspeeding up synchronization in case of concurrent barriers ongoing from different thread groups. |

| − | |||

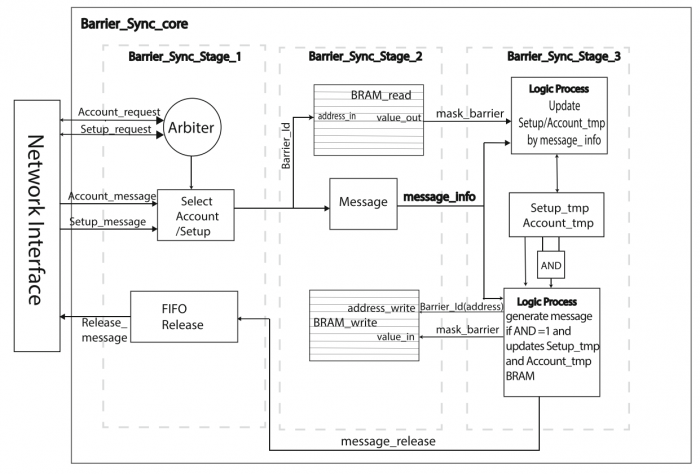

| − | === | + | === Synchronization Core === |

| − | + | [[File:Sync core.png|700px]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | The Synchronization Core is the key component of our solution. This module acts as the synchronization master, but unlike other hardware synchronization architectures, it is distributed among all tiles in the manycore. As explained above, the Boot Setup module selects a specific Synchronization Core based on the barrier ID set by the user. In this way, the architecture spreads the synchronization messages all over the manycore. By using | ||

| + | this approach, the synchronization master is no longer a network congestion point. The Figure above shows a simplified view of the Synchronization Core. The module is made of three stages: the first stage selects and schedules the Setup and the Account requests. The selected request steps into the second stage, which strips the control information from the message. In the last stage, the stripped request is finally processed. If it is a Setup request, the counter is initialized with the number of involved threads. On the other hand, if the request is an Account message, the counter is decremented by 1. When the counter is 0, all the involved cores have hit the synchronization point, and the master sends a multicast Release message reaching all of them. | ||

=== Barrier Core === | === Barrier Core === | ||

| − | + | The Barrier Core manages the synchronization on the core side. Each thread in a processing core can issue a barrier request through a specific barrier instruction introduced into the processor ISA. When a thread hits the synchronization point, the Barrier Core sends an Account message to the Synchronization Core, and stalls the requesting thread until a Release message from the master arrives. | |

| − | |||

| − | The | ||

| − | |||

Revision as of 13:44, 27 March 2019

The nu+ manycore supports a hardware synchronization mechanism based on barrier primitives. A barrier stalls every thread that reaches it until all the other participating threads reach the same synchronization point. The barrier synchronization mechanism implemented in this system is based on the master-slave model, with the masters distributed over the tiles instead of being centralized. Multiple independent barriers can be in progress in the manycore at the same time. Each barrier is identified by an ID, and each Synchronization Core master handles a range of barrier IDs, so that control is spread over the whole architecture. The main idea behind our solution is to provide a distributed approach inspired by directory-based systems. Our architecture aims to eliminate central synchronization masters, resulting in better balancing of messages across the network. By combining a distributed approach, hardware-level messages, and fine-grained control, the solution supports multiple simultaneous barriers from different core subsets.

Barrier Synchronization Protocol

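The implemented barrier protocol is based on message passing. Three message types are defined: Setup_Message, Account_Message, and Release_Message (their type codes are listed under the Type field below). The Account and Release messages work as follows: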

- Account_Message: It notifies the Synchronization Core that the thread has arrived at the synchronization point. If this is the first message with the given barrier ID, the control logic initializes the structure and sets the counter to the number of threads involved in the synchronization. Subsequent Account messages with the same ID decrement the counter.

- Release_Message: When the counter hits zero, all threads have reached the synchronization point. The control logic then sends a Release message to each core-level Barrier Core module, which releases the involved threads.

Each message occupies 1 flit (64 bits). The size of the individual fields changes with the number of barriers supported, as shown in the Figure. The messages contain the following fields:

- Type: It specifies the type of message: '00' (Setup_Message), '01' (Account_Message), '10' (Release_Message). The size is 2 bits.

- Counter: It specifies the number of hardware threads that take part in the barrier synchronization. The size is N bits, where N is log2 of the total number of hardware threads in the manycore.

- Barrier ID: It identifies the barrier. The size is M bits, where M is log2 of the total number of barriers supported by the architecture; it is defined in the file (file to be indicated).

- Tile Source: It specifies the ID of the tile that is the source of the barrier message. It is used to build the multicast destination of the Release message. The size is T bits, where T is log2 of the total number of tiles in the manycore.
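The field list above can be illustrated with a small packing sketch. This is not the nu+ hardware layout: the order of the fields inside the flit, the system parameters (128 hardware threads, 64 barriers, 16 tiles), and the names `pack` and `unpack` are all assumptions made for illustration. Only the field widths (2, N, M, and T bits) and the 64-bit flit size come from the text.

```python
# Hypothetical system parameters, chosen only for illustration.
HW_THREADS = 128  # total hardware threads in the manycore
BARRIERS = 64     # barriers supported by the architecture
TILES = 16        # tiles in the manycore

FLIT_BITS = 64
TYPE_BITS = 2
COUNTER_BITS = (HW_THREADS - 1).bit_length()  # N = log2(hardware threads)
ID_BITS = (BARRIERS - 1).bit_length()         # M = log2(barriers)
TILE_BITS = (TILES - 1).bit_length()          # T = log2(tiles)

# Type codes as listed in the Type field description.
SETUP, ACCOUNT, RELEASE = 0b00, 0b01, 0b10


def _check(value, bits, name):
    """Reject values that do not fit in the given field width."""
    if not 0 <= value < (1 << bits):
        raise ValueError(f"{name}={value} does not fit in {bits} bits")
    return value


def pack(msg_type, counter, barrier_id, tile_source):
    """Pack the four fields into one flit (field order is an assumption)."""
    assert TYPE_BITS + COUNTER_BITS + ID_BITS + TILE_BITS <= FLIT_BITS
    flit = _check(msg_type, TYPE_BITS, "type")
    flit = (flit << COUNTER_BITS) | _check(counter, COUNTER_BITS, "counter")
    flit = (flit << ID_BITS) | _check(barrier_id, ID_BITS, "barrier_id")
    flit = (flit << TILE_BITS) | _check(tile_source, TILE_BITS, "tile_source")
    return flit


def unpack(flit):
    """Inverse of pack: return (type, counter, barrier_id, tile_source)."""
    tile_source = flit & ((1 << TILE_BITS) - 1)
    flit >>= TILE_BITS
    barrier_id = flit & ((1 << ID_BITS) - 1)
    flit >>= ID_BITS
    counter = flit & ((1 << COUNTER_BITS) - 1)
    flit >>= COUNTER_BITS
    return flit & ((1 << TYPE_BITS) - 1), counter, barrier_id, tile_source
```

With these assumed parameters the fields take 2 + 7 + 6 + 4 = 19 bits, so a message fits in a single flit with room to spare.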

Example of Barrier

Assume we have a manycore with 16 tiles: 14 processing tiles, one tile for the host interface, and one tile connected to the memory controller. The programmer executes a parallel kernel that involves the 14 processing tiles. As a first step, a core sends a Setup message to the chosen synchronization master, containing a counter value set to 14 and the unique barrier ID. The synchronization master is chosen by a simple modulo operation on the ID: T = ID mod TileNumber. The Synchronization Core located in tile T receives those values and initializes a counter, which is assigned to this specific barrier from that moment onward. The most significant bits of the barrier ID locate the tile that manages that ID, as in a distributed directory approach. On the core side, when a thread hits the synchronization point, the Barrier Core in its tile sends an Account message to the master in tile T and freezes the thread. As soon as the master receives a message of this type, it decrements the respective counter. When the counter reaches 0, all the involved cores have hit the synchronization point, and the master sends a Release message to each of them by multicast. On receiving the Release message, the Barrier Core releases the thread, which resumes its execution flow.
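The sequence above can be traced with a small software model. This is a minimal sketch, not the hardware implementation: the class `SyncMaster`, the function `master_tile`, the barrier ID 5, and the numbering of the processing tiles as 0 to 13 are hypothetical choices made for illustration. The master is selected with the modulo rule stated in the text.

```python
TILE_NUMBER = 16  # tiles in the example manycore


def master_tile(barrier_id, tile_number=TILE_NUMBER):
    """Tile hosting the synchronization master: T = ID mod TileNumber."""
    return barrier_id % tile_number


class SyncMaster:
    """Software model of the counters kept by one Synchronization Core."""

    def __init__(self):
        self.counters = {}  # barrier ID -> threads still expected
        self.sources = {}   # barrier ID -> tiles to release by multicast

    def setup(self, barrier_id, count):
        """Setup message: assign a counter to this barrier ID."""
        self.counters[barrier_id] = count
        self.sources[barrier_id] = []

    def account(self, barrier_id, tile_source):
        """Account message: decrement the counter.

        Returns the multicast destination list when the counter reaches 0
        (the Release step), otherwise None.
        """
        self.sources[barrier_id].append(tile_source)
        self.counters[barrier_id] -= 1
        if self.counters[barrier_id] == 0:
            del self.counters[barrier_id]
            return self.sources.pop(barrier_id)
        return None


def run_example(barrier_id=5, processing_tiles=range(14)):
    """Replay the 14-tile example and return (master tile, released tiles)."""
    master = SyncMaster()
    master.setup(barrier_id, len(processing_tiles))
    released = None
    for tile in processing_tiles:
        released = master.account(barrier_id, tile)
    return master_tile(barrier_id), released
```

Calling `run_example()` sends one Setup and 14 Account messages; only the last Account message produces a release list, which contains all 14 processing tiles.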

Example of Concurrent Barriers

In this example (please refer to the Figure below), assume a manycore with 8 nu+ tiles and two different groups of threads, with one barrier synchronization request for each group. Group 1 is composed of the threads in tiles 0 to 3, and group 2 of the threads in the other tiles. Group 1 has a synchronization barrier with ID 26, whereas group 2 has a barrier with ID 43. Barrier IDs 26 and 43 are handled by the Synchronization Cores in tiles 3 and 5, respectively.

In the default configuration, each tile is equipped with an independent Synchronization Core. This allows concurrent barrier synchronization, spreading both the network traffic and the hardware overhead, and speeding up synchronization when barriers from different thread groups are in progress at the same time.
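The tile assignment in this example can be checked with a short calculation. The sketch below assumes 6-bit barrier IDs (64 supported barriers), which the text does not state; the function name `tile_from_msbs` is hypothetical. Under that assumption, taking the three most significant bits of the ID reproduces tiles 3 and 5, whereas the modulo rule from the previous example (ID mod 8) would give tiles 2 and 3.

```python
ID_BITS = 6    # assumption: 64 barriers supported, so 6-bit barrier IDs
TILE_BITS = 3  # 8 tiles in this example, so log2(8) = 3 bits


def tile_from_msbs(barrier_id, id_bits=ID_BITS, tile_bits=TILE_BITS):
    """Select the managing tile from the most significant bits of the ID."""
    return barrier_id >> (id_bits - tile_bits)


# 26 = 0b011010 -> top three bits 0b011 = tile 3
# 43 = 0b101011 -> top three bits 0b101 = tile 5
tiles = {barrier_id: tile_from_msbs(barrier_id) for barrier_id in (26, 43)}
```

Because the two IDs map to different tiles, the Account and Release traffic of the two groups is handled by separate Synchronization Cores.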

Synchronization Core

The Synchronization Core is the key component of our solution. This module acts as the synchronization master, but unlike other hardware synchronization architectures, it is distributed among all tiles in the manycore. As explained above, the Boot Setup module selects a specific Synchronization Core based on the barrier ID set by the user. In this way, the architecture spreads the synchronization messages all over the manycore. By using this approach, the synchronization master is no longer a network congestion point. The Figure above shows a simplified view of the Synchronization Core. The module is made of three stages: the first stage selects and schedules the Setup and the Account requests. The selected request steps into the second stage, which strips the control information from the message. In the last stage, the stripped request is finally processed. If it is a Setup request, the counter is initialized with the number of involved threads. On the other hand, if the request is an Account message, the counter is decremented by 1. When the counter is 0, all the involved cores have hit the synchronization point, and the master sends a multicast Release message reaching all of them.

Barrier Core

The Barrier Core manages the synchronization on the core side. Each thread in a processing core can issue a barrier request through a specific barrier instruction introduced into the processor ISA. When a thread hits the synchronization point, the Barrier Core sends an Account message to the Synchronization Core, and stalls the requesting thread until a Release message from the master arrives.
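The stall-until-release behavior can be mimicked in software. The sketch below is only an analogy built on Python threads, not a model of the barrier instruction or of the hardware: `SyncCoreModel`, `barrier`, and `run` are hypothetical names, and a `threading.Event` stands in for the multicast Release message.

```python
import threading


class SyncCoreModel:
    """Stand-in for the synchronization master: one counter, one release."""

    def __init__(self, count):
        self._lock = threading.Lock()
        self._counter = count
        self._release = threading.Event()  # plays the Release message

    def account(self):
        """Account message: decrement; release everyone when reaching 0."""
        with self._lock:
            self._counter -= 1
            if self._counter == 0:
                self._release.set()
        return self._release


def barrier(master):
    """Barrier Core side: send Account, then stall until Release arrives."""
    release = master.account()
    release.wait()


def run(num_threads=4):
    """Run num_threads workers through one barrier and return the log."""
    master = SyncCoreModel(num_threads)
    log = []
    log_lock = threading.Lock()

    def worker(tid):
        with log_lock:
            log.append(("before", tid))
        barrier(master)
        with log_lock:
            log.append(("after", tid))

    threads = [threading.Thread(target=worker, args=(t,))
               for t in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

In the returned log every "before" entry precedes every "after" entry, since no thread passes the barrier until the last Account message has been counted.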